Author(s): Ankit Chandankhede

Functional coverage is the most important metric in design verification, yet writing it accurately requires skill and is a manual process. Moreover, hitting complex cross-functional cover points requires complex directed test cases. Deploying AI to write the cover points and the sequences that hit complex scenarios makes test writing easier and speeds convergence on functional coverage for complex architectures such as graphics processing units (GPUs) and AI accelerators. The script also creates a hierarchical verification plan (HVP) that can be analyzed effectively. This HVP can also be reused across DUTs, which allows effective coverage to be measured across different levels of verification abstraction.

Functional verification is of paramount importance in ensuring that today's advanced semiconductor designs are tested completely, particularly cutting-edge designs such as Graphics Processing Units (GPUs) and AI accelerators. It is how teams maintain and deliver high-quality, functionally correct designs that meet performance standards for the systems powering modern computation on phones, servers, and gaming platforms, for rendering and, more recently, for inference and training of AI models.

Labor-intensive test development for such complex architectures has been a bottleneck and is subject to human error, whether in interpreting the architecture specification or in missing critical corner-case scenarios. Test planners in such an environment must define each functional cover point across different features, tabulating a series of functional scenarios as well as potential security scenarios from a hacker’s perspective. The functional coverage is then coded meticulously by hand, which often requires expertise in coding complex cover points, and the test cases are crafted afterward. This demands immense engineering effort and prolongs development and testing cycles. Such processes often hit pitfalls in execution, resulting in delayed product launches and missed market opportunities.

Modern computer architectures such as GPUs and AI accelerators are complex: they encompass numerous new features, data paths, newer kernel instructions, and new commands for processing a workload. Verification plays an important role in identifying design bugs and in reverifying bug fixes through comprehensive testing. Functional coverage provides a direct correlation between the test environment, the test plan, and design health.

Given these challenges, deploying artificial intelligence (AI) and script-based automation to converge functional coverage efficiently eases complex testing requirements and minimizes human error.

This paper underscores the importance of AI-driven and automation methodologies that address the complexities of functional verification in complex architectures. It presents a framework for creating a structured test plan that includes cover points, converting those cover points from a table into SystemVerilog functional coverage code, and mapping the coverpoints into a structured hierarchical verification plan (HVP) by feature, priority, and unit. This automation streamlines the most pivotal metric of the verification process, optimizing the writing and analysis of functional coverage for quicker convergence and a high-quality design.

The subsequent sections of this paper give an in-depth account of automating structured coverpoint scenarios, creating coverpoints, reanalyzing results using an AI model from Synopsys, HVP mapping, and real-time feedback to the current test constraints. The paper also highlights other bottlenecks in verification methodology, their impact, and potential solutions.

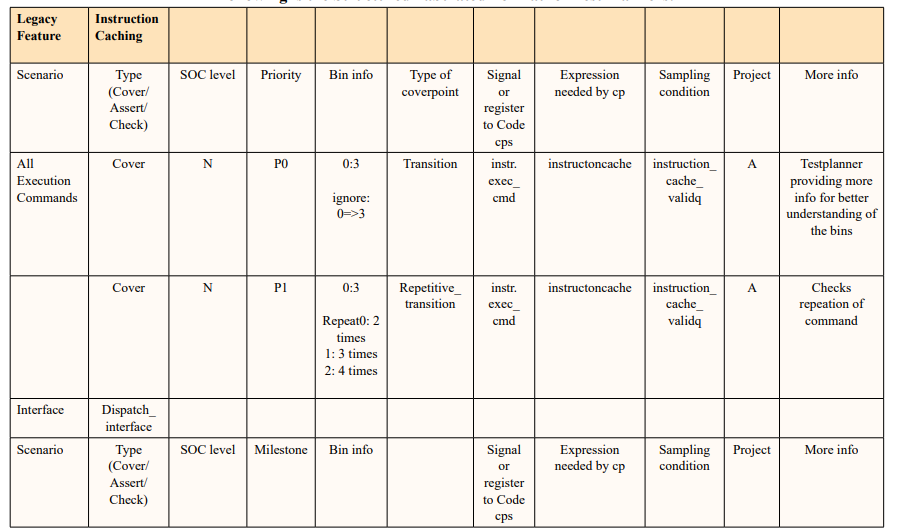

Test planners are given a specific format for tabulating the scenarios from the test plan, with priorities, features, and sampling conditions. Scripts take this tabulated format as input and create cover points with explicit bins, transition bins, repetition bins, wildcard bins, ignore bins, illegal bins, and cross coverage bins. Moreover, the script scales to parse protocol and packet information and generate coverage automatically, so it can be hooked to any interface that follows a particular protocol. This eliminates the burden of creating cover points through an exhaustive manual process and improves overall verification efficiency.

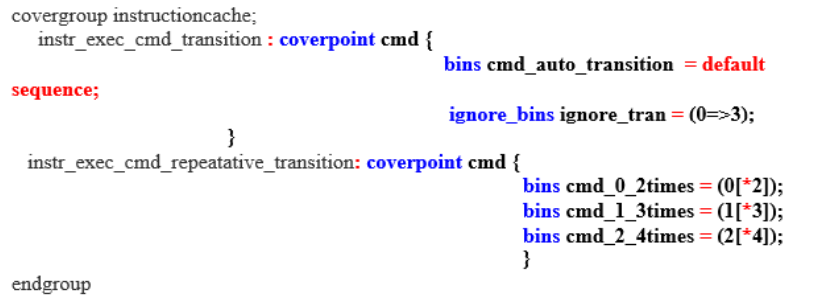

The coverage coding script uses the type-of-coverpoint column to decide which kind of cover point to create. It parses the bin info column for the values used to create bins on the signals listed in the "Signal or register to code" column. The following is an example of a generated coverpoint.

The name of the covergroup is taken from the scenario column, the coverpoint name is built from the type of coverpoint and the name of the signal, and each bin name is built from the type of bin.
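The naming rule above can be illustrated with a minimal Python sketch. It is not the author's script: the `CoverRow` fields, the `cg_`/`cp_` prefixes, the bin-type keys, and the default sampling event are all assumptions made for illustration, and only value-list bins (explicit, wildcard, ignore, illegal) are handled.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CoverRow:
    """One row of the planner's table (field names are hypothetical)."""
    scenario: str        # becomes the covergroup name
    cp_type: str         # "explicit", "wildcard", "ignore" or "illegal"
    signal: str          # signal or register to cover
    bin_info: List[str]  # one SystemVerilog value or range per bin


# Map the table's coverpoint type to the SystemVerilog bin keyword.
BIN_KEYWORD = {
    "explicit": "bins",
    "wildcard": "wildcard bins",
    "ignore": "ignore_bins",
    "illegal": "illegal_bins",
}


def render_covergroup(row: CoverRow, sample_event: str = "@(posedge clk)") -> str:
    """Return SystemVerilog covergroup text for a single table row."""
    keyword = BIN_KEYWORD[row.cp_type]
    lines = [
        f"covergroup cg_{row.scenario} {sample_event};",
        f"  cp_{row.cp_type}_{row.signal}: coverpoint {row.signal} {{",
    ]
    # Bin names are derived from the bin type plus a running index.
    for index, value in enumerate(row.bin_info):
        lines.append(f"    {keyword} {row.cp_type}_{index} = {{{value}}};")
    lines.append("  }")
    lines.append("endgroup")
    return "\n".join(lines)


if __name__ == "__main__":
    row = CoverRow("arb_req", "explicit", "req_id", ["0", "[1:3]"])
    print(render_covergroup(row))
```

Because the SystemVerilog text is produced from one template, a syntax mistake only has to be fixed once in the generator rather than in every hand-written covergroup.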

Transition Coverpoint: Transition coverpoints are often used to check transitions of finite state machines (FSMs), changes of virtual channel, transitions between instruction or data command types, high-priority to low-priority cycles, and different sources going into arbiters [1]. Such transition coverpoints expose corner-case scenarios but are hard to code. The approach therefore parses the signal with the specific values listed in bin_info, creates default *_auto_transition bins, and creates separate bins for the transitions to be ignored; this way all transitions are covered except the ignored or illegal ones. Repetition cover points are also defined, based on the number of repetitions expected of a particular value on a signal [2].
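As a rough illustration of this transition scheme, the Python sketch below emits an auto-transition bin array over every listed value, one `ignore_bins` entry per excluded transition, and one repetition bin per requested repeat count. The function name, its arguments, and the bin naming beyond the `_auto_transition` suffix are assumptions, and values are assumed to be enum names or plain decimals so they can be reused inside bin names.

```python
from typing import Dict, Iterable, List, Optional, Tuple


def render_transition_coverpoint(
    signal: str,
    values: List[str],
    ignored: Iterable[Tuple[str, str]] = (),
    repeats: Optional[Dict[str, int]] = None,
) -> str:
    """Return SystemVerilog text for a transition coverpoint on `signal`."""
    value_set = ", ".join(values)
    lines = [
        f"cp_{signal}_transition: coverpoint {signal} {{",
        # One bin per (source => destination) pair among the listed values.
        f"  bins {signal}_auto_transition[] = ({value_set} => {value_set});",
    ]
    # Transitions the planner marked as not of interest are excluded.
    for index, (src, dst) in enumerate(ignored):
        lines.append(f"  ignore_bins {signal}_ign_{index} = ({src} => {dst});")
    # Consecutive repetition: `value` seen `count` times in a row.
    for value, count in (repeats or {}).items():
        lines.append(
            f"  bins {signal}_rep_{value}_x{count} = ({value} [* {count}]);"
        )
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(
        render_transition_coverpoint(
            "state",
            ["IDLE", "BUSY", "DONE"],
            ignored=[("DONE", "BUSY")],
            repeats={"BUSY": 3},
        )
    )
```

Swapping `ignore_bins` for `illegal_bins` in the same loop would flag a forbidden transition as an error instead of silently excluding it from coverage.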

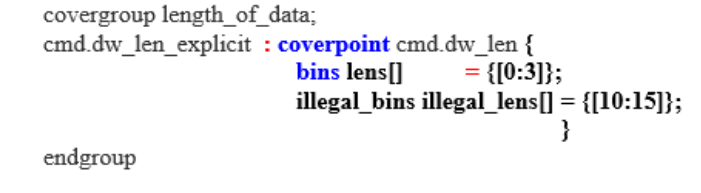

Implicit Coverpoint: The script takes the values of the implicit bins from the bin info column and the signal to cover from the "Signal or register to code" column.

Note: The illustrated example shows an implicit coverpoint created on a signal from an interface; however, an implicit coverpoint can be created on any signal [2].

Likewise, the script generates the other types of cover points from the table filled in by the test planner. This eliminates the coding cycle for such functional coverage and the repeated compile iterations, since the generated cover points are guaranteed to compile successfully.

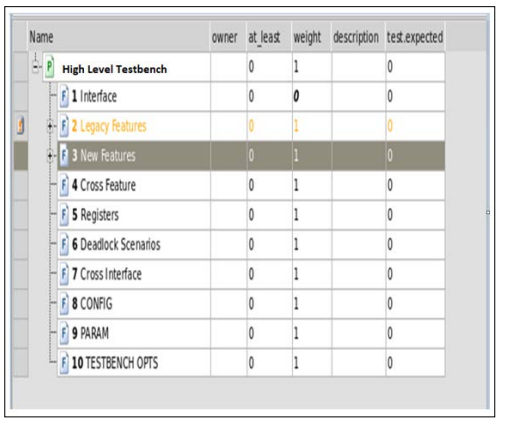

A hierarchical verification plan (HVP) is used to analyze coverpoints in a more structured way. It is often mismanaged because coverpoints are not categorized properly, which makes it challenging to decide which coverpoints to analyze and focus on first. The automation script creates a structured HVP from Table 1 above. The HVP includes all the scenarios listed in the table, organized by feature, by the unit each belongs to, and by priority. This allows the engineer analyzing functional coverage to manage the work effectively and to focus on the highest-priority items, on specific features, and on complex scenarios such as cross-feature and deadlock scenarios.

The script also filters out scenarios that do not apply to a project, based on the project column. If the entry in this column is empty, the script includes the scenario in all projects by default.
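The filtering and grouping just described can be sketched in Python as follows. This is only an assumed shape for the data, not the format of an actual HVP file: the column keys (`project`, `feature`, `unit`, `priority`), the comma-separated project list, and the convention that priority 1 is the highest are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def is_applicable(row: dict, project: str) -> bool:
    """An empty project column means the scenario applies to every project."""
    listed = [p.strip() for p in (row.get("project") or "").split(",") if p.strip()]
    return not listed or project in listed


def build_plan(rows: List[dict], project: str) -> Dict[str, Dict[str, List[dict]]]:
    """Group applicable scenarios as feature -> unit -> rows sorted by priority."""
    plan = defaultdict(lambda: defaultdict(list))
    for row in rows:
        if is_applicable(row, project):
            plan[row["feature"]][row["unit"]].append(row)
    # Convert to plain dicts and put the highest priority (lowest number) first.
    return {
        feature: {
            unit: sorted(items, key=lambda r: r["priority"])
            for unit, items in units.items()
        }
        for feature, units in plan.items()
    }


if __name__ == "__main__":
    table = [
        {"scenario": "cache_hit_miss", "feature": "cache", "unit": "l2",
         "priority": 2, "project": ""},
        {"scenario": "arb_deadlock", "feature": "cache", "unit": "l2",
         "priority": 1, "project": "projA"},
        {"scenario": "vc_switch", "feature": "noc", "unit": "router",
         "priority": 1, "project": "projB"},
    ]
    for feature, units in build_plan(table, "projA").items():
        for unit, items in units.items():
            print(feature, unit, [r["scenario"] for r in items])
```

Keeping the plan as a nested mapping makes it straightforward to walk the hierarchy and emit whatever plan format the coverage tool expects.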

The first entry in the table defines the type of covergroup/coverpoint, Interface:

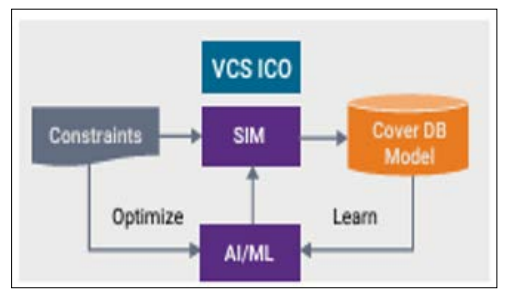

Analyzing coverpoints and identifying under- or over-constrained stimulus is a difficult and prolonged engineering process. A Synopsys tool is deployed for this analysis by feeding back the coverage generated after regression of the test suites. The tool identifies under-constraints from the stimulus and the coverage report, thereby exposing gaps in the test sequences, and optimizes stimulus generation, which in turn improves test suite quality and leads to earlier convergence of functional coverage.

Figure 1: Constrained Random Verification with ICO

A verification team usually includes multiple engineers. Test planners and test or sequence writers are often different people, so team members must stay synchronized during test development and coverage convergence. The structured table gives test writers an in-depth understanding of the test planner's intent, which reduces feedback iterations and improves communication between test planners and test writers.

Case Study: Application Across Different Projects and DUTs

A case study using this approach shows a faster cycle for creating bug-free coverpoints from the test planning stage, saving about 85% of the coding effort on cover points. Very complex and cross-IP coverpoints are challenging to create through such automation and therefore remain a manual process. However, relieving the effort of creating certain coverpoints through automation allows verification engineers to focus on coverpoints of greater complexity.

The structured HVP has been key to efficient analysis of functional coverage and has helped verification engineers focus on high-priority coverpoints and therefore close coverage quickly.

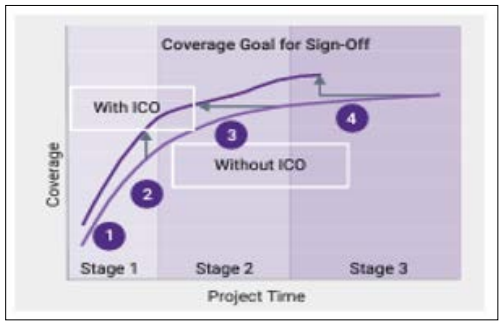

Furthermore, the AI tool from Synopsys assists verification engineers in identifying under-constraints and in automatically creating stimulus to hit certain coverpoints [4]. Automated stimulus from the Synopsys tool has also helped find corner-case scenarios, specifically in cache hit-miss behavior, arbiters, and command execution scenarios in GPUs and AI accelerators.

Figure 2: Effects of ICO on Coverage Convergence

The integration of automation and the AI tool from Synopsys into functional verification significantly enhances the efficiency and accuracy of coverage-driven verification for complex semiconductor designs. By automating the creation of functional coverage and HVPs, this approach not only accelerates verification cycles but also improves overall design quality and reliability.

Future research directions include expanding the capability to create sequences from the planned coverpoints using AI, which would allow verification engineers to shorten the test development cycle and focus on any complex test cases that are still missing. Further optimizing the current scripts to handle more complex coverpoints and to produce a better HVP structure across different types of architectures is a promising endeavor. Additionally, AI’s ability to debug failing cases and assist verification engineers through grueling debug cycles remains to be explored.