Author(s): Abhishek Shukla

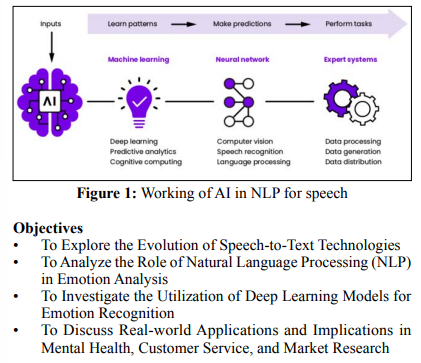

Artificial Intelligence (AI) and Machine Learning (ML) technologies have revolutionized various domains, and one of their fascinating applications is analyzing human emotions through speech-to-text conversion. This article examines the use of AI and ML to convert spoken words into text and then evaluate the emotions embedded within the speech. We explore the development of speech-to-text technologies, the essential function of natural language processing (NLP), and the use of deep learning models to understand emotional cues. Our findings illustrate how these technologies are altering fields like mental health, customer service, and market research by scrutinizing the emotions conveyed in spoken language, granting a deeper understanding of human emotional states.

In recent times, the convergence of cutting-edge technologies, namely Artificial Intelligence (AI) and Machine Learning (ML), has given rise to transformative breakthroughs across diverse industries. Among the myriad of pioneering developments that have emerged, one particularly fascinating application revolves around the profound understanding and analysis of human emotions, achieved through the conversion of spoken language into text. This article endeavors to shed light on the substantial potential of these technologies in unraveling the intricate tapestry of human emotions concealed within spoken words.

In the pursuit of understanding and analyzing these emotions, AI and ML play a pivotal role. They serve as the bridge between the spoken word and the unspoken emotional context. While this technological marriage may appear to be a recent phenomenon, its roots extend deep into the history of human-computer interaction and linguistic analysis [1].

The journey towards decoding human emotions from spoken language is a multidimensional one, encompassing several layers of complexity. It requires not only an accurate conversion of spoken words into text but also an intricate understanding of linguistic structures, sentiment analysis, and the dynamics of emotional expression. Each of these layers contributes to the holistic process of emotional analysis [2].

The availability of vast datasets and improvements in hardware infrastructure further fueled this transformation. These datasets, containing a diverse array of spoken language samples, enabled AI models to adapt and learn from a rich tapestry of linguistic diversity. The result was a quantum leap in the accuracy of speech-to-text conversion.

But converting spoken language into text is just the first step in this intricate dance of understanding emotions. The true magic unfolds when we turn our attention to Natural Language Processing (NLP), a critical component of the emotional analysis process [3].

NLP is the bridge that connects the words on a page to the emotions within. It encompasses a wide array of techniques and methodologies designed to extract meaning, sentiment, and emotional cues from text data. At its heart, NLP seeks to unravel the layers of context, semantics, and sentiment that are woven into the fabric of human language [3].

In the context of emotional analysis, NLP plays a pivotal role in several key areas. First and foremost is the extraction of sentiment. Sentiment analysis models, often hinging on deep learning architectures such as Long Short-Term Memory (LSTM) networks, are deployed to discern the emotional tone of text data. These models can categorize text into positive, negative, or neutral sentiments, providing a foundational understanding of the emotional context [4].

Yet, emotions are far from binary; they are a rich tapestry of feelings that go beyond mere positivity or negativity. This is where the true complexity of NLP-driven emotional analysis emerges. NLP models are trained to recognize a spectrum of emotional states, from happiness and sadness to anger and fear. Lexicons and sentiment analysis tools aid in associating specific words and phrases with corresponding emotions, allowing for a more nuanced understanding [4].
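The lexicon-based association of words with emotions described above can be sketched in a few lines of Python. This is a minimal illustration, not a production method: the tiny `EMOTION_LEXICON` and the function names are hypothetical, and a real system would rely on a curated lexicon with far broader coverage.

```python
import re
from collections import Counter

# Hypothetical toy lexicon, for illustration only.
EMOTION_LEXICON = {
    "happy": "happiness", "delighted": "happiness", "great": "happiness",
    "sad": "sadness", "miserable": "sadness", "lonely": "sadness",
    "angry": "anger", "furious": "anger", "annoyed": "anger",
    "afraid": "fear", "scared": "fear", "worried": "fear",
}


def emotion_counts(text: str) -> Counter:
    """Count lexicon hits per emotion in a transcribed utterance."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return Counter(EMOTION_LEXICON[t] for t in tokens if t in EMOTION_LEXICON)


def dominant_emotion(text: str) -> str:
    """Return the most frequent emotion, or 'neutral' when nothing matches."""
    counts = emotion_counts(text)
    return counts.most_common(1)[0][0] if counts else "neutral"
```

A plain word lookup like this ignores negation and context ("not happy" still counts as happiness), which is one reason the trained models discussed in this article are preferred in practice.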

Contextual analysis forms another vital facet of NLP-driven emotional analysis. Conversations are not isolated sequences of words; they are dynamic exchanges where emotions ebb and flow. NLP models excel at contextual analysis, tracking how emotions evolve throughout a conversation, uncovering the emotional journey of the speaker. This temporal dimension adds depth and richness to the emotional analysis, akin to reading the emotional chapters of a person's life.
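One simple way to follow how emotion evolves across a conversation is to smooth per-turn scores over time. The sketch below assumes that an upstream model has already produced a valence score between -1 (negative) and +1 (positive) for each turn; the function names, the smoothing factor `alpha`, and the 0.1 trend margin are illustrative assumptions.

```python
from typing import Iterable, List


def smooth_valence(turn_scores: Iterable[float], alpha: float = 0.5) -> List[float]:
    """Exponentially smooth per-turn valence scores (-1 negative .. +1 positive)."""
    smoothed: List[float] = []
    current = None
    for score in turn_scores:
        # The first turn seeds the average; later turns are blended in.
        current = score if current is None else alpha * score + (1 - alpha) * current
        smoothed.append(current)
    return smoothed


def trend(smoothed: List[float], margin: float = 0.1) -> str:
    """Label the overall direction of the conversation."""
    if len(smoothed) < 2:
        return "flat"
    delta = smoothed[-1] - smoothed[0]
    if delta > margin:
        return "improving"
    if delta < -margin:
        return "worsening"
    return "flat"
```

A higher `alpha` makes the curve react faster to the latest turn, while a lower value emphasizes the longer emotional arc of the conversation.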

As we journey deeper into the realm of AI and ML-driven emotional analysis, it becomes evident that these technologies have transcended the realm of theoretical possibilities. They are making a tangible impact on our daily lives, infiltrating diverse domains and reshaping the way we interact with technology and with each other [5].

In the realm of customer service, the impact of AI-driven emotional analysis is equally profound. Companies are leveraging this technology to assess customer satisfaction and detect dissatisfied customers in real time. Imagine calling a customer service hotline and having the system not only address your concerns but also gauge your emotional state throughout the conversation.

If the system detects frustration or dissatisfaction, it can prompt a human customer service representative to intervene, ensuring a positive customer experience. This real-time emotional feedback loop has the potential to elevate customer service to unprecedented levels, fostering customer loyalty and satisfaction [1].
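The escalation rule described above could look roughly like the following sketch. It assumes each customer turn has already been assigned an emotion label by an upstream model; the label names in `NEGATIVE`, the window size, and the threshold are illustrative assumptions rather than values from any particular product.

```python
from typing import Sequence

# Assumed label set for "negative" customer states.
NEGATIVE = {"frustration", "anger", "dissatisfaction"}


def should_escalate(turn_labels: Sequence[str], window: int = 3, threshold: int = 2) -> bool:
    """Escalate when at least `threshold` of the last `window` turns are negative."""
    recent = turn_labels[-window:]
    return sum(label in NEGATIVE for label in recent) >= threshold
```

Looking at a short window instead of a single turn avoids handing the call to a human agent because of one isolated negative remark.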

Market research is yet another domain where AI-powered emotional analysis is making waves. Companies are using these technologies to gauge consumer reactions to products, advertisements, and brand messaging. By analyzing the emotional responses embedded within consumer feedback, businesses can gain

This article addresses the following research questions to achieve the outlined objectives:

Speech-to-text technologies have undergone a remarkable evolution over the years. Initially, these systems were limited in accuracy and efficiency, primarily due to challenges in recognizing various accents, dialects, and speech impediments. However, advancements in AI and ML have revolutionized this field [2].

In the early days, the accuracy of speech-to-text systems left much to be desired. Conversations transcribed by these systems often bore the scars of misinterpretation, with errors and inaccuracies riddling the converted text. Accents, with their diverse cadences and intonations, proved to be formidable adversaries for the rudimentary algorithms at play. Dialects, rich in their regional intricacies, further confounded the efforts to attain reliable transcription. Additionally, the presence of speech impediments, ranging from stutters to lisps, introduced yet another layer of complexity into the equation [4].

Yet, despite these formidable challenges, the human spirit of innovation pressed on. Technological pioneers recognized the vast potential of speech-to-text conversion and set out on a journey of relentless exploration and refinement. The result of this tireless pursuit was a profound transformation that reshaped the very essence of speech-to-text technologies [5].

The availability of extensive datasets, comprising diverse linguistic patterns and variations, has provided the nourishment necessary for these algorithms to evolve. With each new data point, the algorithms refine their understanding of complex sentence structures, nuances in pronunciation, and contextual intricacies. This ceaseless learning process has been instrumental in elevating the accuracy and reliability of speech-to-text conversion to unprecedented heights.

The hardware ecosystem supporting these algorithms has also undergone substantial enhancements. The exponential growth in computing power, often driven by leaps in processor technology, has enabled these deep learning models to flex their cognitive muscles. The intricate calculations and pattern recognition tasks, once deemed computationally intensive, have now become routine operations within the realm of these advanced algorithms. This symbiotic relationship between software and hardware has unlocked the true potential of modern speech-to-text engines [4].

These cloud-based services serve as beacons of possibility, offering developers a toolkit replete with the power to harness the capabilities of highly accurate speech-to-text conversion. They provide the infrastructure and resources required to seamlessly integrate speech recognition capabilities into a myriad of applications and services.

In this ever-evolving landscape, the implications of these advancements are profound. From healthcare to education, from entertainment to accessibility, the transformative potential of accurate speech-to-text conversion knows no bounds. The barriers that once hindered effective communication have been dismantled, opening doors to inclusion and understanding [2].

Role of Natural Language Processing (NLP) in Emotion Analysis

To delve deeper into emotions, NLP models are trained to recognize specific emotional states such as happiness, sadness, anger, and fear. Lexicons and sentiment analysis tools aid in associating words and phrases with corresponding emotions. Additionally, contextual analysis helps in understanding how emotions evolve throughout a conversation, providing valuable insights into the emotional journey of the speaker [3].

Utilization of Deep Learning Models for Emotion Recognition

Deep learning models have revolutionized emotion recognition from speech-to-text data. Recurrent neural networks (RNNs) and transformers have demonstrated remarkable capabilities in capturing subtle emotional nuances in spoken language [3].

Emotion recognition models are trained on vast datasets containing transcribed conversations with associated emotion labels. These models learn to identify emotional cues from linguistic patterns, voice intonation, and speech cadence. As a result, they can categorize text into various emotional states accurately.

The role of Natural Language Processing (NLP) in the analysis of emotions from text data is pivotal, offering a profound understanding of the intricate nuances hidden within written language. This essential component of emotion analysis relies on a blend of linguistic expertise, computational algorithms, and an acute understanding of human expression. In essence, it bridges the gap between words on a page and the profound emotions that underlie them, enriching our comprehension of the human experience.

In this journey of unraveling the emotional tapestry of text, NLP serves as our guide. It empowers us to peer beyond the surface of words and sentences, delving into the sentiments and emotions that infuse written language. At its core, NLP is the art of understanding human language - the patterns, the semantics, and the unspoken nuances that define our communication [3].

To grasp the essence of NLP's role in emotion analysis, let us embark on a voyage through its intricate layers.

One of the foundational pillars of emotion analysis within NLP is sentiment analysis. This practice involves discerning the overall emotional tone of a piece of text, be it positive, negative, or neutral. Sentiment analysis models, which form the backbone of this endeavor, are often anchored in deep learning architectures like Long Short-Term Memory (LSTM) networks.

Emotion analysis can aid mental health professionals in monitoring patients' emotional well-being remotely. It helps identify signs of depression, anxiety, or other mental health conditions through conversational cues.

Companies are using emotion analysis to assess customer satisfaction and detect dissatisfied customers in real time. This allows for immediate intervention and improved customer experience.

Emotion analysis in market research helps companies gauge consumer reactions to products, advertisements, and brand messaging. It provides invaluable insights for product development and marketing strategies [2].

In marketing and customer service, NLP helps companies gauge consumer reactions to products, advertisements, and brand messaging. By understanding the emotional responses embedded within customer feedback, businesses can tailor their strategies to resonate with their target audience's emotions.

In literature and content analysis, NLP enables scholars and researchers to delve deeper into the emotional undercurrents of texts. It enriches our understanding of literary works and cultural artifacts by uncovering the emotions woven into their narratives.

To implement these strategies, the following steps are proposed, based on the research.

First, use an established speech-to-text (STT) engine or API such as Google Cloud Speech-to-Text, IBM Watson Speech to Text, or Microsoft Azure Speech Services. All of these services convert spoken words into text [1].

For this step, choose a reliable STT engine or API that supports real-time processing and provides transcriptions with as few errors as possible.

Implement various NLP techniques to process and analyze the converted text. Also use pre-trained sentiment analysis models, or train models on emotion-labeled datasets, to understand the emotional content within the text [2].

For this, utilize NLP libraries like spaCy for tokenization and part-of-speech tagging. Sentiment analysis typically requires an additional model or component on top of spaCy's default pipelines.

Apply deep learning models such as recurrent neural networks (RNNs) or long short-term memory (LSTM) networks to capture the nuances of emotional cues in speech, and train the models on a diverse dataset to increase accuracy [3]. Deep learning frameworks such as TensorFlow or PyTorch can be used to build and deploy the emotion recognition models [3].
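For reference, the recurrent models named above process a transcript one token at a time. In the standard LSTM formulation, with input vector $x_t$, hidden state $h_t$, cell state $c_t$, logistic sigmoid $\sigma$, and element-wise product $\odot$, one time step is:

$$
\begin{aligned}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) \\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$

One common way to turn this into an emotion classifier, given here as an assumption and not necessarily the setup behind the results cited in this article, is to apply a softmax layer to the final hidden state: $\hat{y} = \operatorname{softmax}(W_y h_T + b_y)$, where each component of $\hat{y}$ is the predicted probability of one emotion label.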

Choose an STT engine that aligns with your application requirements, considering factors such as language support, accuracy, and real-time processing [4].

After selection, integrate the STT engine with the relevant application through its API. Then implement an error-handling mechanism to address variations in background noise, clarity, and speech.
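The error handling around the STT call might be sketched as follows. To stay vendor-neutral, the engine is passed in as a plain callable: `Transcript`, `transcribe_with_retry`, and all parameters are hypothetical names for illustration, and no real provider's API is shown here. The sketch assumes the engine reports a confidence value and raises `ConnectionError` or `TimeoutError` on transient failures.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Transcript:
    text: str
    confidence: float  # assumed to be in 0.0 .. 1.0, as reported by the engine


def transcribe_with_retry(
    transcribe: Callable[[bytes], Transcript],
    audio: bytes,
    min_confidence: float = 0.6,
    max_attempts: int = 3,
    backoff_seconds: float = 0.0,
) -> Optional[Transcript]:
    """Call the engine, retrying transient failures; None means 'no usable text'."""
    for attempt in range(1, max_attempts + 1):
        try:
            result = transcribe(audio)
        except (ConnectionError, TimeoutError):
            # Transient failure: wait a little longer each time, then retry.
            time.sleep(backoff_seconds * attempt)
            continue
        if result.text.strip() and result.confidence >= min_confidence:
            return result
        # Empty or low-confidence output (for example, noisy audio): retrying
        # the same audio is unlikely to help, so let the caller decide.
        return None
    return None
```

A `None` result lets the application ask the speaker to repeat, or skip emotion analysis for that segment, instead of analyzing unreliable text.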

Use popular NLP libraries such as spaCy and NLTK to perform tasks like sentiment analysis, tokenization, and stemming on the converted text [5].

This can be done by developing a pipeline that preprocesses the transcribed text, applies NLP techniques, and extracts emotional features. In addition, explore pre-trained models for emotion detection, or train custom models with the help of labeled emotional datasets.
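The shape of such a pipeline can be illustrated with the standard library alone. The sketch below is a simplified stand-in: in practice the tokenizer and stemmer would come from a library such as NLTK or spaCy, and the stopword list, suffix rules, and feature names used here are illustrative assumptions.

```python
import re
from typing import Dict, List

# Small illustrative lists; real pipelines use library-provided resources.
STOPWORDS = {"the", "a", "an", "is", "am", "are", "i", "and", "to", "of"}
NEGATIONS = {"not", "no", "never"}


def tokenize(text: str) -> List[str]:
    """Lowercase the transcript and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def stem(token: str) -> str:
    """Very crude suffix stripping; a stand-in for a real stemmer."""
    for suffix in ("ing", "ed", "ly", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def extract_features(text: str) -> Dict[str, object]:
    """Preprocess a transcript and return simple features for an emotion model."""
    stems = [stem(t) for t in tokenize(text) if t not in STOPWORDS]
    return {
        "stems": stems,
        "n_tokens": len(stems),
        "negations": sum(s in NEGATIONS for s in stems),
    }
```

The resulting feature dictionary is what a downstream emotion classifier, pre-trained or custom, would consume.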

The deep learning models can be implemented by designing a neural network architecture that accounts for the complexity of emotional nuances. Also, train the model on a diverse dataset that incorporates different emotional expressions and speech patterns.

The NLP approach has three important limitations. The first is context understanding. NLP models may struggle to understand context, especially in conversations with changing topics. This can be mitigated by implementing contextual embeddings or context-aware models, that is, models that take the conversation history into account for more accurate emotion recognition.

The second is contextual and cultural variation. Emotions are often expressed differently across cultures and contexts, which makes it challenging for NLP models to interpret emotional nuances accurately. This limitation can be minimized by training models on diverse datasets that include samples from different contextual and cultural backgrounds, and by incorporating cultural context features to enhance the model's cross-cultural adaptability.

The third is irony and sarcasm. NLP models have difficulty recognizing irony and sarcasm, which may lead to misinterpretation of emotions. This can be addressed by integrating sentiment analysis models that are specifically trained to identify sarcastic or ironic language. Advanced linguistic analysis can also help detect cues indicative of sarcasm or irony [5].

There are some innovative solutions to mitigate these challenges. The first is context-aware models, which consider the conversation's overall context to better understand and interpret emotional cues. These models can be implemented using recurrent neural networks or transformer-based models that capture sequential context in conversations.
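Before any context-aware model can use conversation history, that history has to be packaged into its input. The sketch below shows one simple way to do this with a sliding window of recent turns. The class name, the window size, and the `[SEP]` separator string are illustrative assumptions; the right input format depends on the model actually used.

```python
from collections import deque
from typing import Deque, Tuple


class ConversationContext:
    """Keep the last `max_turns` turns and build a context-augmented model input."""

    def __init__(self, max_turns: int = 3) -> None:
        # A bounded deque silently drops the oldest turn when full.
        self.turns: Deque[Tuple[str, str]] = deque(maxlen=max_turns)

    def add_turn(self, speaker: str, text: str) -> None:
        self.turns.append((speaker, text))

    def model_input(self, separator: str = " [SEP] ") -> str:
        """Join the retained turns, oldest first, into a single string."""
        return separator.join(f"{speaker}: {text}" for speaker, text in self.turns)
```

For example, after adding the turns `("customer", "a")`, `("agent", "b")`, and `("customer", "c")` to a context with `max_turns=2`, `model_input()` contains only the last two turns.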

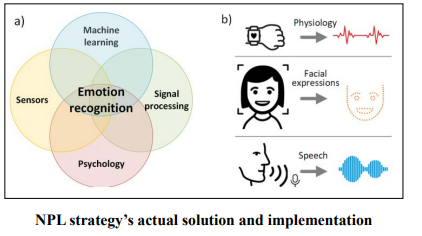

The next solution is a multimodal approach, in which speech analysis is combined with facial expression recognition and other multimodal inputs to provide a more comprehensive understanding of emotions. It can be implemented with computer vision models for facial emotion recognition, whose results are fused with the speech-based emotion predictions.
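Fusing the two prediction streams can be as simple as a weighted average of their probability distributions, often called late fusion. The following is a minimal sketch under the assumption that both the speech model and the facial model output a probability per emotion label; the function name and the default weight are illustrative.

```python
from typing import Dict


def late_fusion(
    speech: Dict[str, float],
    face: Dict[str, float],
    speech_weight: float = 0.5,
) -> Dict[str, float]:
    """Weighted average of two emotion probability distributions, renormalized."""
    labels = set(speech) | set(face)
    fused = {
        label: speech_weight * speech.get(label, 0.0)
        + (1.0 - speech_weight) * face.get(label, 0.0)
        for label in labels
    }
    total = sum(fused.values())
    if total == 0:
        raise ValueError("both inputs are empty or all-zero")
    # Renormalize so the fused scores again sum to 1.
    return {label: p / total for label, p in fused.items()}
```

The weight can be tuned on validation data, for instance lowering `speech_weight` when the audio channel is noisy.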

The third solution is continuous learning: implement a continuous learning mechanism that updates models with new data and ensures adaptability to evolving emotional and linguistic trends. It can be implemented through online learning techniques that adapt the model as linguistic patterns and expressions change over time.
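To make the idea of online learning concrete, the sketch below updates a very small model one labeled example at a time, instead of retraining from scratch. It uses binary logistic regression over word tokens as a deliberately simple stand-in for the deep models discussed in this article; the class name, learning rate, and binary label scheme (1 for positive, 0 for negative) are assumptions.

```python
import math
from typing import Dict, Iterable


class OnlineSentimentModel:
    """Binary logistic regression over word tokens, updated per example."""

    def __init__(self, learning_rate: float = 0.5) -> None:
        self.weights: Dict[str, float] = {}
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, tokens: Iterable[str]) -> float:
        """Probability that the utterance is positive (label 1)."""
        z = self.bias + sum(self.weights.get(t, 0.0) for t in tokens)
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, tokens: Iterable[str], label: int) -> None:
        """One stochastic gradient step on a single newly labeled example."""
        tokens = list(tokens)
        error = label - self.predict_proba(tokens)
        self.bias += self.learning_rate * error
        for t in tokens:
            self.weights[t] = self.weights.get(t, 0.0) + self.learning_rate * error
```

Because each update touches only the words in the new example, the model can absorb new vocabulary and shifting usage as fresh labeled data arrives.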

Lastly, implement cross-cultural training by training models on diverse datasets that represent different cultures and contexts, improving the model's ability to recognize and interpret emotions accurately across various scenarios. This can be implemented by collecting and labelling data from various cultural backgrounds and ensuring that the model generalizes well across different emotional expressions and linguistic patterns [4].

The fusion of AI and ML technologies with speech-to-text conversion has opened new horizons in understanding and analyzing human emotions from spoken language. The evolution of speech-to-text engines, the role of NLP, and the utilization of deep learning models have collectively propelled this field forward.

In the real world, AI-driven emotion analysis is making a significant impact in areas such as mental health, customer service, and market research. By deciphering the emotional cues within spoken language, these technologies are enhancing decision-making processes, improving customer experiences, and contributing to overall human well-being.

As AI and ML continue to advance, the accuracy and applicability of emotion analysis from speech-to-text data will further expand, leading to even more profound insights into human emotions and their implications across various domains.