Utilize AI Algorithms to Generate Realistic and Diverse Test Data Sets for API Testing

Author(s): <p>Maheswara Reddy Basireddy</p>

Abstract

API testing is a critical aspect of software development, ensuring that application programming interfaces function correctly, reliably, and securely. Generating diverse and realistic test data sets is essential for thorough API testing, encompassing various data types, constraints, and edge cases. Leveraging AI algorithms presents a powerful approach to automate the creation of such data sets, improving efficiency and coverage while mimicking real-world scenarios.

This paper explores methodologies for utilizing AI algorithms in generating test data sets for API testing. It delves into techniques such as rule-based generation, machine learning models, natural language processing, and numerical data generation, each tailored to specific data types and constraints. Additionally, considerations for data augmentation, edge case testing, and scalability are discussed to enhance the effectiveness of the generated data sets.

Furthermore, the paper emphasizes the importance of a feedback loop to refine the generated data sets based on API responses, ensuring continuous improvement in realism and diversity. It also highlights the validation and quality assurance processes necessary to verify the suitability of the generated test data for comprehensive API testing.

Overall, this paper provides insights into how AI algorithms can be harnessed to generate realistic and diverse test data sets for API testing, ultimately enhancing the quality, reliability, and security of software applications.

Introduction

In modern software development, Application Programming Interface (API) testing is a crucial component ensuring the functionality, reliability, and security of software systems. APIs act as bridges between different software components, facilitating communication and data exchange. Testing these interfaces involves validating various input-output scenarios to ensure that the API behaves as expected under diverse conditions.

Central to effective API testing is the availability of realistic and diverse test data sets that encompass a wide range of data types, constraints, and edge cases. Generating such data manually can be time-consuming, error-prone, and may not cover all necessary scenarios. Leveraging Artificial Intelligence (AI) algorithms presents a promising solution to automate the generation of test data, improving efficiency and coverage while mimicking real- world scenarios.

This paper explores methodologies for utilizing AI algorithms in generating test data sets for API testing. It delves into techniques such as rule-based generation, machine learning models, natural language processing, and numerical data generation, each tailored to specific data types and constraints. Additionally, considerations for data augmentation, edge case testing, and scalability are discussed to enhance the effectiveness of the generated data sets.

Furthermore, the paper emphasizes the importance of a feedback loop to refine the generated data sets based on API responses, ensuring continuous improvement in realism and diversity. It also highlights the validation and quality assurance processes necessary to verify the suitability of the generated test data for comprehensive API testing.

Overall, this paper provides insights into how AI algorithms can be harnessed to generate realistic and diverse test data sets for API testing, ultimately enhancing the quality, reliability, and security of software applications.

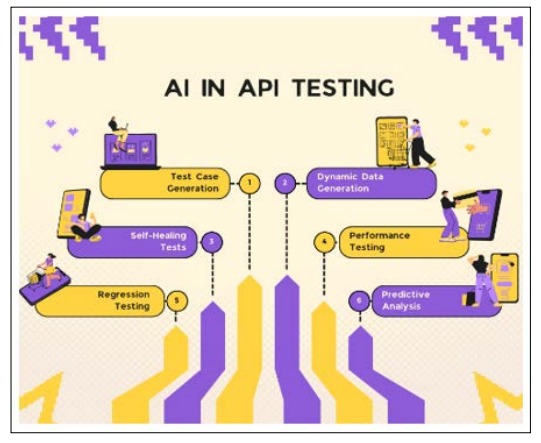

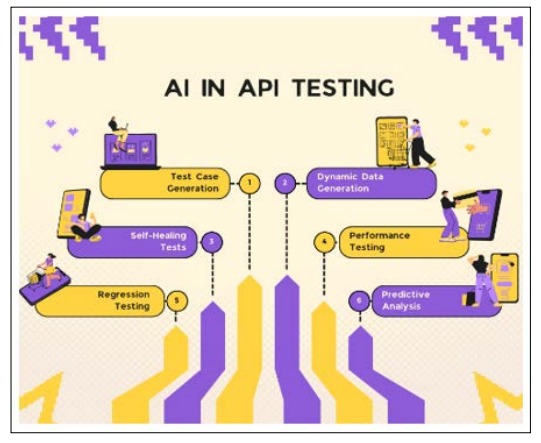

Importance of AI algorithms in API Testing

AI algorithms play a pivotal role in API testing due to their ability to automate the generation of realistic and diverse test data sets. Here are some key reasons highlighting the importance of AI algorithms in API testing:

- Efficiency: AI algorithms can significantly enhance the efficiency of API testing by automating the generation of test Instead of manually creating test cases, AI algorithms can swiftly generate large volumes of data, allowing testers to focus their efforts on other aspects of testing.

- Diverse Test Data: AI algorithms enable the creation of diverse test data sets that cover a wide range of scenarios, including edge cases and unusual This diversity helps uncover potential bugs and vulnerabilities in the API that might go unnoticed with limited or homogeneous test data.

- Realism: By leveraging machine learning models and natural language processing techniques, AI algorithms can generate test data that closely resembles real-world usage This realism is crucial for accurately assessing the performance, reliability, and usability of the API under various conditions.

- Scalability: APIs often need to handle large volumes of data and concurrent requests in real-world AI algorithms can scale to generate the necessary test data to simulate such scenarios, ensuring that the API can handle high loads and maintain performance under stress.

- Adaptability: AI algorithms can adapt to changes in the API's specifications or requirements by learning from feedback during testing. This adaptability allows for continuous refinement of the test data generation process, ensuring that it remains relevant and effective as the API evolves.

- Complexity Handling: APIs can be complex, with various input parameters, data types, and AI algorithms can handle this complexity by intelligently generating test data that meets the API's requirements while covering a wide range of scenarios.

- Quality Assurance: AI-driven test data generation can improve the quality assurance process by providing comprehensive test coverage and identifying potential issues early in the development This leads to higher-quality software products and reduces the risk of defects reaching production environments.

- Timeand Cost Savings: By automating test data generation, AI algorithms can save time and reduce costs associated with manual testing This allows organizations to allocate resources more efficiently and accelerate the overall software development lifecycle.

In summary, AI algorithms are indispensable tools in API testing, offering efficiency, realism, scalability, adaptability, and improved quality assurance. By harnessing the power of AI, organizations can enhance their API testing processes and deliver more reliable and robust software products to their users.

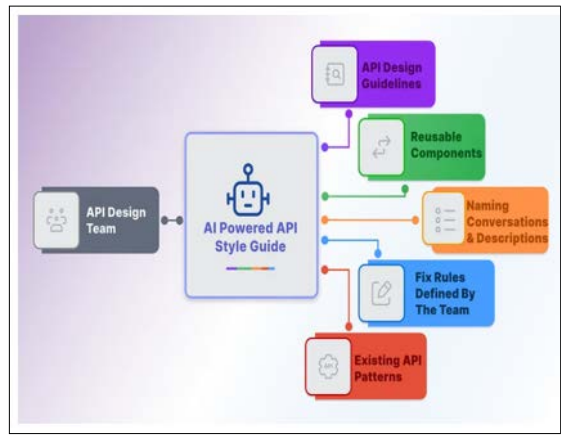

Implementation

The implementation process for utilizing AI algorithms to generate realistic and diverse test data sets for API testing involves several key steps. Here's a structured approach:

Requirement Analysis

- Understand the requirements of the API being tested, including data types, formats, constraints, and edge cases.

- Identify the specific areas where AI-driven test data generation can be beneficial.

AI Algorithm Selection

- Choose appropriate AI algorithms based on the identified requirements and data characteristics.

- Consider factors such as the complexity of the API, available data, and the desired level of realism.

Data Collection and Preprocessing

- Gather relevant data sets for training AI models, including existing API inputs and outputs, if available.

- Preprocess the data to clean, normalize, and format it for training the AI algorithms.

Model Training

Train machine learning models using the collected data sets. This may involve:

- Supervised learning: Training models to generate data that closely resembles real-world inputs and outputs.

- Unsupervised learning: Identifying patterns in the data to generate new test data without labeled examples.

- Generativemodels: Training models like GANs or VAEs to generate synthetic data that mimics real data distributions.

Rule-Based Generation

- Develop rules and patterns for generating test data based on the identified requirements and constraints.

- Implement rule-based algorithms to generate data that adheres to these predefined patterns.

Natural Language Processing (NLP)

- Utilize NLP techniques to generate diverse textual data for API inputs.

- Train language models or utilize pre-trained models to generate realistic text inputs based on the context and requirements.

Numerical Data Generation

- Implement algorithms to generate numerical test data within specified ranges or distributions.

- Consider techniques such as random sampling, probability distributions, or regression models.

Data Augmentation

- Apply data augmentation techniques to increase the diversity of generated test data.

- Techniques may include adding noise, perturbing data points, or introducing variations while preserving semantics.

Validation and Quality Assurance

- Validate the generated test data sets against expected outcomes and quality criteria.

- Test the API using the generated data to ensure that it behaves as expected under various scenarios.

Feedback Loop

- Incorporate a feedback loop to refine the AI algorithms based on testing results.

- Continuously evaluate and improve the generated test data sets based on API responses and real-world usage patterns.

Integration with Testing Frameworks

- Integrate the generated test data sets with existing testing frameworks and tools.

- Automate the execution of test cases using the generated data to streamline the testing process.

Documentation and Reporting

- Document the implemented AI algorithms, including details of the training process and data sources.

- Generate comprehensive test reports detailing the test data generated, API responses, and any identified issues or defects.

By following these steps, organizations can effectively implement AI-driven test data generation for API testing, leading to improved efficiency, coverage, and quality of their software testing efforts.

Use Cases

Here are some specific use cases where AI algorithms can be utilized to generate test data for API testing:

User Authentication API Testing

- Use AI algorithms to generate diverse user credentials (e.g., usernames, passwords) with varying lengths, formats, and

- Test the authentication API with realistic user data, including valid and invalid credentials, to verify login and access control

Image Processing API Testing

- Generate diverse image data sets with different resolutions, formats, colors, and content.

- Test image processing APIs by providing input images with various characteristics, such as different aspect ratios, orientations, and compression levels.

Text Analysis API Testing

- Utilize AI-driven natural language processing (NLP) algorithms to generate diverse text data for testing text analysis

- Generate text inputs with different languages, dialects, styles, and sentiments to test functionalities like text classification, sentiment analysis, and language detection.

Weather Forecasting API Testing

- Generate diverse weather data sets with realistic parameters such as temperature, humidity, wind speed, and

- Test weather forecasting APIs by providing input data covering different geographic locations, weather conditions, and time intervals.

Financial Transaction API Testing

- Use AI algorithms to generate diverse financial transaction data, including transaction amounts, dates, currencies, and transaction types (e.g., deposits, withdrawals, transfers).

- Test financial transaction APIs by simulating various scenarios, such as recurring payments, currency conversions, and fraud detection.

Location-Based Services API Testing

- Generate diverse geospatial data sets with realistic coordinates, addresses, landmarks, and routes.

- Test location-based services APIs by providing input data covering different geographic regions, points of interest, and travel scenarios.

Machine Learning Model Serving API Testing

- Generate diverse input data sets for testing machine learning model serving APIs.

- Test model inference APIs with realistic input data covering various use cases and edge cases encountered in real-world

Social Media Integration API Testing

- Generate diverse social media data, including user profiles, posts, comments, likes, and shares.

- Test social media integration APIs by simulating interactions with social media platforms and validating functionalities such as posting, sharing, and retrieving content.

These use cases illustrate how AI algorithms can be applied to generate diverse and realistic test data sets tailored to specific API functionalities, enabling comprehensive testing and validation of API behavior under various conditions and scenarios.

Limitations

While AI algorithms offer significant benefits in API testing, they also come with certain limitations that need to be considered:

- Data Quality: The quality of the generated test data heavily relies on the quality of the training data and the effectiveness of the AI models. If the training data is biased, incomplete, or of poor quality, it can lead to inaccuracies in the generated test data.

- Model Accuracy: The accuracy of AI models used for test data generation can vary depending on factors such as the complexity of the API, the diversity of the data, and the effectiveness of the training process. Inaccurate models may produce unrealistic or irrelevant test data, leading to ineffective testing.

- Overfittingand Generalization: AI models may suffer from overfitting, where they perform well on the training data but fail to generalize to unseen This can result in test data that does not adequately represent real-world scenarios or edge cases.

- Scalability: Generating large volumes of test data using AI algorithms can be computationally expensive and time- consuming, particularly for complex APIs or datasets. Scalability challenges may arise when scaling AI-driven test data generation to handle the requirements of large-scale testing environments.

- Interpretability: AI-driven test data generation techniques, such as deep learning models, are often complex and opaque, making it challenging to interpret how the models generate test data. Lack of interpretability may hinder trust and confidence in the generated test data.

- Dependency on Training Data: The performance of AI algorithms for test data generation is heavily dependent on the availability and quality of training In domains where labeled training data is scarce or expensive to obtain, it may be challenging to train accurate AI models.

- Ethical Considerations: AI algorithms may inadvertently perpetuate biases present in the training data, leading to biased or discriminatory test data It is essential to address ethical considerations and mitigate bias in AI-driven test data generation to ensure fairness and inclusivity.

- Human Oversight: Despite advancements in AI, human oversight and intervention are still necessary to validate the generated test data and ensure its suitability for testing purposes. Automated test data generation should be complemented with manual inspection and validation to mitigate risks associated with inaccuracies or biases.

While AI algorithms offer promising opportunities for enhancing API testing, it's crucial to acknowledge these limitations and employ strategies to address them effectively. By carefully considering the strengths and weaknesses of AI-driven approaches, organizations can maximize the benefits of AI in API testing while mitigating potential risks.

Conclusion

In conclusion, the integration of AI algorithms into API testing represents a significant advancement in software testing practices. By leveraging AI-driven techniques for test data generation, organizations can enhance the efficiency, effectiveness, and realism of their API testing efforts.

Through the exploration of diverse use cases, it becomes evident that AI algorithms can generate realistic and diverse test data sets tailored to specific API functionalities and industries. Whether it's simulating financial transactions, generating geospatial data, or analyzing textual inputs, AI algorithms offer the capability to create test scenarios that closely mimic real-world usage patterns.

Moreover, AI-driven test data generation contributes to scalability, adaptability, and quality assurance in API testing. With the ability to automate test data generation and adapt to evolving API specifications, organizations can achieve comprehensive test coverage and identify potential issues early in the development lifecycle.

However, it's important to recognize that the successful implementation of AI algorithms in API testing requires careful consideration of factors such as data quality, model accuracy, and validation processes. Continuous refinement and validation of AI-driven test data generation techniques are essential to ensure the reliability and effectiveness of the testing process.

In summary, the incorporation of AI algorithms into API testing holds great promise for improving the quality, reliability, and security of software applications. By embracing AI-driven approaches to test data generation, organizations can streamline their testing efforts, accelerate their software development lifecycle, and deliver better products to their users [1-17].

References

- Yoo S, Harman M, Jia Y (2012) Exploring the limitations of mutation testing: A comprehensive experimental study. IEEE Transactions on Software Engineering 38: 973-987.

- Ammann P, Offutt J (2008) Introduction to software Cambridge University Press https://ebooks.allfree-stuff.com/eBooks_down/Software%20Testing/Introduction%20to%20Software%20Testing.pdf.

- Briand LC, Labiche Y, Shousha M (2015) A systematic and comprehensive investigation of methods to build and evaluate fault prediction models. Journal of Systems and Software 101: 12-32.

- Gotlieb A, Maalej W (2016) Approaches, challenges, and practices in software effort estimation. IEEE Transactions on Software Engineering 42: 1064-1083.

- Harman M, Jia Y (2011) Generating combinatorial test data from models using metaheuristic IEEE Transactions on Software Engineering 37: 193-204.

- Harman M, Tratt L (2007) Pareto Optimal Search Based Refactoring at the Design Level. In Proceedings of the 9th annual Conference on Genetic and Evolutionary Computation (GECCO ’07) 1106-1113.

- Veerappa VN, Harman M (2014) Search-based software engineering: Techniques, taxonomy, tutorial. Journal of Software: Evolution and Process 26: 705-740.

- Ma Y, Miao H, Harman M (2012) Who killed my mutation? Software Testing, Verification and Reliability 22: 243-258.

- Meszaros G (2007) xUnit Test Patterns: Refactoring Test Code. Addison-Wesley https://www.amazon.in/xUnit-Test- Patterns-Refactoring-Signature/dp/0131495054.

- Marick B (1995) The Craft of Software Testing: Subsystem Testing Including Object-Based and Object-Oriented Prentice Hall https://www.amazon.in/Craft-Software-Testing- Object-Based-Object-Oriented/dp/0131774115.

- Fowler M (2007) Mocks Aren't Stubs. martinfowler.com https://www.martinfowler.com/articles/mocksArentStubs.

- Beck K (2003) Test-Driven Development: By Example. Addison-Wesley https://www.amazon.in/Test-Driven-Development-Example-Signature/dp/0321146530 .

- Hunt A, Thomas D (1999) The Pragmatic Programmer: Your Journey to Addison-Wesley https://www.amazon.com/Pragmatic-Programmer-journey-mastery-Anniversary/dp/0135957052.

- Martin RC (2008) Clean Code: A Handbook of Agile Software Prentice Hall.

- ISTQB® Certified Tester Foundation Level International Software Testing Qualifications Board.

- Beizer P (1990) Software Testing International Thomson Computer Press.

- API Testing: The Complete Guide. Postman https://www.postman.com/api-testing-guide/ .

View PDF