Author(s): Premkumar Ganesan* and Geetesh Sanodia

The integration of Artificial Intelligence (AI) with DevOps offers a transformative approach to managing cloud-native infrastructures, addressing the increasing complexity and demands of modern software environments. This paper explores the deep technical aspects of how AI can enhance DevOps practices to create intelligent infrastructure management systems that optimize resource allocation and improve performance. Key AI techniques, including machine learning (ML), deep learning (DL), reinforcement learning (RL), and AI-driven automation, are examined for their role in enhancing infrastructure scalability, reliability, and efficiency. We also provide insights into how these techniques are applied to specific components of DevOps, such as continuous integration and delivery (CI/CD), infrastructure-as-code (IaC), and cloud management. By leveraging AI-driven insights, predictive analytics, and real-time automation, this framework responds to the challenges posed by dynamic cloud-native applications, ensuring efficient, resilient, and scalable cloud environments through automated decision-making and real-time data analysis.

The rise of cloud-native applications, characterized by microservices architecture, containerization, and dynamic scalability, has fundamentally changed how software is developed and deployed. These applications enable rapid development, continuous integration, and frequent updates, which are essential for keeping pace with the demands of the digital marketplace. However, managing the infrastructure that supports these applications has become increasingly complex, especially as cloud-native environments scale dynamically to meet varying demand. Traditional infrastructure management approaches—such as static provisioning and manual configuration—are no longer sufficient for managing modern, scalable systems that require constant adaptation to fluctuating workloads.

AI’s ability to analyze vast datasets, identify patterns, and make predictions in real time makes it well suited to complement and extend DevOps practices. AI techniques like machine learning (ML), deep learning (DL), and reinforcement learning (RL) allow organizations to provision and scale resources automatically, optimize performance, and maintain system reliability with little or no human intervention. This paper explores the deep technical aspects of how AI, combined with DevOps, can create an intelligent infrastructure management framework that adapts dynamically to cloud-native environments, optimizes resource utilization, and automates decision-making processes.

The application of AI in DevOps relies on various AI technologies and methodologies that enhance system intelligence, resilience, and automation. Below, we explore these AI technologies in greater technical depth and their specific applications in DevOps.

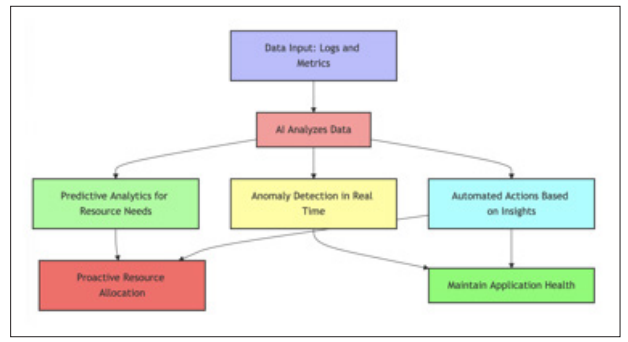

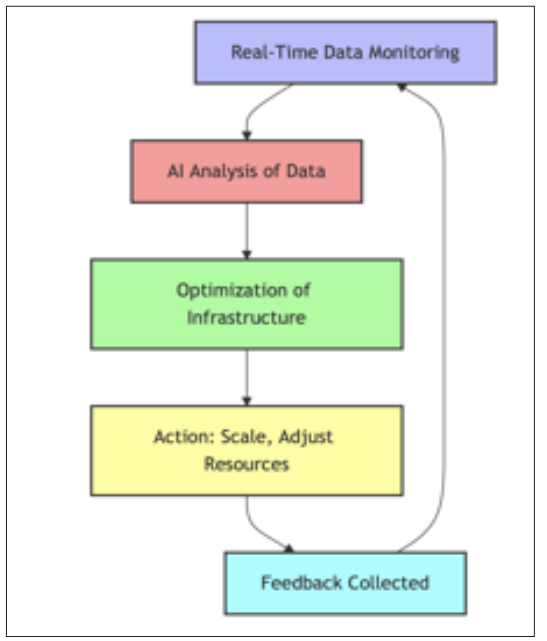

Figure 1: AI-Driven DevOps Process

Machine learning (ML) is the backbone of AI in DevOps, allowing systems to analyze historical data, learn from it, and make informed decisions based on that learning. In the context of infrastructure management, ML can be used for predictive analytics, anomaly detection, and optimizing CI/CD pipelines.

Supervised learning models in DevOps are trained on labeled datasets in which the input-output relationships are known. Once trained, these models can estimate future infrastructure requirements, for instance by predicting scaling needs, identifying workload patterns, and forecasting potential bottlenecks. For example, historical data about CPU and memory usage can be used to predict future demand spikes, allowing DevOps teams to preemptively allocate resources [1,2]. Common algorithms include decision trees, support vector machines (SVMs), and random forests. In more advanced implementations, neural networks are applied to handle complex relationships between different metrics (e.g., CPU usage, network bandwidth, and user requests).
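As a minimal sketch of the supervised approach, the following fits an ordinary least-squares line to labeled pairs of request rate and CPU cores used, then sizes capacity for an expected spike. It is an illustration under stated assumptions, not the method of the cited works: the sample history, the helper names (`fit_line`, `predict`, `cores_to_provision`), and the 20% headroom are all hypothetical, and a simple linear model stands in for the decision trees, SVMs, or random forests mentioned above.

```python
from math import ceil


def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def predict(model, x):
    """Apply the fitted line to a new input."""
    intercept, slope = model
    return intercept + slope * x


def cores_to_provision(model, expected_rps, headroom=1.2):
    """Round the predicted demand (plus headroom) up to whole cores."""
    return ceil(predict(model, expected_rps) * headroom)


# Hypothetical labeled history: (requests per second, CPU cores used).
history = [(100, 2.1), (200, 4.0), (300, 6.2), (400, 7.9), (500, 10.1)]
model = fit_line([rps for rps, _ in history], [cores for _, cores in history])

# Pre-allocate capacity for a forecast spike of 800 requests per second.
print(cores_to_provision(model, 800))
```

In practice the input features would include several metrics at once (memory, network bandwidth, time of day), which is where tree ensembles or neural networks become more appropriate than a single line.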

Unsupervised Learning for Anomaly Detection

Unsupervised learning models do not rely on labeled data; instead, they learn to identify patterns and deviations (anomalies) in unlabeled datasets. In a DevOps environment, unsupervised learning can monitor system logs, network traffic, and application performance to detect unusual behavior that could signal security breaches, performance degradation, or system failures [3]. Clustering algorithms such as k-means and DBSCAN are often used to group normal behavior, and observations that deviate from these clusters are flagged as anomalies.
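The clustering idea can be sketched with a small, dependency-free k-means: cluster observations of normal behavior, then flag any new observation whose distance to the nearest centroid exceeds a threshold. The data points (CPU %, memory %), the evenly spaced centroid initialization, and the "three times the largest training distance" threshold are illustrative assumptions; DBSCAN is not shown.

```python
from math import dist


def kmeans(points, k, iterations=20):
    """Plain k-means with deterministic, evenly spaced initial centroids."""
    centroids = [points[i * len(points) // k] for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Recompute each centroid as the mean of its cluster; keep the old
        # centroid if a cluster ended up empty.
        centroids = [
            tuple(sum(c) / len(cluster) for c in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids


def nearest_distance(point, centroids):
    """Distance from a point to its closest centroid."""
    return min(dist(point, c) for c in centroids)


def fit_threshold(points, centroids, factor=3.0):
    """Assumed rule: anything farther than factor x the worst training distance."""
    return factor * max(nearest_distance(p, centroids) for p in points)


def is_anomaly(point, centroids, threshold):
    return nearest_distance(point, centroids) > threshold


# Hypothetical observations of normal behavior: (CPU %, memory %).
normal = [(20, 30), (22, 28), (19, 33), (21, 31),
          (70, 80), (72, 78), (69, 82), (71, 79)]
centroids = kmeans(normal, k=2)
threshold = fit_threshold(normal, centroids)

print(is_anomaly((21, 29), centroids, threshold))   # close to the idle cluster
print(is_anomaly((95, 20), centroids, threshold))   # high CPU with low memory
```

Real metrics usually need scaling to comparable ranges before Euclidean distance is meaningful, and the threshold would normally be tuned against known incidents.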

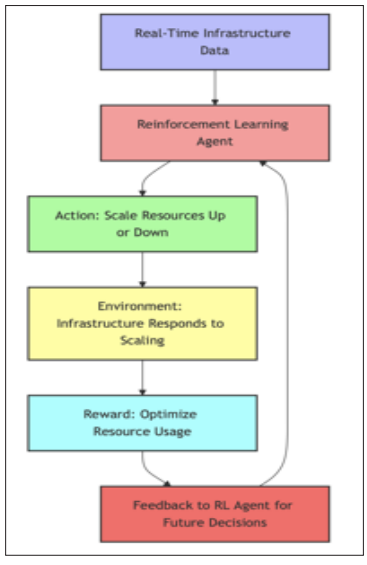

Reinforcement Learning for Dynamic Resource Allocation

Reinforcement learning (RL) is an AI technique in which an agent learns to make decisions by interacting with its environment and receiving rewards or penalties based on the outcomes of its actions. In DevOps, RL can be used for dynamic resource allocation and scaling. The agent observes real-time data, such as CPU and memory usage, and scales resources (e.g., server instances) up or down in response. Feedback from the environment reaches the agent as a reward signal, so it refines its strategy through trial and error and adapts to fluctuating workloads over time [4]. Because the reward balances performance against cost, the learned policy avoids both under- and over-provisioning, which makes RL a key tool for efficient infrastructure management in AI-driven DevOps.

Figure 2: Reinforcement Learning for Dynamic Resource Allocation
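The observe, act, and reward loop can be illustrated with tabular Q-learning on a toy autoscaling problem. Everything in the environment is an assumption made for the sketch: three discrete load levels, one to three instances as actions, a cost of 1 per instance, a penalty of 10 for under-provisioning, and load that changes at random between steps. A production agent would observe real metrics and a far larger state space.

```python
import random

LOADS = ["low", "medium", "high"]          # states
INSTANCE_OPTIONS = [1, 2, 3]               # actions: number of instances to run
REQUIRED = {"low": 1, "medium": 2, "high": 3}


def reward(load, instances):
    """Negative cost per instance, plus a penalty for under-provisioning."""
    penalty = 10 if instances < REQUIRED[load] else 0
    return -instances - penalty


def train(steps=20000, alpha=0.05, gamma=0.5, epsilon=0.2, seed=0):
    """Epsilon-greedy tabular Q-learning on the toy environment."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in LOADS for a in INSTANCE_OPTIONS}
    state = rng.choice(LOADS)
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(INSTANCE_OPTIONS)                          # explore
        else:
            action = max(INSTANCE_OPTIONS, key=lambda a: q[(state, a)])   # exploit
        r = reward(state, action)
        next_state = rng.choice(LOADS)  # toy workload: load changes at random
        best_next = max(q[(next_state, a)] for a in INSTANCE_OPTIONS)
        # Standard Q-learning update toward reward plus discounted future value.
        q[(state, action)] += alpha * (r + gamma * best_next - q[(state, action)])
        state = next_state
    return q


def policy(q):
    """Greedy policy: the best-valued instance count for each load level."""
    return {s: max(INSTANCE_OPTIONS, key=lambda a: q[(s, a)]) for s in LOADS}


learned = policy(train())
print(learned)
```

The penalty term is what encodes the performance objective and the per-instance cost encodes the budget, so changing their ratio shifts how aggressively the learned policy provisions.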

Deep learning (DL), a subset of machine learning, involves neural networks with multiple layers that can model complex patterns in data. DL is particularly useful in infrastructure management for tasks such as advanced anomaly detection, predictive maintenance, and optimization of CI/CD pipelines.

DL models such as autoencoders or convolutional neural networks (CNNs) can analyze complex data streams in real time to detect anomalies [5]. For example, DL models can monitor logs, network traffic, and sensor data to identify subtle patterns that may not be evident through traditional ML methods. Autoencoders, which compress and reconstruct data, are commonly used for anomaly detection because they learn to reconstruct normal patterns, making anomalies easier to detect when reconstruction errors are high.
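The reconstruction-error criterion can be written compactly. The notation is introduced here for illustration and does not come from the cited work: $f_\theta$ denotes the encoder, $g_\phi$ the decoder, $x$ an input vector of metrics, and $\tau$ a threshold (for example, a high percentile of the errors observed on normal data).

```latex
\[
e(x) = \lVert x - g_\phi(f_\theta(x)) \rVert_2^2,
\qquad
x \text{ is flagged as anomalous if } e(x) > \tau .
\]
```

Because the autoencoder is trained only on normal behavior, $e(x)$ stays small for familiar patterns and grows for inputs it has not learned to reconstruct.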

Recurrent Neural Networks (RNNs) for Time-Series Forecasting

In cloud-native environments, time-series data (e.g., CPU usage over time) is critical for understanding infrastructure needs. RNNs, especially long short-term memory (LSTM) networks, are well-suited for time-series forecasting in DevOps. These models can predict future resource requirements based on historical usage patterns, allowing for proactive resource provisioning [6]. For example, RNNs can predict that an application will experience high traffic between specific time intervals based on past behavior, enabling the system to allocate resources in advance.
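The provisioning step that follows a forecast can be sketched without a neural network. The example below deliberately swaps the LSTM for a much simpler stand-in, an hour-of-day average, to show how a forecast of traffic per time interval turns into a replica schedule. The traffic history, the helper names, and the capacity of 50 requests per second per replica are hypothetical.

```python
from collections import defaultdict
from math import ceil


def hourly_profile(samples):
    """Average observed requests/sec for each hour of day (0-23)."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for hour, rps in samples:
        totals[hour] += rps
        counts[hour] += 1
    return {hour: totals[hour] / counts[hour] for hour in totals}


def replicas_for_hour(profile, hour, rps_per_replica=50, min_replicas=1):
    """Replicas needed to serve the expected load for that hour."""
    expected = profile.get(hour, 0.0)
    return max(min_replicas, ceil(expected / rps_per_replica))


# Hypothetical history: (hour of day, requests/sec) observed over three days.
history = [(3, 20), (3, 30), (3, 25),
           (12, 410), (12, 390), (12, 400),
           (20, 180), (20, 220), (20, 200)]

profile = hourly_profile(history)
schedule = {hour: replicas_for_hour(profile, hour) for hour in sorted(profile)}
print(schedule)
```

An LSTM would replace `hourly_profile` with a learned model that can also capture trends and irregular patterns; the scheduling logic downstream of the forecast would stay much the same.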

Reinforcement learning (RL) is one of the most powerful AI techniques for managing dynamic systems in real time. Unlike supervised ML, which is typically trained on a fixed historical dataset, RL agents learn continuously from interactions with their environment. In a DevOps context, RL is particularly applicable to two tasks: autoscaling infrastructure resources and optimizing CI/CD pipelines.

RL agents can learn policies to autonomously scale infrastructure resources in response to fluctuating demands. Over time, RL agents optimize scaling decisions to balance resource efficiency with performance objectives. For example, an RL agent managing a fleet of microservices can determine the optimal number of instances to deploy at any given time based on real-time metrics such as latency, throughput, and user demand [7].

CI/CD pipelines involve a complex sequence of tasks—building, testing, and deploying code. RL can be applied to optimize these pipelines by learning to sequence tasks in a way that minimizes overall build time and resource consumption [8]. The RL agent might explore different strategies, such as parallelizing certain build processes or caching dependencies, and refine its approach over time to reduce the time to deploy.
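A simplified version of this exploration can be sketched as an epsilon-greedy multi-armed bandit, which is the single-state special case of RL: the agent repeatedly picks a build strategy, observes the build time, and gradually favors the fastest one. The three strategy names, their simulated mean build times, and the noise level are hypothetical, and `simulate_build` is a stand-in for running a real pipeline.

```python
import random

# Hypothetical strategies and their simulated mean build times in seconds.
MEAN_BUILD_SECONDS = {"sequential": 300.0, "parallel": 200.0, "parallel+cache": 120.0}


def simulate_build(strategy, rng):
    """Stand-in for running a real pipeline: mean time plus Gaussian noise."""
    return rng.gauss(MEAN_BUILD_SECONDS[strategy], 20.0)


def learn_strategy(rounds=500, epsilon=0.1, seed=0):
    """Epsilon-greedy bandit that minimizes the average observed build time."""
    rng = random.Random(seed)
    strategies = list(MEAN_BUILD_SECONDS)
    counts = {s: 0 for s in strategies}
    avg_time = {s: 0.0 for s in strategies}
    for t in range(rounds):
        if t < len(strategies):
            choice = strategies[t]                       # try each strategy once
        elif rng.random() < epsilon:
            choice = rng.choice(strategies)              # explore
        else:
            choice = min(strategies, key=avg_time.get)   # exploit fastest so far
        elapsed = simulate_build(choice, rng)
        counts[choice] += 1
        # Incremental update of the running mean for the chosen strategy.
        avg_time[choice] += (elapsed - avg_time[choice]) / counts[choice]
    return min(strategies, key=avg_time.get), counts


best, counts = learn_strategy()
print(best, counts)
```

A full RL formulation would add state (for example, which files changed or which caches are warm) so that the preferred strategy can differ from one commit to the next.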

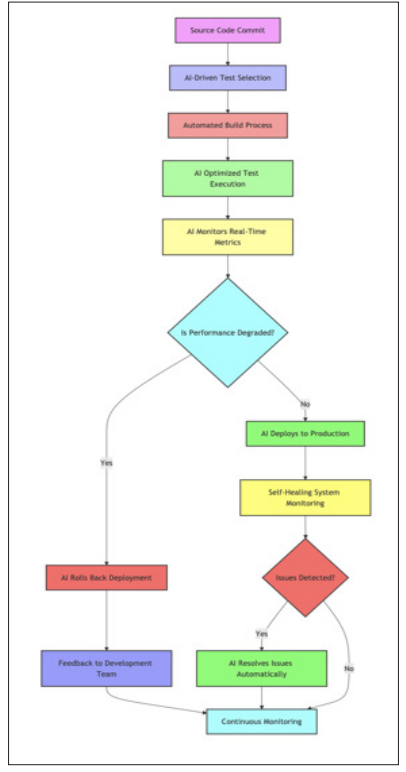

AI-Enhanced Continuous Integration and Delivery (CI/CD)

Continuous integration and delivery (CI/CD) pipelines are critical components of modern DevOps practices. Integrating AI into CI/CD pipelines adds automation and intelligence across the software delivery process, changing how applications are built, tested, and deployed. The process begins with a Source Code Commit, which triggers AI-driven automation throughout the pipeline. In the AI-Driven Test Selection phase, AI analyzes the changes and selects the relevant test cases, reducing overall testing time while maintaining quality assurance. Once the Automated Build Process is complete, AI-Optimized Test Execution ensures that critical components of the application are thoroughly tested. After deployment, AI continuously monitors real-time performance metrics to check for degradation. If performance issues are detected, AI can roll back the deployment and provide feedback to the development team for future iterations. After a successful deployment, AI handles Self-Healing System Monitoring, in which issues are detected and automatically resolved. Continuous Monitoring closes the AI-driven feedback loop, allowing the system to keep improving. This automated process reduces downtime, enhances performance, and accelerates delivery cycles, making CI/CD pipelines more efficient and resilient.

Figure 3: AI-Powered CI/CD Optimization

One of the most time-consuming parts of the CI/CD pipeline is running automated tests. AI-driven test execution frameworks can intelligently select and prioritize tests based on code changes, historical test results, and the impact of those changes on the system. For example, AI can predict which parts of the application are most likely to break based on recent commits, allowing the system to prioritize tests that target those areas, reducing testing time while maintaining high test coverage [9].
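The selection and prioritization step can be sketched as a scoring function over candidate tests. The version below is a hand-written heuristic standing in for a learned model: it scores each test by how many changed files it covers, boosted by its recent failure rate. The `CandidateTest` type, the file names, and the scoring formula are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CandidateTest:
    name: str
    covered_files: frozenset
    recent_failure_rate: float  # fraction of recent runs that failed, 0.0 to 1.0


def priority(test, changed_files):
    """Score = overlap with the change, boosted by historical failures."""
    overlap = len(test.covered_files & changed_files)
    return overlap * (1.0 + test.recent_failure_rate)


def select_tests(tests, changed_files, limit=None):
    """Return names of tests touching the change, highest priority first."""
    changed = frozenset(changed_files)
    scored = [(priority(t, changed), t) for t in tests]
    relevant = [(score, t) for score, t in scored if score > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [t.name for _, t in relevant][:limit]


# Hypothetical test suite with coverage and failure history.
tests = [
    CandidateTest("test_checkout", frozenset({"cart.py", "payment.py"}), 0.30),
    CandidateTest("test_login", frozenset({"auth.py"}), 0.05),
    CandidateTest("test_cart_totals", frozenset({"cart.py"}), 0.10),
    CandidateTest("test_search", frozenset({"search.py"}), 0.50),
]

selected = select_tests(tests, {"cart.py", "payment.py"})
print(selected)
```

A learned model would replace `priority` with a predicted failure probability for each test given the commit, while the ranking and cut-off logic would remain the same.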

In CI/CD pipelines, deployment optimization can be enhanced by AI-driven decision-making. For example, AI systems can analyze application performance metrics in real time and adjust the deployment strategy accordingly. If an application update is causing degraded performance, AI can roll back the update automatically, reallocate resources, or fine-tune parameters to ensure smooth deployment [10].
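The rollback decision can be sketched as a comparison between a baseline window of metrics and a window collected after the update. The `Window` type, the sample numbers, and both thresholds (a 25% latency increase and a 2% error rate) are hypothetical; a learned model could replace the fixed thresholds with limits derived from historical deployments.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Window:
    """Metrics collected over one observation window."""
    latencies_ms: list
    errors: int
    requests: int


def should_roll_back(baseline, candidate, max_latency_ratio=1.25, max_error_rate=0.02):
    """Roll back if latency regressed or the error rate is too high."""
    latency_ratio = mean(candidate.latencies_ms) / mean(baseline.latencies_ms)
    error_rate = candidate.errors / candidate.requests if candidate.requests else 0.0
    return latency_ratio > max_latency_ratio or error_rate > max_error_rate


# Hypothetical measurements before and after an update.
baseline = Window([100, 110, 90, 105, 95], errors=2, requests=1000)
healthy = Window([102, 108, 98, 110, 97], errors=3, requests=1000)
slow = Window([150, 170, 160, 140, 180], errors=3, requests=1000)
failing = Window([101, 99, 103, 100, 98], errors=50, requests=1000)

print(should_roll_back(baseline, healthy))
print(should_roll_back(baseline, slow))
print(should_roll_back(baseline, failing))
```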

DevOps emphasizes the importance of feedback loops for continuous improvement. AI can enhance these feedback loops by continuously monitoring metrics such as user engagement, system performance, and operational costs. AI models can then provide actionable insights, allowing DevOps teams to iterate on their processes faster and more effectively [11].

Infrastructure-as-code (IaC) is a fundamental principle of modern DevOps practices, allowing teams to define, manage, and provision infrastructure using code. AI enhances IaC by automating infrastructure provisioning, optimizing resource configurations, and ensuring that infrastructure is both cost-effective and scalable.

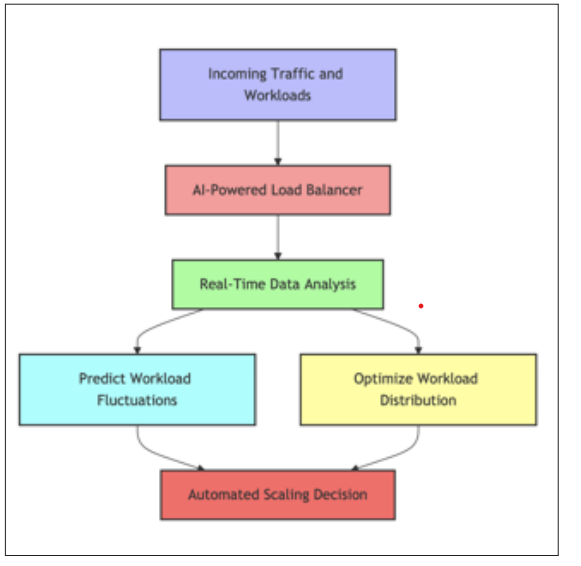

Figure 4: AI-Powered Load Balancing and Automated Scaling

AI can automatically generate IaC templates based on system requirements, resource usage, and predefined performance objectives. For instance, machine learning models can predict the optimal infrastructure configuration based on historical performance metrics and generate IaC scripts to provision those resources dynamically. This reduces the need for manual configuration and helps teams deploy more reliable infrastructure faster.
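Template generation can be illustrated by turning a predicted load into a declarative resource definition. The sketch below emits a Kubernetes Deployment manifest as JSON, chosen only as a familiar IaC-style target since the paper does not name a specific tool. The service name, image URL, per-replica capacity, and headroom factor are hypothetical, and `predicted_rps` stands in for the output of a forecasting model.

```python
import json
from math import ceil


def deployment_manifest(name, image, predicted_rps, rps_per_replica=100, headroom=1.3):
    """Build a Deployment manifest sized for the predicted request rate."""
    replicas = max(1, ceil(predicted_rps * headroom / rps_per_replica))
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": {"app": name}},
            "template": {
                "metadata": {"labels": {"app": name}},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Hypothetical service and forecast.
manifest = deployment_manifest(
    "checkout", "registry.example.com/checkout:1.0", predicted_rps=450
)
print(json.dumps(manifest, indent=2))
```

Generating the definition from code keeps the sizing decision reviewable: the manifest can be committed, diffed, and applied through the same pipeline as hand-written infrastructure code.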

In DevOps environments, enforcing infrastructure policies (e.g., security, compliance, cost limits) can be challenging as systems scale. AI-powered policy engines can monitor IaC deployments in real time to ensure compliance with organizational policies. For example, AI can detect when a provisioned resource exceeds budgeted cost limits or violates security policies and automatically take corrective actions, such as shutting down unauthorized instances or reconfiguring network settings. Additionally, AI can track compliance with evolving regulations, adjust configurations to meet audit requirements, and predict potential non-compliance risks based on historical data. This level of automation ensures that infrastructure management remains secure and cost-efficient even as the environment scales dynamically, reducing manual intervention and minimizing human errors.
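The checks such an engine performs can be sketched as a function over a list of provisioned resources. This is the rule-based core only, with no learning involved, and the resource schema (`id`, `monthly_cost_usd`, `ingress`, `tags`), the three rules, and the budget figure are all hypothetical. An AI-driven engine would add predicted risk on top of rules like these.

```python
def check_policies(resources, monthly_budget_usd):
    """Return a list of (resource_id, violation) tuples."""
    violations = []
    total_cost = sum(r["monthly_cost_usd"] for r in resources)
    if total_cost > monthly_budget_usd:
        violations.append(("*", f"total cost {total_cost} exceeds budget {monthly_budget_usd}"))
    for r in resources:
        # Security rule: SSH must not be reachable from any address.
        for rule in r.get("ingress", []):
            if rule["cidr"] == "0.0.0.0/0" and rule["port"] == 22:
                violations.append((r["id"], "SSH open to the internet"))
        # Governance rule: every resource needs an accountable owner.
        if not r.get("tags", {}).get("owner"):
            violations.append((r["id"], "missing owner tag"))
    return violations


# Hypothetical inventory of provisioned resources.
resources = [
    {"id": "web-1", "monthly_cost_usd": 300,
     "ingress": [{"cidr": "0.0.0.0/0", "port": 443}], "tags": {"owner": "web-team"}},
    {"id": "db-1", "monthly_cost_usd": 900,
     "ingress": [{"cidr": "0.0.0.0/0", "port": 22}], "tags": {"owner": "data-team"}},
    {"id": "tmp-7", "monthly_cost_usd": 150, "ingress": [], "tags": {}},
]

for resource_id, violation in check_policies(resources, monthly_budget_usd=1200):
    print(resource_id, violation)
```

Each violation could then be mapped to a corrective action, such as closing the port or stopping an untagged instance, with destructive actions typically gated behind human approval.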

While AI significantly enhances DevOps capabilities, its integration comes with several challenges and limitations:

The success of AI in DevOps relies heavily on the availability of high-quality data. Inaccurate, incomplete, or biased data can lead to poor model performance and incorrect predictions. Ensuring robust data pipelines and continuously updating AI models with accurate data is essential for maintaining AI-driven infrastructure management.

AI introduces new security vulnerabilities, such as adversarial attacks, where malicious actors manipulate input data to deceive AI systems. For example, attackers could alter data logs to trigger incorrect scaling decisions, potentially leading to service disruptions or data breaches. Securing AI systems, especially those that manage critical infrastructure, is a top priority.
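One simple, partial mitigation for manipulated inputs is to base scaling decisions on a robust statistic, so that a few tampered readings cannot move the result. The sketch below compares a mean-based and a median-based decision on the same window. The readings, the 50% threshold, and the function name are hypothetical, and this does not replace authenticating and integrity-checking the metrics pipeline itself.

```python
from statistics import mean, median


def needs_scale_up(cpu_samples, threshold=50.0, robust=True):
    """Decide on scale-up from a window of CPU readings (percent)."""
    aggregate = median(cpu_samples) if robust else mean(cpu_samples)
    return aggregate > threshold


# Hypothetical window in which the last two readings were tampered with.
window = [40.0, 42.0, 41.0, 39.0, 100.0, 100.0]

print(needs_scale_up(window, robust=False))  # decision based on the mean
print(needs_scale_up(window, robust=True))   # decision based on the median
```

The median tolerates tampering with fewer than half of the samples in a window; an attacker who controls more than that still wins, which is why input validation and access control remain necessary.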

Deep learning and reinforcement learning models, while powerful, are often complex and difficult to interpret. This "black box" nature can make it challenging for DevOps teams to understand why certain decisions are made, particularly in high-stakes environments. Explainable AI (XAI) techniques are essential for improving transparency and building trust in AI-driven systems.

Figure 5: AI Feedback Loop in DevOps

The integration of AI into DevOps represents a significant step forward in the automation, scalability, and efficiency of modern cloud-native environments. AI technologies such as machine learning, deep learning, and reinforcement learning empower DevOps teams to make intelligent decisions in real time, optimize resource allocation, and streamline CI/CD pipelines. While challenges related to data quality, security, and AI model complexity remain, ongoing advancements in AI research and DevOps practices are expected to address these limitations, paving the way for more resilient, scalable, and autonomous infrastructure management systems. As organizations continue to adopt AI-driven DevOps frameworks, the future of infrastructure management will be defined by intelligent automation, real-time optimization, and seamless scalability, enabling businesses to innovate faster while maintaining high levels of reliability and cost-efficiency.