Author(s): Srujana Manigonda

The dynamic and fast-paced nature of financial markets necessitates real-time data processing and integration to support timely and accurate decision-making. This paper explores next-generation data integration pipelines tailored for real-time financial market analytics. These pipelines leverage modern data engineering technologies such as Apache Spark, cloud-based data lakes, and event-driven architectures to ingest, process, and analyze massive data streams from various financial data sources. The integration framework emphasizes low-latency data processing, scalable infrastructure, and robust data governance to ensure data accuracy and compliance. Key focus areas include data normalization, feature engineering, real-time analytics for trading decisions, and dashboard-driven reporting for market insights. The proposed system demonstrates how seamless data integration can empower financial institutions with predictive insights, enhanced risk management, and improved customer targeting, ultimately driving profitability and competitive advantage in the digital economy.

The financial market landscape has evolved dramatically with the rise of digital trading platforms, algorithmic trading, and data-driven decision-making. In this environment, the ability to process and analyze massive volumes of real-time data is essential for maintaining a competitive edge. Data integration pipelines serve as the backbone of this capability, enabling seamless data flow from diverse sources to analytics platforms.

Next-generation data integration pipelines address key challenges such as data heterogeneity, high data velocity, and stringent compliance requirements. They combine cloud-based data lakes, big data technologies like Apache Spark, and real-time streaming frameworks to ensure that financial institutions can process, transform, and analyze data with minimal latency. Advanced pipelines also integrate machine learning models and predictive analytics to derive actionable insights from market data, driving smarter investment strategies, improved risk management, and personalized customer experiences.

This paper explores the design, implementation, and impact of modern data integration pipelines tailored for real-time financial market analytics. It highlights technological advancements, best practices in data governance, and innovative solutions that enable financial institutions to thrive in a highly dynamic market environment.

Data integration pipelines have evolved significantly to support real-time financial market analytics, driven by advances in big data technologies, cloud computing, and artificial intelligence. Early research in data integration focused on Extract, Transform, Load (ETL) processes designed for batch processing, limiting responsiveness in real-time scenarios. Recent studies emphasize streaming data architectures that process information with minimal latency.

Key technological components enabling real-time integration include Apache Kafka for event streaming, Apache Spark for in-memory processing, and cloud services like AWS, Azure, and Google Cloud for scalable data storage and processing. These platforms support continuous data ingestion from diverse sources such as stock exchanges, financial APIs, and social media feeds.

Research also highlights the importance of data quality and governance. Real-time pipelines must ensure accuracy, consistency, and compliance with regulatory standards like GDPR and financial regulations. Techniques such as data deduplication, normalization, and real-time validation are widely discussed in the literature.
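The three techniques named above (deduplication, normalization, and real-time validation) can be illustrated with a minimal sketch. It is not taken from any cited system: the `Tick` record, its field names, the default currency, and the validation rule are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class Tick:
    event_id: str   # hypothetical unique identifier assigned by the feed
    symbol: str
    price: float
    currency: str


def normalize(raw: dict) -> Tick:
    """Coerce a raw record into one canonical shape."""
    return Tick(
        event_id=str(raw["event_id"]),
        symbol=str(raw["symbol"]).strip().upper(),
        price=float(raw["price"]),
        # Assumption: feeds that omit the currency quote in USD.
        currency=str(raw.get("currency", "USD")).upper(),
    )


def is_valid(tick: Tick) -> bool:
    """Reject records that cannot be correct: empty symbol or non-positive price."""
    return bool(tick.symbol) and tick.price > 0


def clean_stream(records: Iterable[dict]) -> Iterator[Tick]:
    """Yield normalized, valid ticks, dropping duplicates by event_id."""
    seen: set[str] = set()
    for raw in records:
        try:
            tick = normalize(raw)
        except (KeyError, ValueError, TypeError):
            continue  # malformed record: missing field or unparseable price
        if not is_valid(tick) or tick.event_id in seen:
            continue
        seen.add(tick.event_id)
        yield tick
```

Because `clean_stream` is a generator, each record is handled as it arrives rather than in a batch. The `seen` set grows without bound in this sketch; a long-running pipeline would likely need a time-bounded or size-bounded structure instead.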

Machine learning integration has emerged as a crucial factor. Studies show that predictive analytics and AI models embedded within pipelines can detect anomalies, forecast market trends, and enable algorithmic trading. The role of predictive modeling frameworks like TensorFlow and PyTorch in enhancing decision-making processes is frequently examined.

Additionally, visualization tools such as Tableau and Power BI facilitate actionable insights through interactive dashboards. They transform complex data streams into comprehensible analytics, aiding portfolio management and risk assessment. The literature also explores the trade-offs between on-premises and cloud-based deployments, emphasizing scalability and cost-effectiveness in cloud-based solutions.

Overall, the integration of cutting-edge technologies, adherence to data governance principles, and the ability to scale dynamically are key themes in the evolving field of data integration for financial market analytics.

A financial institution sought to enhance its data integration capabilities by migrating from a legacy platform to a new Feature Platform. The migration involved 300 key data features supporting various business functions such as digital marketing campaigns and machine learning (ML) models.

The primary goal was to modernize the data pipeline by transferring features to the new platform while maintaining high data quality, reducing processing time, and enabling better campaign targeting through real-time analytics. Related objectives were to enhance data processing capabilities, improve data accuracy, and reduce operational delays for the marketing and ML workloads that depend on these features.

The migration from the legacy platform to the Feature Platform followed a structured methodology centered around feature development, data integration, and process automation.

Identified 300 critical features used by the Data Science and Marketing teams. Defined business rules for each feature, including customer segmentation and campaign triggers.
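As an illustration of what a business rule for a feature might look like, the sketch below defines one segmentation feature and one campaign-trigger feature as plain functions. The input attributes, thresholds, segment names, and the `FEATURES` registry are hypothetical; the paper does not disclose the actual rules.

```python
from dataclasses import dataclass


@dataclass
class Customer:
    customer_id: str
    balance: float          # hypothetical input attribute
    days_since_login: int   # hypothetical input attribute


def segment(c: Customer) -> str:
    """Segmentation rule with made-up balance thresholds."""
    if c.balance >= 100_000:
        return "premium"
    if c.balance >= 10_000:
        return "core"
    return "starter"


def reengagement_trigger(c: Customer) -> bool:
    """Campaign trigger: non-starter customers inactive for more than 30 days."""
    return segment(c) != "starter" and c.days_since_login > 30


# Registry mapping feature names to their rules, so each feature is
# defined once and computed the same way everywhere.
FEATURES = {
    "segment": segment,
    "reengagement_trigger": reengagement_trigger,
}


def compute_features(c: Customer) -> dict:
    """Evaluate every registered rule for one customer."""
    return {name: rule(c) for name, rule in FEATURES.items()}
```

Keeping each rule as a named, independently testable function is one way to make a catalog of several hundred features reviewable by both the Data Science and Marketing teams.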

Developed ETL processes using PySpark, SQL, and Python to extract data from Snowflake. Each feature followed a standardized development lifecycle, ensuring scalability and maintainability.

Utilized corporate tools like Legoland for EMR cluster provisioning and Databricks for development and validation. Ensured compatibility across cloud platforms through rigorous testing.

Implemented automated data validation processes, including custom SQL queries, data quality assertions, and aggregate functions. Conducted user acceptance testing (UAT) and compared results against source systems in HDFS.
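A validation step of this kind can be sketched as an aggregate comparison between a source table and its migrated counterpart. The example uses an in-memory-capable SQLite connection purely as a stand-in for the real source and target systems; the function names, the chosen aggregates, and the tolerance are assumptions, not the checks used in the case study.

```python
import sqlite3


def aggregate_profile(conn: sqlite3.Connection, table: str, column: str) -> tuple:
    """Return (row count, null count, sum) for one column of one table."""
    # Table and column names are interpolated directly, so in this sketch they
    # must come from trusted configuration, never from user input.
    query = (
        f"SELECT COUNT(*), SUM({column} IS NULL), COALESCE(SUM({column}), 0) "
        f"FROM {table}"
    )
    return conn.execute(query).fetchone()


def validate_feature(conn, source: str, target: str, column: str, tol: float = 1e-9) -> list:
    """Compare aggregate profiles of source and target; return a list of issues."""
    src = aggregate_profile(conn, source, column)
    tgt = aggregate_profile(conn, target, column)
    issues = []
    if src[0] != tgt[0]:
        issues.append(f"row count mismatch: {src[0]} vs {tgt[0]}")
    if src[1] != tgt[1]:
        issues.append(f"null count mismatch: {src[1]} vs {tgt[1]}")
    if abs(src[2] - tgt[2]) > tol:
        issues.append(f"sum mismatch: {src[2]} vs {tgt[2]}")
    return issues
```

An empty list means the two tables agree on all three aggregates. Matching aggregates do not prove row-level equality, so a check like this is best treated as a fast first gate ahead of more detailed comparison.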

Developed a pipeline that triggered ETL processes, monitored execution, and populated results in a Tableau dashboard. Enabled real-time monitoring of feature status and data quality.
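The trigger-and-monitor pattern described here can be sketched in a few lines. This is an illustrative outline only: `RunRecord`, `run_pipeline`, and `status_summary` are hypothetical names, and the step that would publish the records to a dashboard data source is omitted.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class RunRecord:
    feature: str
    status: str      # "succeeded" or "failed"
    seconds: float   # wall-clock duration of the job
    error: str = ""


def run_pipeline(jobs: Dict[str, Callable[[], None]]) -> List[RunRecord]:
    """Run each feature job in turn, recording its outcome and duration."""
    records = []
    for name, job in jobs.items():
        start = time.perf_counter()
        try:
            job()
            records.append(RunRecord(name, "succeeded", time.perf_counter() - start))
        except Exception as exc:
            # A failing job is recorded rather than aborting the whole run,
            # so the status view still covers every feature.
            records.append(
                RunRecord(name, "failed", time.perf_counter() - start, str(exc))
            )
    return records


def status_summary(records: List[RunRecord]) -> Dict[str, int]:
    """Count records per status, e.g. for a dashboard headline tile."""
    summary: Dict[str, int] = {}
    for record in records:
        summary[record.status] = summary.get(record.status, 0) + 1
    return summary
```

In practice the list of `RunRecord` rows would be written to a table that the dashboard reads, giving one row per feature per run.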

Designed a Tableau dashboard showing feature processing status, data quality issues, and business impact metrics. Provided detailed reports for campaign performance and model accuracy.

Followed a three-week release cycle, updating JIRA tickets for task tracking and conducting periodic reviews. Adjusted features based on stakeholder feedback and performance metrics.

Conducted performance tuning by optimizing SQL queries and implementing PySpark DataFrame processing to reduce execution times. Automated ownership transfers and feature updates using cloud-native APIs.

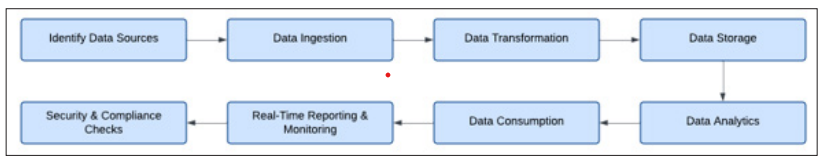

Figure 1: Data Integration Pipeline

This methodology ensured a seamless migration, enhanced data accuracy, and supported scalable real-time analytics, driving significant revenue growth through targeted marketing campaigns.

The migration from the legacy platform to the Feature Platform yielded significant improvements in data processing, operational efficiency, and business outcomes.

The migration from the legacy platform to the new Feature Platform (FP) demonstrated the transformative power of next-generation data integration pipelines in supporting real-time financial market analytics [1-16].

The development of next-generation data integration pipelines has proven to be a game-changer for real-time financial market analytics. By implementing scalable and efficient frameworks leveraging cloud platforms, advanced ETL processes, and real-time monitoring tools, financial institutions can process massive volumes of market data with greater accuracy and speed. These innovations enable seamless integration across diverse data sources, ensuring that financial professionals have access to actionable insights in real time.

This approach not only enhances decision-making in volatile markets but also supports predictive analytics and machine learning applications for competitive advantage. The result is a data infrastructure that meets the demanding requirements of financial services, including transparency, compliance, and operational excellence. As financial markets continue to evolve, the adoption of these advanced pipelines positions institutions to respond rapidly, innovate continuously, and capitalize on emerging opportunities.