Author(s): <p>Udit Patel</p>

This paper explores how AI and Machine Learning is becoming a key participant in cybersecurity's emergence and constantly shifting nature. It disillusioned traditional forms of detection, such as signature-based detection and rule-based systems, with the idea that they are suitable for dealing with new complex threats like zero-day vulnerabilities, APTs, and social engineering attacks. AI and ML provide enhanced features for accurate time operation, real-time threat identification tools, anomaly identification tools, behavioral tools, and predictive analysis, and therefore, have become helpful in the modern context and central to the cybersecurity architecture of many organizations. The paper fleshes out the strengths of AI in intrusion detection, dynamic malware analysis, and phishing detection, among others. The following AI methods are covered in detail: Supervised and unsupervised learning algorithms are used in processing datasets, and autoencoders are used. Deep learning models are suitable for processing big data in real-time. The issues of AI incorporating false positive outcomes, adversarial AI, and data quality problems are also addressed, along with ethical and privacy considerations. The roles of automated incident reporting and self-learning security systems, as forecasted for AI's contributions in managing cyber threats, are designated as crucial. Finally, the paper concludes by stressing the need for organizations to adopt AI solutions for the preemptive approach in the cybersecurity domain.

The growth of dependency on information technologies has been matched by the growth of cyber threats, which puts pressure on the conventional defense-in-depth security model. With the likelihood of individuals and businesses becoming more connected, there are a lot of risks and opportunities for a bad actor to infiltrate networks, systems, and data security. Contemporary cyber threats are not standard and static; they come in the form of malware and ransomware, APTs that call for a different approach to combating them. That may be why conventional methods can no longer adapt to the new and constantly changing attacks, which require sophisticated technologies to cope with the threat. Conventionally used methodologies, including signature-based detection and conventional rule-based systems, must improve in the current context. These methods mainly rely on detecting well-known threats that match the observed activities with the previously detected problems. However, this approach is mainly contemporaneous and typically aims at guarding systems against new, unknown risks. Because targeted cyberattacks reflect more creativity, including penetration of zero-day flaws and engagement of social engineering approaches, there is more need for antecedent solutions. Traditional approaches do not work well in identifying trends and outliers that would indicate an attack is possible. However, they behave erratically and can produce false alarms that flood security teams with alerts that are not a real menace. To these challenges, AI and ML have been the solutions leading to change in the cybersecurity field. AI and ML can process more data than a human analyst or regular systems would, making the patterns and possible threats more accessible. These technologies are especially effective in training new data and emerging cybersecurity threats, identifying anomalous patterns, proactively providing descriptions and predictions, and triggering countermeasures. Therefore, technology systems such as AI and ML are being incorporated into the cybersecurity models to enhance the ability to combat an increasingly diverse threat profile.

Figure 1: Anomalous Behavior- Faster Capital

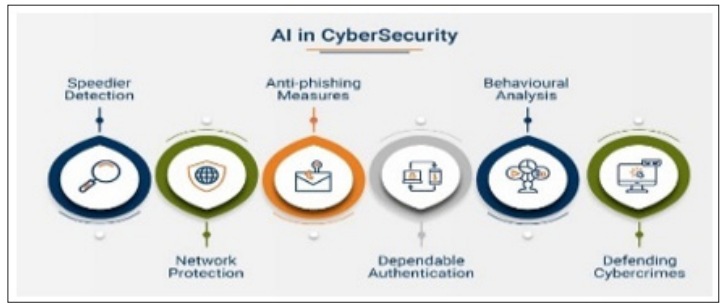

This article aims to identify and discuss avenues for improvement of the current and future cybersphere by utilizing AI and ML. It will go through anomaly identification, behavioral analysis, and auto-security response systems. Also, the article will focus on the opportunities and risks of adopting AI and ML in the contexts of cybersecurity initiatives and architectures, such as problems with data in AI and ML models, volume of false positives, and adversarial samples. Therefore, this article will offer information about the present and the foreseeable development of AI-based cybersecurity systems and the opportunities for the future transformation of the antisecurity threat systems. One of the most critical applications of AI and ML in cybersecurity is their potential to deliver real-time protection from threats. A real-time system can receive large volumes of data from a network and analyze the risks simultaneously, thereby containing cybercrimes [1]. These approaches effectively decrease the time of vulnerability, as traditional methods of safeguarding require a timely response after a breach has been realized. The use of AI and ML positively impacts cybersecurity systems because it makes them more sophisticated to match the rapid development of the threat.

One of the significant uses of AI in cybersecurity is Anomaly detection, where algorithms are programmed to detect unusual behavior that could indicate an attacker. AI-driven anomaly detection is more efficient than conventional techniques because the latter cannot handle complex threats such as insider threats or zero-day vulnerabilities—several algorithms in this regard, including SVM, Autoencoders, and Isolation forests. One-class SVM is a form of supervised learning that uses a boundary around average data points to label anything beyond that boundary as an anomaly. This technique is ideal within a context, revealing normality stream easier than all possible attack patterns.

Conversely, an autoencoder is a kind of unsupervised learning neural networks that aims to encode input data into some feature space with less dimensionality and then decode it. When the reconstruction error is high, the input is an anomaly. Isolation Forests function in an isolation of the anomalies by random segmentation of the data points [2]. Finding anomalies is simpler than locating average points, which makes this approach very effective for datasets of immense size. AI algorithms can handle high traffic levels in a network or logs in real-time and then learn and detect variations expected from an attack. For example, by analyzing logs of login times, access requests, or data transfer rates, AI can identify patterns that can be marked as suspicious, such as hacked accounts or ongoing cyber-attacks. Those unusual conditions are viewed as deviations, making AI even more valuable since it can process numerous data and identify deviations that have not previously been considered as potential threats.

Figure 2: AI in Cybersecurity: Revolutionizing Threat Detection

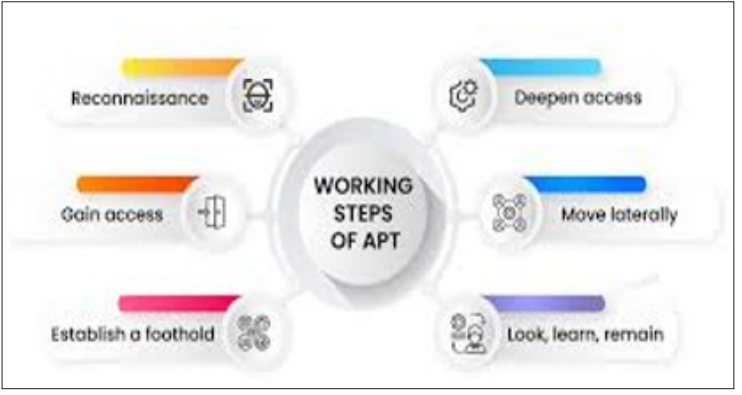

Behavioral analytics, on the other hand, is closely linked to the use of AI and centers on observing and analyzing the behavior of entities/users over time for any anomalies that may threaten the system. With behavioral models, AI can determine anomalies of regular activity from the user, which aids in identifying individuals who are compromised or have malware or malicious users internally. AI-based behavioral models employ algorithms to develop the profile of activities associated with normal system/ user behavior [3]. This baseline is renewed with new data, assuring the system can detect abnormal behavior patterns in time. For instance, the system would get suspicious if a user regularly opens only financial reports and suddenly downloads files not linked to his position. Behavioral analytics is a perfect way of dealing with a problem such as Advanced Persistent Threats (APTs), where attackers work anonymously in a system with elevated access rights and move in a system gradually from one stage to another without being detected while siphoning off critical data. Differences, including minor changes in behavior like changes in how files are accessed and standard communication patterns between systems, can all be detected by AI models. Every time users interact with applications, AI can assist organizations in identifying and responding to risks before they endanger the company.

Figure 3: Advanced Persistent Threat (APT)

Holistic detection has been the traditional process of detecting threats using signed patterns of the malware in question. Nonetheless, the contrast-based technique is gradually losing its usefulness due to the fast-changing nature of malware and the widespread zero-day attacks. AI improves other methods under signature-based detection by using machine learning algorithms to detect new malware signatures that may not have been previously detected. Traditional signature-based systems are prone to frequent updating as they can only identify those threats with known signatures (Nyati, 2018). On the other hand, AI can learn the behavior and shape of malware, compare it with known threats and infections, and potentially recognize the threat, even when it has no specific, unique signature. Neural networks, for example, enable AI to learn from an array of large malware datasets and enhance its function of identifying new strains of existing threats and fresh, distinct strains [4]. For example, deep learning models that analyze code structure and behavior of files can correctly sort them into "good" and "bad" without prior exposure to the files. This necessarily makes the AI-based detection systems more versatile and capable of protecting novelties without updating the signatures.

Machine learning is a part of AI that is useful in improving cybersecurity because deep learning can work with huge volumes of data containing elements that are sometimes hard to decipher by other methods. CNNs and RNNs are deep learning models used in different cybersecurity areas, such as network security, facial recognition in surveillance, and phishing email detection. In principles of network safety, a deep learning solution can predict traffic data to discover signs of cyber-attacks [5]. A series of seemingly unrelated models can effectively detect more complex attacks, for example, DDoS, by using traffic analysis where standard traffic patterns bear little relation to conventional detection systems. Deep learning is also integrated into IDS or intrusion detection systems to improve the identification of zero- day vulnerabilities by categorizing unknown network activity as malicious.

Figure 4: Deep Learning: Models, Enterprise Applications, Benefits, Use Cases, Implementation and Development

Another area of intense interest in deep learning is the field of Phishing Detection. Phishing emails are processed using an NLP technique in AI to identify the message contents relevant to the scams. These approaches can also be employed with deep learning models when trained on vast arrays of phishing emails featuring the alluring words and phrases usually used when establishing phishing scams. However, deep learning models are used effectively in facial recognition systems, especially security and surveillance systems [6]. These systems can provide real-time surveillance of video clips in order to track intruders or people with security dangers. Using new data, deep learning models can provide security solutions with a continuously enhancing capacity that, in turn, enhances security measures.

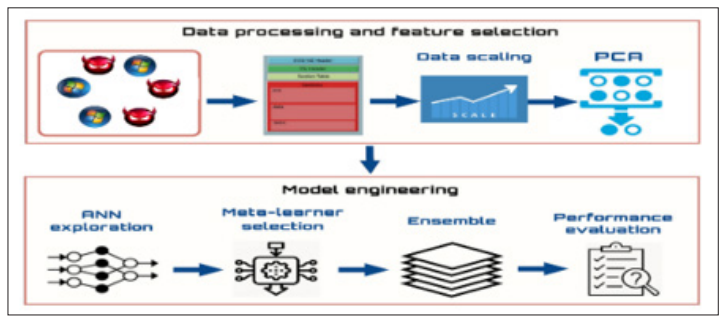

Dynamic analysis focuses more on the natural functioning of AI and ML models of the analyzed program in a sandboxed environment with infection detection and behavior analysis. In these environments, malware is run in a contained context that lets AI algorithms study the behavior of malware regarding system resources and other factors such as network configuration and software components. By linking particular activities with malware, including illicit database access and irregular file executions, AI can assess whether a data or program file threatens an installation (Wu et al, 2020). Dynamic analysis has high accuracy when detecting new or unknown malware types mainly because it relies on behavior instead of a specific signature. Thus, other dynamic analysis models, such as a neural network or decision tree, can indicate how the malware may transform. For example, those who write malware will change the code to avoid detection from signs or markers left by other programs. In response, ML models leverage new behavior patterns to change their threat detection criteria and to become even better at recognizing and managing new threats [7]. These techniques are helpful when identifying attacks that have not been previously seen, known as zero-day attacks.

Figure 5: Ensemble-Based Classification using Neural Networks and Machine Learning Models for Windows PE Malware Detection

It is crucial to follow its development, as predictive analytics adds a proactive approach to the organization's cybersecurity plans. AP II AI can predict future threats and risks in cyberspace through historical records. The time series analysis and regression models are used to forecast the models, patterns, and trends from previous cyberattacks. These predictions assist organizations in preparing adequately in advance and reducing threats where they are still in their infancy [8]. For instance, AI can review the possibility, frequency, and target system to secure the chance of a particular attack, like ransomware or phishing. The aspects of AI help various cybersecurity teams prioritize patches, updates, and security enhancements, ensuring a possible decrease in the attack surface. Preventing threats usually enables organizations to prevent disruptions, financial losses, and data breaches.

One of the most potent ways AI has been mainly applied in cybersecurity is by automating threat responses. Dedicated security systems are typically very dependent on a security operator to take an action, which takes much more time and may keep the required window of vulnerability open sufficiently long. The AI can triangulate advanced persistent threats, and the primary responses to threats include quarantining infected endpoints, blocking hostile IP addresses, or running through set security scripts [9]. In one way or another, using AI systems decreases the time it takes to counter threats, lessening the total consequence of cyber-attacks. For instance, an AI system can recognize that a particular network behavior is anomalous, freeze the node involved, and notify the security team within a few seconds. It also dramatically increases the chances of stopping future attacks and minimizes their impact on the organization. Furthermore, AI systems can perform complex consecutive operations much quicker than human operators, thus guaranteeing that even-level attacks will be contained shortly.

AI is good at handling large volumes of data in real-time and thus can be used to evaluate network traffic, users, and system logs constantly. Information from this is utilized to recognize patterns and other related threat intelligence bases for potential attacks. Real-time threat detection means organizations can take action instantly, preventing attacks from escalating to comprehensive breakthroughs [10]. Other subcategories include real-time detection, which mainly uses artificial intelligence to adjust to new threats dynamically. While traditional systems store rules within the system and then use pre-determined entries to make a decision, AI models adapt to the new incoming data over time. Therefore, AI systems can modify the detection algorithms as and when new threats surface in the global environment. This is important because, in today's world, hackers are constantly finding new ways of infiltrating an organization.

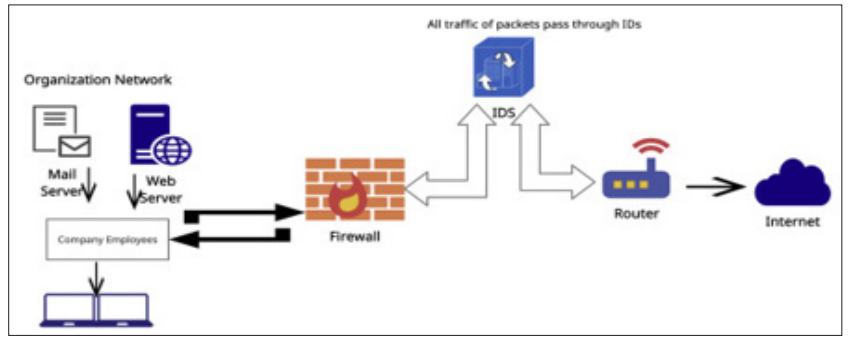

Intrusion Detection Systems (IDS) are essential for analyzing network traffic and searching for intrusions. While traditional IDS rely highly on pre-set rules and signatures, AI IDS are much more flexible and adaptive. Other categories of AI models, such as clustering models and support vector machines, can identify new threats by following abnormal behavior in network traffic. IDS that use artificial intelligence continue to learn from the data they analyze, thereby enhancing efficiency in detecting new intrusions and, at the same time, decreasing the number of false alarms. This is unlike traditional systems that may not detect new types of attacks since they were programmed based on the signature of an attack. Additionally, the IDS based on AI can distinguish various non-critical fluctuations, like increases in traffic and critical activities, giving a clearer vision of threats [11].

Figure 6: Network Intrusion Detection System - an overview

Natural Language Processing and its use in Email Filtering Phishing is one of the oldest and most common types of cyberattacks, where a bad actor manipulates a victim into providing information or executing a malicious file in an email. AI, more specifically NLP, improves the capability of the current email filter by analyzing the content and context of the emails for suspicious phishing attempts. NLP models are trained to look at patterns that may indicate suspicious activity, the URLs used, the type of language used, or even the sender details [12]. It offers AI models that can identify between a genuine email and a phishing attempt more accurately than current filters. Whereas standard filters concern themselves with the email headers alone or simple text analysis, NLP systems scan the actual text of the body of the mail as well as even other data or file attachments to distinguish between actual phishing emails, which are usually beyond the reach of usual filters. This assists various organizations in preventing their employees or customers from being tricked into falling prey to issues related to phishing.

Figure 7: Natural Language Processing: A comprehensive overview

Enhanced Accuracy of Threat Detection

AI and ML have advanced threat detection in cybersecurity by enhancing the possibility of correct notifications and reducing positive and negative mistakes. Most of the traditional approaches to cybersecurity threat detection involve analyzing a specific set of patterns or rules, which may need to be more effective in the face of today's dynamic and sophisticated cyber threats. AI and ML, however, are productive in doing several accurate analyses, which would help identify minute changes from the usual pattern that may symbolize a threat. Historical data shows that these technologies can enhance detection rates and, in the process, minimize false positives, which are likely to overwhelm a security team with non-real threats. Furthermore, they also reduce false negatives, so many malicious activities go unnoticed compared to other systems.

Time-critical tasks carried out through AI and ML provide a much faster response to cyber-security threats. In many traditional cybersecurity systems, threat identification and response can take a long time – they might require human action. Threat detection and subsequent reactions can be fully realized and handled through AI- driven systems so that an organization responds to threats within milliseconds [13]. For example, suppose a particular activity is considered malicious. In that case, an AI system can take some action, such as disconnecting the networks or systems related to the threats, blocklisting specific IP addresses, and executing preprogrammed security policies.

Vulnerability management will increase by improving the ability of the Department of Defense to monitor and adjust to new and planned attacks. AI and ML are at the center of vulnerability management, assisting organizations in deciding which vulnerabilities to target first and even forecasting potential future vulnerabilities. Given the possible threats in large and multilayered IT systems, it is impractical to ask cybersecurity teams to address each. AI models can utilize big data of known vulnerabilities, exploit patterns, and threat intelligence feeds about vulnerabilities and feeds to determine which of these vulnerabilities are likely to be exploited. It also enables security teams to prioritize risk because the (security)s cannot fix all the issues simultaneously.

Figure 8: AI in Vulnerability Management

Cyber threats are numerous, and with technological advancement, hackers often change their strategies to compromise known security measures successfully. These and other AI and ML models are chosen because they can learn from every piece of data introduced to them so that the presented data summarizes their performance over time. That flexibility enables AI-driven cybersecurity to remain functional, even as threat vectors change over time. Strengthening learning and transfer learning are methods that give AI systems different capabilities of learning new patterns of attack and related strategies as a new process without requiring a programmer to program it [14]. This makes it possible for data- oriented security systems with support from artificial intelligence to note new kinds of threats, such as new forms of malware or phishing attacks, faster than incumbent systems. Additionally, it establishes ongoing learning so that the AI systems improve with time in separating good actions from bad ones, decreasing false positives and negatives.

These are cases when regular traffic is considered malicious, while security specialists dealing with alert fatigue receive much useless information. On the other hand, scenarios where the system does not identify a real threat are known as false negatives that can result in a security vulnerability. Approved in advance by These inaccuracies can significantly impair the functioning of cybersecurity operations by either making analysts increasingly apathetic to alerts or letting cyberattacks enter the system unnoticed. In order to avoid the presence of many false positives and negatives, it is necessary to bring AI/ML models closer to an optimal state [15]. This can be done by tweaking the alert levels and feeding new data to the models more frequently to increase their effectiveness. The security teams should practice supervised learning techniques with models trained on labeled datasets. Further, unsupervised learning is applicable in identifying new risks because the data input the models perform the analysis on can contain risks that have been missed during data preparation. Using both methods will increase the accuracy of results, and no real threats will be missed while eliminating false alarms.

Figure 9: Ai Model Security: Concerns, Best Practices and Techniques

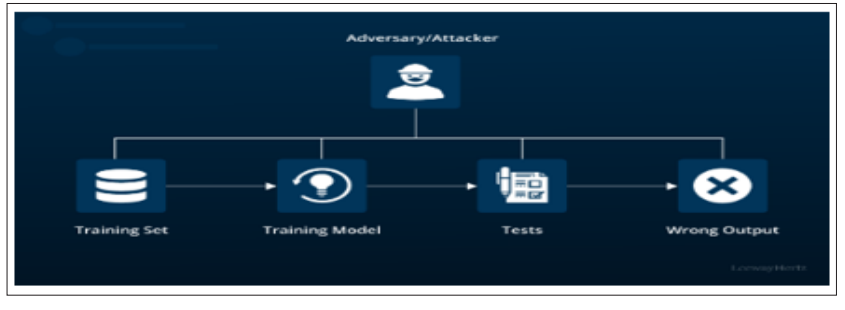

Adversarial AI threatens cybersecurity systems because AI naturally adapts to its surroundings. Since inputs determine the kind of predictions made, attackers can alter them slightly; the AI will give improper output. These adversarial attacks take advantage of the flaws in machine learning algorithms and thus can misclassify new traffic as either malicious or benign. This manipulation may lead to AI-deployed cybersecurity measures being completely compromised to allow intrusion. In order to control adversarial AI threats that AI models might induce, cybersecurity frameworks have to contain strict defensive measures. One type of defense approach is adversarial training, in which models are trained on the input containing clean inputs and adversarial examples. This makes it possible for the model to understand what accurate data looks like and how manipulated data looks like. In addition, the model must be validated and monitored on an ongoing basis to determine whether any variation suggests an adversarial attack. Security teams should also adopt robust AI frameworks that include cases that use techniques like ensemble learning, where several algorithms collaborate to offer accurate results that the attackers cannot manipulate [16].

The role of AI and ML in cybersecurity is highly dependent on the availability and quality of the data. For training models to correctly identify malicious activity, high-quality labeled data must be provided. It is rare to get such data. In cybersecurity, only some data can be structured or well-defined, meaning that this can be challenging even when trying to train models of acceptable effectiveness [17]. Furthermore, it must be stated that information necessary for recognizing some threats may only sometimes be disclosed, as new cyber threats emerge rapidly and often hardly leave any traces. Therefore, organizations must implement proper data collection and preprocessing strategies to overcome these challenges. These pipelines should clean, label, and format the data for fine training and deployment of AI/ML models. Where labeled data is limited, various approaches like unsupervised or semi-supervised learning can be used to identify patterns in the unlabeled data. Another approach can be synthetic data generation, where generated and artificial data are used as additional information for machine learning datasets and can be used to enhance machine learning models and their accuracies.

Today, information computer personnel and cyber attackers seek weak links in the cybersecurity protective layers and find new TTPs. Therefore, if an AI/ML model is trained on the daily updated dataset, the models can degrade slowly over time. Of all these threats, zero-day vulnerabilities remain hard to identify using AI systems because they are created by taking advantage of the unknown flaws in both software and hardware. Due to the constantly evolving nature of various threats present in cyberspace, the AI/ML models need to be periodically updated [18]. Transfer learning is used to keep the models responsive, which involves using a particular model trained for a task in another similar task. This enables the system to respond to new threats provided by the knowledge base to give efficient output. Also, reinforcement can be employed to design models that adapt over time to the environment, enhancing the new and emerging threats. Auto- retraining scripts should also be required to retrain these models when the latest threat data is acquired.

Some of the issues arising as businesses and other companies begin to integrate artificial intelligence (AI) and machine learning (ML) for cybersecurity are. However, AI/ML systems have pros and cons; to get the best from them, the following best practices must be observed. Out of the above best practices, the first five are related to human interaction with the AI system, understanding of the model, AIS integration to the current systems, and limitation considerations.

AI systems are very efficient at handling a large amount of data and drawing conclusions from the data provided; however, they could be better. Cyber defense needs situational awareness that is best done with context, and AI does not possess context or instinct [19]. For instance, we have an AI system that identifies speculative logins, but the distinction between a genuine attempt to log in and a possible form of attack requires human input. Due to this challenge, organizations should ensure that AI works closely with human analysts who will help analyze the findings. With the ability to work with big data and automate processes, AI systems can significantly assist security teams by taking more straightforward tasks away from them and letting them focus on significant picture issues. When combined with human-derived contextual analysis, the results of AI data processing can increase an organization's cybersecurity level. Another critical factor in this collaboration is the readiness of security teams to work with AI systems because people must understand what the AI provides them.

The interpretability of the AI models is the other challenge that hampers cybersecurity efforts, as suggested by Ni et al. It should be noted that most AI systems, intense learning ones, seem to be "black boxes," and it is challenging to explain the criteria according to which the AI arrived at the produced decision. This lack of fear is a problem in cybersecurity, mainly when behaviors should be explained to the investing public and other stakeholders [20]. The security team requires the AI's recommendations to be trustworthy and rely on them. However, this trust is protocol- structured and can diminish when deducing AI recommendations needs to be more comprehensible. The use of Explainable AI (XAI) approaches has to be seen as a critical priority. These ways are essential in providing AI models explainability by presenting the decisions from distinct steps. For instance, methods like decision trees, sheer scoring, Shapley Additive Explanations, and Local Interpretable Model-agnostic Explanations will explain which inputs into the AI dictate the predictions. Achieving this level of transparency is very significant to cyber-security personnel since it strengthens their confidence in the use of AI systems and also facilitates the enhancement of their decision-making process.

Figure 10: Explainable AI: How it Works & Why you can't do AI without it

Most companies already use traditional security products, including firewalls, IDS, and SIEM systems. When adopting AI, it is essential to map the strategies to existing tools so that new AI solutions can integrate with previous solutions. Another good suggestion for the AI-based integration is to do it step by step. Instead of acting as the new generation of security systems, AI can work as an additional layer to augment existing tools. For instance, AI can be integrated to work with the current SIEM system in an organization to enhance the ability of the system to identify and combat possible threats. Furthermore, the system's output has to complement existing tools to avoid corrupting or distorting the data. The guidelines for transferring information between the integrated AI and traditional systems must be set for proper integration.

In many cases, getting the resources for implementing AI is challenging for most organizations but even more challenging for smaller organizations. AI/ML application model updating, training, and creating are resource-intensive processes regarding computational power, storage, and human capital. These constraints imply that even though small firms are also at risk of cyber- attacks, the kind of AI technologies are not within their reach. The problem is that AI runs into difficulty when paired with large data sets, a difficulty known as the "half-life of AI." One approach to this conundrum is to use cloud-based AI services. Combining fee-based and product-based services, cloud providers offer AI and ML as integrated services delivered via their IaaS solutions [21]. They help organizations include the latest AI technologies in their competencies without investing in expensive hardware and hiring unique staff qualified in modern ML techniques. Small organizations are in a position to leverage the establishments generally available with AI-based cloud solutions for cybersecurity in a cost-efficient manner.

The second strategy is to engage external service providers in AI- related services. These providers can provide turnkey solutions that may be fine-tuned to ensure they fit the respective needs of an organization. This reduces the necessity for businesses to invest in an in-house alternative AI model and means that even more compact enterprises can exploit a skinny AI cybersecurity platform without having to establish expensive software and model construction and upkeep [22].

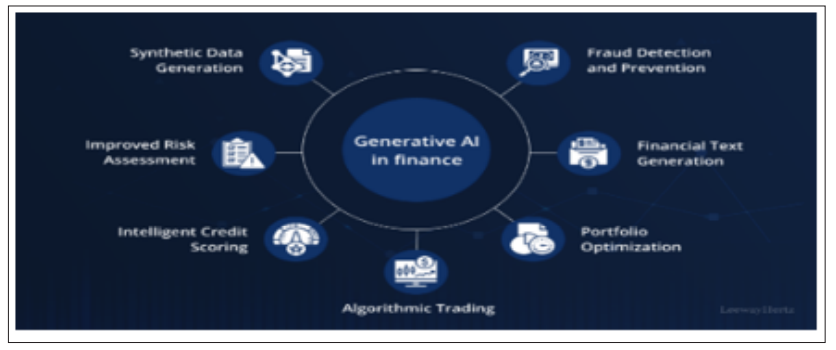

AI and ML have transformed sectors by improving security systems, checking fraud activities, and protecting vital data. In this section, various AI and ML programs across the three key sectors of retail, health, and financial services will be discussed to show how these technologies enhance the strength of cybersecurity.

The retail industry remains a popular target since it involves numerous consumer data sets that companies process daily. AI and ML implementation have brought improved frameworks to manage such risks, including fraud detection. Using AI, several fraud detection systems based on customer behavior studies detect suspicious behavior with high chances of fraudulent transactions. Using machine learning, this type of fraud at retail businesses can be identified well in advance, and the threats to customers' accounts can be prevented, thus reducing the monetary losses to both the business and the customer. AI contributes significantly to customer protection by analyzing purchase procedures and finding potential forgery breaches or security risks [23]. For instance, AI systems can identify one or many excessive and suspicious purchases, such as a more significant number of costly purchases, within a short period. They then can block or freeze the account for further confirmation. Such measures ensure that risks are detected and managed before getting into full-blown breaches in retail businesses. AI optimization is also central to fraud detection, aside from generating sales through recommendation systems. It can recognize patterns of normal customer behavior and differentiate regular customer activity from abnormal activity that may indicate account compromise. Through constant feedforward and feedback loop analysis of consumers' purchasing patterns, showroom AI enhances the shopping experience by improving the clients' security as they shop.

It has realized that the use of AI and ML in healthcare has drastically improved the sector's cybersecurity. Medical data is susceptible and comprises patient records, health test results, and even treatment history; its protection is critical. AI and ML are used to improve the security of information, reduce cases of breaches, and protect sensitive medical data. For instance, big data analytics is used to scan numerous datasets of patient data and identify any deviations as to who accesses patients' data to rule out unauthorized use of information from medical records. Another important application of AI in healthcare is the use of predictive analytics, specifically the health and security of the patient [24]. AI can, for example, help healthcare providers identify potential health risks to their patients based on the number-crunching the patient data offers. Furthermore, these predictive models can detect abnormalities in patient care processes, describing when a patient is subjected to delays in accessing patient data or even patient records, which indicates that the healthcare provider has experienced a cyber- attack. This protects the patient's medical data, keeps the systems running, and prevents any halt to patient treatment. They are also applied in imaging technologies to improve security by protecting stored digital health information and preventing leakage. For instance, AI can track access to patients' images or recordings that deserve protection and alert anyone when they try to alter or share data. With the help of AI, healthcare cybersecurity policies may be implemented successfully, and therefore, facilities can provide a safer environment for patients and employees.

Figure 11: Generative AI in Healthcare is Revolutionizing Patient Care and Efficiency

Regarding AI and ML applications, financial services cannot exist without active real-time monitoring of fraud and threats. Several banks, credit unions, and financial institutions process vast amounts of customer data and thus become an easy target for hackers. Real- time transaction processing in credit card companies involves using artificial intelligence to monitor fraudulent activities – such as withdrawals, high spending volumes, or access from unusual geographical locations. These AI systems use the new transaction data stream to learn how to identify further and minimize fraud cases. Real-time fraud detection has benefited from Artificial Intelligence to embrace machine learning to alter its strategies [25]. These models can quickly determine outliers of normal transaction behavior and mark them for review. For instance, should a credit card be in operation and then used in a different country in a short period? The chain of operations is halted, and the customer is notified to confirm the transaction. They assist people in identifying fraud and scams instantly and protect their financial information.

Figure 12: Generative AI in finance and banking

Apart from fraud detection, AI-integrated systems are helpful in further protecting the sector by evaluating and anticipating cyber threats. AI is also capable of identifying potential threats based on past and likelihood of an attack and responding with countermeasures before an attack happens. Given that cybercriminals are learning from experience, he stated that artificial intelligence is ideal for modeling real-time security in finance systems. ML also helps consumers and financial sector firms meet legal compliance standards [26]. Strict laws like GDPR also govern established bodies; with the natural assistance of AI systems, all processes and transactions would go under legal scrutiny. Suppose there are any violations of the set regulatory measures. In that case, machine learning models can identify these violations to ensure that financial institutions are on the right side of the law regarding compliance and safe operations without expensive fines.

Ethical and Privacy Considerations in AI-based Cybersecurity AI ML in cybersecurity is valuable in the following ways: threat identification, detection, and response. However, it also brings significant ethical/privacy issues that must be addressed to avoid or minimize the misuse of such products. This section explores two primary considerations: privacy of data, adherence to the national laws and regulations governing the work of an AI model, and the necessity to eliminate prejudiced choices of an algorithm.

Figure 13: Ethical Management of Artificial Intelligence

To address the issue of AI cybersecurity VMLC, as organizations continue to adopt traditional AI-based cybersecurity measures, they need to ensure that the AI model they use meets legally binding data protection regulations such as GDPR and CCPA. The GDPR is another data protection law established in 2018 to standardize persons' personal data collection in the EU member states (European Commission, 2021). It highlights specific aspects such as consent, visibility, and the right to oblivion and requires that organizations guarantee customers' data security. Moreover, in the case of AI and cybersecurity, GDPR compliance implies that AI systems cannot violate individuals' rights [27]. For instance, AI algorithms that determine user behavior for anomaly detection must achieve this without infringing on the user's data privacy. According to the privacy by design concepts, privacy protection measures should also be designed into the AI system at the initial design phase of the organization. This approach makes it easier to manage data leakage and misuse risks

Bias can be from sources such as data used in developing models, the choice of the algorithms, and preconceived notions made during model making. Bias in data means that if AI systems act as jurors, then those systems are likely to create unfair or discriminative justice that will entrap users who did not deserve such treatment or disproportionately scrutinize certain users. Due to the use of bias, organizations need to develop measures that prevent discrimination in the models used by AI systems. This includes incorporating diversified and recommended data sets during the models' training. This suggests that failure to incorporate diverse perceptions regarding demographics and scenes during training can lead to a high risk of bias in the AI results. Further, a regular check and balance of the AI systems should be carried out to detect any emerging biases in legalities and then address them. One crucial element is that the process must be more transparent to eliminate prejudice. Organizations need to work towards explicating and explaining decisions when using AI-enabled systems. This shows that applying several explainable AI approaches makes it possible to determine objectivity in how AI makes decisions and where bias might lie.

The problem with zero-day vulnerabilities is contentious because there are holes in software before the developer can fix them. Innovative applications of AI in this area are yet to be realized by employing machine learning techniques that would help scan large data sets for risks that cybercriminals might exploits cybercriminals might exploit. For example, new AI tactics are being adopted, drawn from behavior analysis and predictive modeling, to predict the risks assessed from previous attacks. Even in cases where no change logs exist, or the threats are not reported, usage data is gathered by the AI systems, coupled with intelligence data, to build models that provide insights into zero-day vulnerabilities that may exist within software systems. Besides, this approach reduces the time frame within which the attackers are likely to act and improves the security status of organizations. Moreover, AI can help adjust policies and measures to a more real-time concept with the help of threat maps and results [28]. Over time, AI models will continue to update the predictive building blocks, and organizations will be able to actively guard against the risks associated with zero-day vulnerabilities, substantially improving their standing against cyber threats.

Given continued developments and varieties of cyber risks and attacks, speed and efficiency of response have often been critical. Appropriate tools developed through AI technology make it possible to understand threats actively and respond to them in line with previous programs set by the program. It makes response faster and frees up time for the security teams to concentrate on higher value-addition activities. Looking to the future, one can also forecast that the application of the blend of responses and learning patterns will be developed along with the existing AI techniques [29]. These systems will be making use of data that relate to past incidents in order to fine-tune their response strategies in perpetuity. For instance, AI can analyze prior attack patterns to determine which response measures drew maximum results, thus making organizations future-proofing strategies in handling such circumstances. This change will be beneficial in improving operational efficiency and increasing the efficiency of threat management countermeasures.

Advanced security technologies based on artificial intelligence will create new security systems, such as self-learning systems capable of identifying threats, responding to them, and eliminating them independently and in real-time. Due to the growing popularity of cloud services and IoT devices, the need for cybersecurity solutions capable of functioning in different environments supported by artificial intelligence will be high. Furthermore, continued progress in artificial intelligence will create ever-strengthening security environments regarding cooperation [30]. Security professionals will learn and chat about growing threat intelligence and apply unity gain to improve the earlier situation. For example, AI systems may collect data from IoT devices such as personal computers and cloud services to make a combined picture of the threat. Such cooperation will allow, for example, organizations to meet potential weaknesses ahead of time and optimize their security efforts.

The conclusion re-emphasizes that AI and ML have disrupted how organizations engage with and respond to cybersecurity threats. In addition to relatively subtle variations in recognizing threats, AI and ML change how responses are initiated as well, due to the incorporation of algorithms on the one hand and real- time data analysis on the other. The traditional approaches to cybersecurity based on detection, such as signature, can no longer cope with emerging cyber threats such as zero-day exploits, new malware, and social engineering exploitation. Machine learning and AI provide customizable solutions, solutions that can learn and improve over time, and scalable solutions; all attributes are essential when dealing with the complex nature of these threats. Thus, using artificial intelligence in conjunction with supervised and unsupervised learning models helps identify the previously unnoticed attack vectors, new behaviors (unknown to attackers but in practice), and threats in real-time. Hence, deep learning models and autoencoders, for example, are able to improve the capacity to identify emerging strains of malware in a situation where no absolute reference is available. Integrating AI in processing big data is a critical aspect of scanning and defining suspicious activity, naturally leading to insider threats and APTs' early identification. Incorporation of AI in the context of predictive analytics is another step higher as it enhances predictive analytics for possible attacks to prepare organizations and change them from a purely reactive to a proactive manner. As helpful as it is to incorporate AI, there are drawbacks inherent in incorporating the technology into cybersecurity. False positives and negatives are still issues where the AI models give a wrong classification and label standard activity as an attack or vice versa. This can result in alert fatigue, where low-risk alarms, or worse, swamp security analysts, overlook real threats. Adversarial AI is another vital threat type because attackers manipulate the output of AI models, thus causing a threat to the cybersecurity system. However, the quality and accessibility of the training data set constitute another aspect that affects AI models. A good-quality labeled dataset hinders effective learning in AI systems, thus making them less effective in threat detection missions. Such adversaries must be solved through systematic strategies, like adversarial training and improvements of AI models with fresh threats.

Another essential aspect of AI for cybersecurity is ethics, with the primary decisions leading to data privacy and compliance. AI systems must also meet regulatory legal norms and standards, such as the General Data Protection Regulation (GDPR). Another issue that could result in unfairly discriminative or plain wrong decisions is bias in AI models. Pursuing these goals must be constant, the algorithms used must be explained, and the datasets coming in should be inclusive. In the future, the advancement of AI in cybersecurity seems inevitable. AI will be utilized in automatic event logging, especially when threat detection requires no input from other systems, thus significantly reducing response time. Furthermore, AI will also be highly utilized in detecting zero- day vulnerability and thus improve an organization's capacity to protect against attacks on unknown vulnerabilities of software products. Advanced intelligent security solution systems will be an integrated function that performs a self-learning mechanism to adjust the newly emerged threats, which is expected to minimize the vulnerability window.