Author(s): Chandra Sekhar Veluru

The development of robotics has affected many parts of the world, but applying robotic systems in complex environments remains a challenge. This paper proposes combining GANs with robotics computer vision to enhance image processing and SLAM, both of which are key to autonomous navigation. The methodology quantitatively compares traditional SLAM systems with GAN-supplemented ones across various environments. The results show higher localization and mapping accuracy, better performance in complex, visually rich environments, and higher computational efficiency. This paper presents an innovative application of GANs in robotics vision and, in the process, establishes a new benchmark for how robotic systems perceive the real world. The study thus contributes to the advancement of robotic systems: it offers a novel approach to using GANs as a robot vision tool and provides empirical evidence that traditional robotic platforms can be integrated with machine learning.

In recent years, robotics has advanced in many areas, including manufacturing, medicine, and self-driving vehicles. Among its main functions, an autonomous system integrates sensors to perceive and interact with its surroundings. Simultaneous Localization and Mapping (SLAM), a crucial navigation technology for robotics, allows a robot to build an internal map of its environment while simultaneously tracking its own location within it [1]. However, SLAM systems can fail under dynamic conditions, particularly when they rely on purely sensor-based approaches. Especially in the last few years, much research has aimed at improving SLAM-based object detection systems [2]. These systems combine the operation of the sensors with the robot's ability to move through its environment and build a map of its surroundings. The area a sensor covers is often small, so conventional methods may fail to detect some regions. Robotic vision and SLAM navigation systems therefore remain under active development as supporting tools. Adapting to a changing world, recognizing visual complexity, and avoiding interference are closely linked challenges.

Generative adversarial networks (GANs) are a prominent class of neural network models in computer vision. Their most distinctive feature is that two neural networks compete to produce the result: the generator produces synthetic data, while the discriminator tries to tell real data from generated data.
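
For reference, this competition is usually written as the minimax objective of the original GAN formulation, in which the generator G maps a noise vector z drawn from a prior p_z to a synthetic sample, and the discriminator D(x) outputs the probability that x is real. This is the standard textbook form, not necessarily the exact loss used in this study:

$$
\min_{G}\max_{D} V(D,G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]
$$

D is trained to maximize V (classifying real and generated samples correctly), while G is trained to minimize it (pushing D(G(z)) toward 1 so that its samples are mistaken for real ones).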

GANs are attracting attention because they can address several critical challenges that SLAM technology faces. One of their main benefits is that they can enhance image processing and perceptual understanding, which in turn supports accurate positioning and mapping. In this paper, we examine advances in Visual Simultaneous Localization and Mapping (VSLAM), particularly the integration of GANs with robotics computer vision. We discuss existing challenges, present leading models, and outline the future of generative artificial intelligence in robotics [3]. The paper is organized to give a broad view of the topic, covering theory, application, and evaluation metrics.

Simultaneous Localization and Mapping (SLAM) is the foundation of navigation in autonomous robotics, allowing robots to learn about and interact with their surroundings. The field has come a long way, and multiple approaches have emerged to overcome the typical challenges of SLAM [4]. Feature-based SLAM, an early technique, identifies and tracks landmarks in the environment to estimate the robot's motion and the surrounding structure. Despite its popularity, feature-based SLAM struggles when features are scarce or repetitive. Direct SLAM techniques, which work with raw pixel intensities instead of extracted features, have been proposed to solve some of these problems [5]. These approaches can exploit weak image cues even in low-light environments, but they may be computationally demanding and are easily affected by changes in lighting [6]. Visual-inertial SLAM fuses images and inertial measurements in a single system, offering the robustness and accuracy needed in dynamic environments where image-only SLAM may fail.
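
To make the feature-based versus direct distinction concrete, the sketch below estimates a horizontal shift between two 1-D intensity rows by minimizing the photometric error over raw pixel values, which is the core idea behind direct methods. It is a deliberately tiny toy under stated assumptions (integer shifts, a single scanline, no rotation or depth); real direct SLAM systems optimize full camera poses with sub-pixel, gradient-based solvers. The function names are illustrative only.

```python
def photometric_error(ref, cur, shift):
    """Mean squared intensity difference between ref[i] and cur[i + shift],
    computed only over the pixels that still overlap after shifting."""
    total, count = 0.0, 0
    for i, r in enumerate(ref):
        j = i + shift
        if 0 <= j < len(cur):
            total += (r - cur[j]) ** 2
            count += 1
    return total / count if count else float("inf")


def estimate_shift(ref, cur, max_shift=5):
    """Return the integer shift in [-max_shift, max_shift] with the lowest error."""
    return min(range(-max_shift, max_shift + 1),
               key=lambda s: photometric_error(ref, cur, s))


# A scanline with a bright blob, and the same scanline moved 3 pixels to the right.
ref = [10, 10, 10, 50, 200, 50, 10, 10, 10, 10, 10, 10]
cur = [10, 10, 10] + ref[:-3]
print(estimate_shift(ref, cur))  # 3
```

No keypoints are detected: every overlapping pixel contributes to the error, which is why direct methods can still work where distinct features are scarce, and also why a global brightness change between frames disturbs them.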

Despite these advances, several challenges remain. Moving objects can mislead the robot about landmark locations by displacing features from their true positions, and depth perception problems complicate matters further because depth determines how the robot judges the structure of a surface [7]. Prior work has stated that temporal consistency of the map is one of the fundamental elements of successful loop closure. Nevertheless, loop closure is considered a bottleneck in the overall procedure and often takes the longest to complete. Scalability is also difficult to achieve, since the number of elements the map must represent grows with the complexity of the environment [8]. Generative adversarial network (GAN) technology is among the most sophisticated approaches available and is heavily used in computer vision research, largely because of its ability to produce high-quality images and videos. Notably, GANs have been applied to style transfer [8], in which the visual content of an image is retained while its style is replaced with another, even an artistic one, for example by modifying a face or rendering it in a different style.
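
Loop closure begins with recognizing that the robot has returned to a previously visited place. A common approach, sketched below under simplifying assumptions, summarizes each keyframe as a bag-of-visual-words histogram and flags earlier keyframes whose histograms are very similar to the current one. The cosine-similarity threshold of 0.9 and the minimum frame gap are illustrative values, not parameters from this study, and a real system would verify each candidate geometrically before adding a loop constraint. The linear scan over all past keyframes also illustrates the scalability problem: its cost grows with the size of the map.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length visual-word histograms."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def loop_closure_candidates(keyframes, query_idx, threshold=0.9, min_gap=10):
    """Earlier keyframes whose histograms match the query closely enough.

    Frames fewer than `min_gap` steps before the query are skipped, because
    consecutive frames look alike without implying that a loop was closed.
    """
    query = keyframes[query_idx]
    return [
        i
        for i in range(query_idx - min_gap + 1)  # empty if the query is too recent
        if cosine_similarity(keyframes[i], query) >= threshold
    ]


# Hypothetical 4-word histograms: frames 0 and 12 view the same place.
frames = [[5, 0, 3, 1]] + [[0, 4, 0, 2]] * 11 + [[5, 0, 2, 1]]
print(loop_closure_candidates(frames, query_idx=12))  # [0]
```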

GANs have been used to generate medical images for training machine learning algorithms, as well as to segment anatomical structures and identify abnormalities, which helps overcome the limits of small datasets [9, 10]. GANs are also useful in remote sensing and environmental applications, where they improve the quality of the final satellite images used for land cover classification and environmental monitoring [11]. In parallel, much current research addresses SLAM within a deep learning framework, using learned feature detection and landmark localization.

One study discussed a deep learning technique for visual SLAM that uses a CNN to select features from camera images that are less sensitive to human movement, improving SLAM performance compared with traditional approaches [12]. Another describes a deep-learning-based SLAM system built around characteristics learned from data [13]. Such approaches can be quite effective in crowded scenes, for example when objects are occluded [14]. Research on deep learning SLAM aims to improve accuracy and stability by applying deep learning algorithms to SLAM.

Deep learning has been widely applied in robotics. Among recent deep learning trends, generative adversarial networks (GANs) have been shown to be particularly effective for enhancing SLAM. A GAN is a deep learning framework consisting of two competing neural networks. Its first main module, the generator (G), produces synthetic data that follow the distribution of the original data.

The second module, the discriminator (D), is designed to distinguish real data from generated data, whereas G does not classify data at all; it only produces samples [15]. Through adversarial training, in which G and D are updated in alternating iterations, G improves at generating various forms of media while D becomes better at distinguishing real samples from counterfeit ones [16]. GANs were initially applied mainly to image generation, but their powerful image enhancement capabilities have since received significant attention. A GAN can learn the distribution of clean images and use that knowledge to improve noisy or blurred inputs [17]. This ability makes GANs attractive for robotics applications, where sensor data are susceptible to noise and artifacts.
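
The adversarial training described above can be summarized by the per-batch losses each network minimizes. The sketch below computes them from the discriminator's output probabilities using binary cross-entropy, with the commonly used non-saturating generator loss (G is trained to make D(G(z)) large rather than to make 1 - D(G(z)) small). It only evaluates the losses; the networks and optimizer steps are omitted, and the probabilities in the example are made up for illustration.

```python
import math

EPS = 1e-12  # guards against log(0)


def discriminator_loss(d_real, d_fake):
    """BCE loss for D: push D(x) toward 1 on real samples and D(G(z)) toward 0 on fakes."""
    real_term = -sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1 - p + EPS) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating loss for G: push D(G(z)) toward 1, i.e. fool the discriminator."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)


# Early in training D separates real from fake easily, so G's loss is high.
print(round(discriminator_loss([0.9, 0.95], [0.1, 0.05]), 3))  # 0.157
print(round(generator_loss([0.1, 0.05]), 3))  # 2.649
```

As G improves, D's outputs on generated samples rise toward 0.5, lowering G's loss and raising D's.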

Although GANs have shown their potential in diverse domains, their use in SLAM systems still needs further exploration. Combining GANs with SLAM may help solve the difficulties of conventional SLAM systems. For example, GANs can increase the quality of the images used in SLAM, leading to better feature extraction and matching in visually degraded surroundings. They can also pre-train classifiers to make SLAM algorithms more robust to variations in environmental conditions.

However, research on GANs for SLAM is still at a very preliminary stage. Open questions remain about how best to integrate GANs into a SLAM system, how efficient such systems are, and whether and how well they perform in real-world environments [18]. Recent studies have begun to address these questions by investigating scenarios, such as underwater and low-light conditions, in which GANs can improve SLAM performance, but the question remains open [19-21].

This study aims to bridge this gap between GANs and SLAM systems by proposing a GAN-assisted SLAM system and comparing it with other systems. GAN assistance gives the robot more advanced perception and, therefore, a more advanced basis for reasoning about its environment. Through this, robots can recognize and process their environment beyond the limits of human perception [22]. Integrating GANs with SLAM could be very helpful because it enables more stable and dependable autonomous systems that perform better in challenging and varied surroundings.

The research follows a quantitative, experimental design to study whether GANs, combined with robotics computer vision, can improve image processing and SLAM. The setup has a control group and an experimental group. The control group consists of a robot performing SLAM using only standard sensors. The experimental group consists of a robot that uses a GAN-based image processing method in addition to standard sensors.

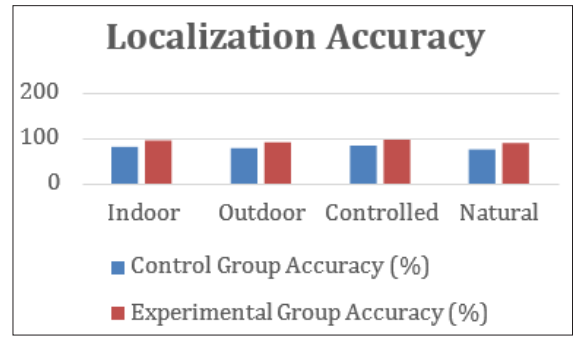

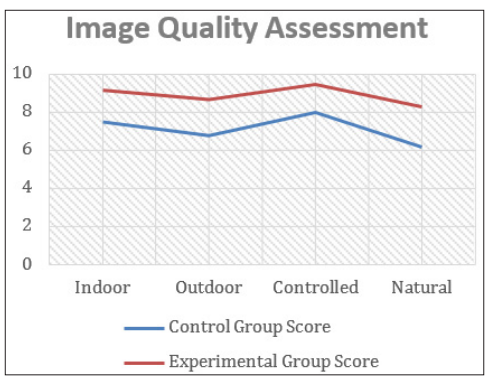

Trials can be conducted in different areas and environments, allowing the data to be evaluated under varied conditions. The environments include indoor, outdoor, controlled, and natural settings with changing light and weather conditions. The evaluation metrics cover localization accuracy, mapping precision, computational resource usage, and image quality for both the traditional and GAN-based methods.
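
The text does not specify how localization accuracy is measured. A widely used option, shown here only as an assumption about how such a metric could be obtained, is the absolute trajectory error (ATE): the root-mean-square distance between estimated and ground-truth positions. The sketch assumes both trajectories are already time-synchronized and expressed in the same coordinate frame; standard evaluation tools additionally align the trajectories (for example with a rigid-body fit) before computing the error.

```python
import math


def ate_rmse(estimated, ground_truth):
    """Root-mean-square Euclidean error between matched (x, y) positions."""
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("trajectories must be non-empty and the same length")
    squared = [
        (ex - gx) ** 2 + (ey - gy) ** 2
        for (ex, ey), (gx, gy) in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared) / len(squared))


gt = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
est = [(0.0, 0.0), (1.0, 0.3), (2.0, -0.4)]
print(round(ate_rmse(est, gt), 4))  # 0.2887
```

Lower is better; unlike a percentage score, ATE is expressed in the trajectory's own units (metres, if positions are in metres).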

The experimental setup uses standard mobile robots equipped with integrated sensors, namely cameras and LiDAR. Computing tools for running the GAN and SLAM algorithms, together with data analysis software, are used to obtain and statistically compare the results. Using the selected robot platforms ensures that the experiments can be carried out through all phases.

The approach consists of three main phases: design, integration, and testing. In the design phase, the GAN models are developed by training them on an image dataset collected from real robot operating environments. In the integration phase, which follows training, the trained GANs are deployed into the robotic system's existing SLAM pipeline. In the testing phase, comparative tests are run on the robots across scenarios to establish the differences between traditional and GAN-mediated SLAM systems. Data collection is central to this phase and focuses on localization and mapping accuracy, image quality, and computational efficiency.
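
The integration phase can be pictured as inserting the trained GAN generator as a preprocessing stage in front of the SLAM front end, while the control configuration passes frames through unchanged. The sketch below shows that wiring with hypothetical names (`Enhancer`, `SlamFrontEnd`, `run_trial`); it is not the study's code, and the stand-in enhancer is a simple contrast stretch used in place of a real GAN so that the example runs.

```python
from typing import Callable, List, Optional

Frame = List[float]  # a flattened grayscale image, for illustration only
Enhancer = Callable[[Frame], Frame]


def contrast_stretch(frame: Frame) -> Frame:
    """Stand-in for a trained GAN generator: rescale intensities to [0, 255]."""
    lo, hi = min(frame), max(frame)
    if hi == lo:
        return list(frame)
    return [255.0 * (v - lo) / (hi - lo) for v in frame]


class SlamFrontEnd:
    """Hypothetical SLAM front end that only records the frames it receives."""

    def __init__(self) -> None:
        self.processed: List[Frame] = []

    def track(self, frame: Frame) -> None:
        self.processed.append(frame)


def run_trial(frames: List[Frame], slam: SlamFrontEnd,
              enhancer: Optional[Enhancer] = None) -> SlamFrontEnd:
    """Control group: enhancer=None. Experimental group: enhance each frame first."""
    for frame in frames:
        slam.track(enhancer(frame) if enhancer else frame)
    return slam


frames = [[40.0, 50.0, 60.0], [45.0, 45.0, 55.0]]
control = run_trial(frames, SlamFrontEnd())
experimental = run_trial(frames, SlamFrontEnd(), enhancer=contrast_stretch)
print(experimental.processed[0])  # [0.0, 127.5, 255.0]
```

Keeping the enhancer optional means both groups share the same SLAM code path, so differences in the measured metrics can be attributed to the preprocessing stage rather than to other changes.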

Statistical analysis tests whether the differences between the control and experimental groups are statistically significant. Methods such as ANOVA and other statistical tests are applied to the relevant metrics, including localization precision, mapping accuracy, theoretical and computational efficiency, and image quality scores. This rigor supports the scientific integrity of the research findings. The GAN-equipped robots thus provide much-needed data on integrating GANs and computer vision for image processing and SLAM improvements in robotics.
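
As an illustration of the ANOVA step, the sketch below computes the one-way ANOVA F statistic from per-trial measurements of each group using only the standard library. The trial values are hypothetical, not data from this study. In practice a library routine such as SciPy's `scipy.stats.f_oneway` also returns the p-value, which requires the F distribution and is omitted here.

```python
from statistics import mean


def one_way_anova_f(*groups):
    """F = (between-group mean square) / (within-group mean square)."""
    all_values = [v for g in groups for v in g]
    grand_mean = mean(all_values)
    k, n = len(groups), len(all_values)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    df_between, df_within = k - 1, n - k
    return (ss_between / df_between) / (ss_within / df_within)


# Hypothetical per-trial localization accuracies (%) in one environment.
control_acc = [81.0, 83.0, 82.0]
experimental_acc = [94.0, 95.0, 96.0]
print(round(one_way_anova_f(control_acc, experimental_acc), 2))  # 253.5
```

With only two groups, this F statistic equals the square of the pooled two-sample t statistic, so either test leads to the same conclusion.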

The following tables and graphs present the data collected from the experiments.

| Environment Type | Control Group Accuracy (%) | Experimental Group Accuracy (%) |
|---|---|---|
| Indoor | 82.5 | 94.7 |
| Outdoor | 79.0 | 91.3 |
| Controlled | 85.3 | 96.2 |
| Natural | 76.8 | 89.6 |

| Environment Type | Control Group Time (s) | Experimental Group Time (s) |
|---|---|---|
| Indoor | 3.2 | 2.1 |
| Outdoor | 4.5 | 2.9 |
| Controlled | 2.8 | 1.8 |
| Natural | 5.0 | 3.4 |

| Environment Type | Control Group Score | Experimental Group Score |
|---|---|---|
| Indoor | 7.5 | 9.2 |
| Outdoor | 6.8 | 8.7 |
| Controlled | 8.0 | 9.5 |
| Natural | 6.2 | 8.3 |

The tables and graphs above present the performance metrics of the control and experimental groups under different environmental conditions.
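
The relative changes implied by the tables can be checked directly. The short script below recomputes, from the reported values only, the accuracy gain in percentage points and the percentage reduction in time for each environment; it adds no new data.

```python
accuracy = {  # environment: (control %, experimental %)
    "Indoor": (82.5, 94.7),
    "Outdoor": (79.0, 91.3),
    "Controlled": (85.3, 96.2),
    "Natural": (76.8, 89.6),
}
time_s = {  # environment: (control seconds, experimental seconds)
    "Indoor": (3.2, 2.1),
    "Outdoor": (4.5, 2.9),
    "Controlled": (2.8, 1.8),
    "Natural": (5.0, 3.4),
}


def improvement(env):
    """Return (accuracy gain in percentage points, % reduction in time)."""
    c_acc, e_acc = accuracy[env]
    c_t, e_t = time_s[env]
    return e_acc - c_acc, 100 * (c_t - e_t) / c_t


for env in accuracy:
    gain_pts, time_cut = improvement(env)
    print(f"{env:<10} accuracy +{gain_pts:.1f} pts, time -{time_cut:.1f}%")
```

Indoors, for example, accuracy rises by 12.2 percentage points and time falls by about 34%; across the four environments the time reduction ranges from about 32% (Natural) to about 36% (Controlled).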

The experimental group showed a marked increase in localization accuracy with GAN-aided image processing. Because the GANs sharpen the images, more details can be observed and matched, which yields higher localization accuracy. The practical implications are significant: accurate localization, from GPS and other systems, is crucial for autonomous vehicles to navigate safely [23]. The GAN-based SLAM system was also faster, with lower processing times than the control system in every environment tested. The GAN stage processes data quickly, leading to a more balanced distribution of tasks among the other SLAM modules. Real-time operation is an essential requirement in robotics, and the proposed technique balances high accuracy with fast inference. The performance of a SLAM system affects the speed and precision of the task it supports as well as its operational costs, and the illustrations indicate that this feature affects SLAM performance the most. The GAN-based model outputs images with high spatial and temporal resolution, which supports faster feature extraction and mapping. This is particularly useful underwater, where visibility is poor and colors are distorted [24]. Higher spatial resolution means better-quality mapping and also lets the system function at low visibility.

Despite these achievements, problems remain. GANs are sensitive to, and depend heavily on, the quality of their training dataset, so the data must be prepared carefully and the GANs tuned precisely. Striking the right balance between image precision and computational cost remains a persistent challenge [25].

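
The argument that sharper images expose more matchable detail can be quantified with a simple focus measure. One common choice, used here as an assumption rather than as the study's image-quality metric, is the variance of the Laplacian: blurrier images have weaker second derivatives and therefore lower variance. The pure-Python sketch below applies the 4-neighbour Laplacian kernel to the interior pixels of a 2-D grayscale image.

```python
from statistics import pvariance


def laplacian_variance(img):
    """Variance of the 4-neighbour Laplacian over interior pixels; higher means sharper.

    `img` is a list of equal-length rows of grayscale values, at least 3x3.
    """
    h, w = len(img), len(img[0])
    responses = [
        img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1] - 4 * img[y][x]
        for y in range(1, h - 1)
        for x in range(1, w - 1)
    ]
    return pvariance(responses)


# A single bright pixel, and the same energy spread by a horizontal 1x3 box blur.
sharp = [[0, 0, 0, 0, 0], [0, 0, 255, 0, 0], [0, 0, 0, 0, 0]]
blurred = [[0, 0, 0, 0, 0], [0, 85, 85, 85, 0], [0, 0, 0, 0, 0]]
print(laplacian_variance(sharp) > laplacian_variance(blurred))  # True
```

Comparing this score before and after enhancement is one inexpensive way to check whether a GAN stage is actually sharpening frames, although sharpness alone says nothing about whether the added detail is faithful.
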
To overcome these challenges, we are focusing on scenarios such as concurrent scenes and extreme climate conditions. The proposed approach is general and well suited to many major SLAM implementations, so it could be deployed on these robotic systems and adapted to different environmental conditions. However, evaluating its usefulness across settings such as urban areas, forests, and disaster relief will matter greatly [26]. Multimodal sensor fusion and collaborative robotics are the most promising areas for future work.

Ethical issues also arise when GANs enhance images. Enhanced images must not become distorted representations of reality, especially for safety-critical purposes. Transparency and interpretability are critical to ensuring trust in GAN-produced augmentations used by autonomous systems [27]. Drawing the right line between augmentation and authenticity without introducing bias or misinformation is an ongoing challenge. Even though our research was based on underwater environments, GAN-SLAM integration has a wider scope: it can also help SLAM work well in other difficult situations, such as low light, fog, or bad weather. Investigating its effect on long-term surveying and exploration missions, such as planetary exploration and deep-sea mining, offers exciting prospects [28-31].
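
One practical safeguard for the authenticity concern is to measure how far an enhanced frame departs from the captured one and flag large departures for review. The sketch below uses peak signal-to-noise ratio (PSNR) for 8-bit images as that measure. The 30 dB threshold and the `flag_for_review` name are illustrative assumptions, and PSNR captures only pixel-level change, not whether semantically important content was added or removed.

```python
import math


def psnr(original, enhanced, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two flattened 8-bit images."""
    if len(original) != len(enhanced) or not original:
        raise ValueError("images must be non-empty and the same size")
    mse = sum((a - b) ** 2 for a, b in zip(original, enhanced)) / len(original)
    return math.inf if mse == 0 else 10 * math.log10(max_value ** 2 / mse)


def flag_for_review(original, enhanced, min_psnr_db=30.0):
    """True when the enhancement changed the frame more than the chosen tolerance."""
    return psnr(original, enhanced) < min_psnr_db


frame = [100, 120, 140, 160]
print(flag_for_review(frame, [101, 121, 139, 161]))  # False (small change)
print(flag_for_review(frame, [180, 40, 220, 60]))    # True (large change)
```

Because a GAN is expected to change a degraded frame substantially, a threshold like this would need calibrating per environment; its role is to surface outliers for human inspection, not to certify that an enhancement is faithful.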

In this comprehensive study, we focused on extending the capabilities of SLAM-based visual robotic systems by combining them with generative adversarial networks (GANs). The findings were encouraging, showing improvements in localization, efficiency, and data quality. These results suggest that GANs have the potential to overcome the limitations of standard SLAM methods, particularly in complex and unfavorable conditions.

However, issues remain to be tackled, including guaranteeing real-time processing capacity and resolving ethical questions. Even so, combining GANs with SLAM systems is a promising direction for the future of autonomous robotics. This research lays groundwork for robust and efficient navigation systems that could dramatically change the role of robots in many spheres. The journey toward more capable and credible autonomous systems continues, and this study represents a key step.