Author(s): <p>Anvesh Gunuganti</p>

Federated Learning (FL) serves as one of the groundbreaking approaches in the present society, particularly in smart mobile applications, for designing a distributed environment for clients' model training without compromising data ownership. This paper narrows down the focus to how FL emerged, how it fits in distributed systems, and its usefulness in different fields. Research findings derived from thematic analysis include FL's contribution to improving the functionality of mobile applications and managing data privacy issues. Recommendations for the actual FL application underline such aspects as data management and protection. Optimization, privacy, and novelty areas of FL are the areas for further study in the field, as per the conclusion of the study.

Federated Learning is currently a robust strategy in a world of advanced data-dependent technologies, especially when it comes to the mobile application setting [1]. FL emerged as a novel approach in machine learning, as the centralized approach to the models' training on multiple devices while maintaining the privacy of users' data. FL, in essence, allows for distributed learning across multiple parties without the need to store the raw data at a central location, hence solving key issues regarding data privacy and regulative compliance that have long been a challenge with distributed systems.

Currently, the world is experiencing information warfare, and one of the phenomena of change in machine learning model training is Federated Learning. FL stands alone and serves as the innovation format that is radically different from the traditional style of model training [2]. Compared with other strategies in which data is combined in a centrally managed database, FL enables independent learning of various devices and ensures the confidentiality of users’ data. The emerging pattern for the training process opens the way to addressing the conventional issues arising from ML models’ decentralization, such as data ownership and compliance. Because of the trait of decentralizing model training and eradicating the need for data centralization, FL creates new opportunities in the machine learning area for approaches designed to maintain clients’ data security on the same level as model flexibility and scalability [3].

Therefore, FL has the ability to unlock mobile applications and expand the potential of other industries based on the findings of the analysis, making the future focused on the introduction of innovations and setting up accountability.

The significance of Federated Learning (FL) goes beyond the technological level and reaches toward consistent innovation on the heterogeneous web of distributed computation [4]. In the context of mobile app development, which is constantly changing and evolving with a focus on data protection and device performance enhancement, FL stands as a breakthrough approach. Besides boosting the effectiveness of mobile applications, FL decentralized model training acts as a guarantee for user data protection. These twin objectives demonstrate that FL has a central function in steering the challenges of today's dispersed systems. When the question is about the protection of users' data and the chase of top efficiency is the core value, FL appears to be the perfect solution that takes into consideration both the technical needs and users' requirements. Thus, FL embodies the propensity to revolutionize how distributed systems are perceived and implemented, moving into the future of mobile app development, accompanying data protection and performance.

The objectives are numerous and broad in scope, which is appropriate for the purpose of providing an overview of the state of research at the intersection of Federated Learning (FL), mobile app performance, and data privacy. Our main research question is not only to analyze how FL improves the efficiency of mobile applications and acts as a reliable defender of users' personal data disclosure but also to define possibilities for further development of innovations in this sphere. In this paper, we strive to present a detailed analysis of major concepts and findings to explain the intricate interconnection system, the primary focal FL in the context of mobile application development, and potential general implications for distributed systems. With this analysis, we are not only trying to explicate FL's possibilities of change but also offering considerations that can be helpful for the conversation about FL's uses in the creation of mobile applications. Thus, it is intended to contribute to the creation of a clear roadmap to better decision-making and thoughtful progress in this fast- growing area, which will lay a foundation for a more solid environment for further advancements to take place.

How does Federated Learning improve mobile app performance while preserving data privacy?

The literature review on Federated Learning (FL) and its implications for mobile app performance and data privacy reveals numerous studies of given research investigations that have delineated attempts to learn about the complexities of FL. Scholarly research also emphasizes FL's capacity to transform the learning process, focusing on the issues that are pertinent to the development of mobile applications, such as data protection laws and application efficiency. The concepts to be explained include client-server architecture, federated averaging algorithms, and privacy-preserving techniques, about which FL contributes to enabling collaborative learning among non-centralized devices while maintaining users' data confidentiality [5]. In addition to that, all the FL applications across the healthcare domain, finance, and telecommunication industry confirm the effectiveness of this method in real-life problems. In the ever-expanding field of research in this area, possible research opportunities for the future, including Algorithm improvement, Systems scalability, and Federated systems, could serve as promising directions for improving FL methodology and solutions.

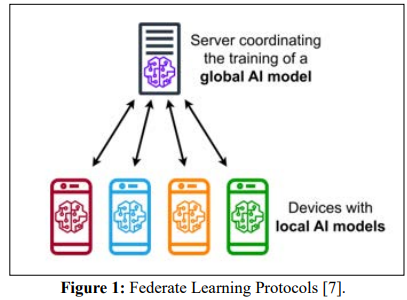

FL occupies a unique and highly important place in the context of the development of new approaches in the sphere of machine learning and artificial intelligence (as shown in figure 1), filling the role of the new efficient paradigm for model training based on decentralized devices and, at the same time, protecting the sacredness of personal data [6]. This paper aims to explore the nature of FL, how it has developed over the space of three years, and its applications across various fields. It has drawn its progression from the steady flow of innovation in privacy- preserving methodologies, optimizations of algorithms, and basically the growth of availability of open source platforms, all of which are helpful in defining the broad range of possibilities of DL. It is crucial to comprehend FL's development process if one is to establish its present state and define the future course of FL in the context of the growing complexity of distributed systems.

Key concepts and components of Federated Learning (FL) in points:

Client-Server Architecture: FL maintains a client-server model where numerous clients, such as the client interface, a mobile device, or an IoT device, work together with the server for model updates. This architecture enables very frequent and efficiently structured model updates, in which each participating client contributes its local data to build the global model without exposing the raw data outside [8]. The central server basically helps collect updates from the models that are distributed to different clients, hence making collaborative learning possible while maintaining the privacy of the data.

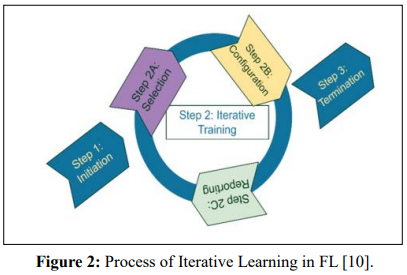

Federated Averaging (FedAvg): FedAvg can be regarded as a foundation for the FL framework, as it allows the collection of model updates from clients while keeping the data local [9]. This algorithm involves several iterative steps: Like, every client receives a model from the central server, which is the shared model all clients start with. Then, the clients individually perform local model training on their received datasets and develop new model parameters. These local updates are securely aggregated by the central server, usually via methods like federated averaging, where the received updates are averaged to come up with a better model. This works because after the global model has been updated, it is returned to the clients for more rounds of training. This is done by using FedAvg, where the actual data is never transmitted to the server, but model parameters are uploaded (as shown in figure 2).

Privacy-Preserving Techniques: Different privacy- preserving approaches used in FL to ensure that the user data are secured during the model's training are as follows. All these techniques are very important in maintaining data security and, more importantly, meeting the set rules and regulations in dispersed learning environments. The differential Privacy technique randomizes the learning process to ensure that there is no leakage of information from individual samples used in the learning process [11]. Thus, adding noise to the model updates and differential privacy prevents the training process from disclosing sensitive information about the user. To ensure that the client's data is kept secret, the use of secure aggregation protocols comes in handy by aggregating model updates of the clients. Such procedures let the central server sum the encrypted model updates without getting the raw data, which will infringe on the user's confidentiality.

FL has seen widespread adoption of applications and usage in various fields and domains, proving its capability and viability in practice [1]. Some notable applications and use cases of FL include:

Healthcare: Federated Learning leverages distributed healthcare institutions' cooperation on model training with sensitive medical data for healthcare analytics and personalized medicine breakthroughs. FL allows healthcare organizations to harness the patient's own data that originated from different sources and, in a way, maintain data confidentiality. Using information from heterogeneous data sources, FL enables the construction of decision- making models for disease diagnosis, treatment planning, and prognosis of patient outcomes. In addition, FL helps in improving the possibilities for the creation of individual treatment management plans for client patients and the organization of healthcare services, hence growing the efficacy of the process and the results of help and treatment for patients [1].

IoT Devices: In particular, Federated Learning allows smart IoT devices to collaboratively learn from distributed data sources and make intelligent decisions as well as predict failures in smart environments. FL can effectively enable IoT devices to train machine learning models with locally produced data simultaneously without the need to send the actual data to third parties. Thus, the implementation of FL for learning from distributed sources of information enables IoT devices to enhance performance and optimality in real- time conditions. The use of FL in IoT involves the analysis of data from many sensors that are situated in various gadgets to be able to predict signs of failure or breakdown of equipment to ensure effective maintenance and recurrent problems are prevented [1].

Mobile App Development: Federated Learning, which defines a new approach to the development of mobile applications, is distinguished by the enhancement of application performance through decentralized model training and by the protection of users' data from centralization, thereby enhancing users' experiences and facilitating compliance with legal requirements [1]. FL helps mobile application developers fine-tune machine learning models locally on the user's device without transferring data to another server. Due to the model updates being distributed across the participating devices and the local updates being aggregated in a privacy-preserving fashion, FL reduces the amount of central data aggregation and processing that is required. It not only benefits the training process of the model but also increases the performance of the mobile application while at the same time not infringing on data privacy or regulatory compliance to encourage the users' trust and satisfaction.

The themed analysis of Federated Learning (FL) and the performance of mobile apps in the setting of current data- focused technologies and mobile app development constitute a significant study. The focus of this analysis is on laying out the complex relationship between FL, data privatization, and performance improvement across the spectrum of mobile applications. By exploring media richness and other related elements, the findings of this study explain how FL contributes to mobile app development and maintains users' data privacy. It is our hope that through this thematic analysis, we will add to the literature on the effects of FL on the performance of mobile applications and extend the discussion on FL applicability in distributed systems.

Thematic analysis is a process used to make comparisons and derive themes [12]. This approach is especially valuable when studying very diverse and often nonlinear processes like FL and the effects of this technology on the performance of mobile applications. We will now proceed to observe and analyze the case study on FL and mobile app challenges in a thematic manner, with a view to identifying the major issues and trends present therein.

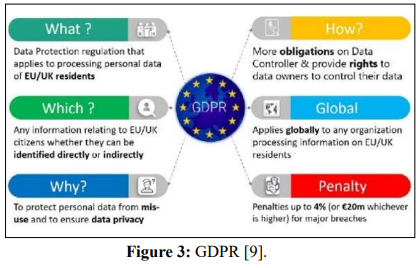

This case study focuses on Federated Learning (FL) and its application in addressing issues arising from the development of mobile applications, mainly data privacy and performance [1]. Thus, it highlights the importance of such data governance regulations as GDPR and how data silos can limit opportunities to leverage multiple training data needed for app improvement. FL appears as a decentralized approach in which decentralized devices collaboratively train a shared model while respecting user privacy by applying differential privacy and secure summation methods. The effectiveness of FL is evident in the cases of healthcare, finance, and telecommunications, which suggests the possibility of FL being, used in future applications. The case study also mentions possible directions for future FL research, pointing at the development possibilities for scalability and the improvement of algorithms. Figure 3 illustrates these roles and outlines the responsibilities of these roles, particularly the Data Controllers are obliged to protect data, while Data Processors must process data in accordance with the Data Controller’s instructions; Data Subjects have rights over their data.

Application to Federated Learning according to the case study: Data Privacy and Governance

Challenges in Data Privacy: The case study focuses on the importance of data management and the necessity of adhering to laws like GDPR in the context of mobile app development. It explains that adherence to these rules and regulations is raising issues of data privacy and sharing [5].

FL as a Solution: Federated Learning is characterized as a means to overcome data privacy issues in the process of creating mobile apps. FL assists in tuning models across a number of devices in work collectively without sharing the raw data that might breach user privacy, hence meeting the privacy requirements.

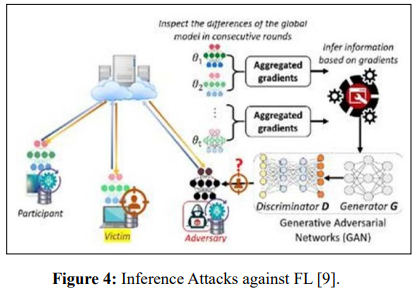

Figure 4 demonstrated the conceptual diagram of inference attacks against FL using GANs at a high level of abstraction. In these attacks, GANs are used to exploit the vulnerabilities in the FL model and allow the attackers to deduce information from the shared model updates thus violating the data privacy.

Issues with Data Silos: The corresponding case describes the issues arising from the presence of data silos in the mobile application development context, which hinders access to variant and profuse training data. The original article then explains the negative impact that arises from the limited exchange of data and hinders the functioning of machine learning models and applications.

FL's Role: The problem of data silos is solved by Federated Learning since it allows a number of separated devices to be coordinated in model training without transmitting the raw data among all of them [8]. In particular, this makes more data available for the models used in machine learning and improves their performance as well as the scope of the given mobile applications.

FL's Efficiency: The attribute of decentralization is introduced as one of the peculiarities that can strengthen the performance of mobile apps when using Federated Learning [2]. The case study explains how FL enhances model training efficiency since updates of the model are shared with every connected device and received as private updates from other devices.

Applications in Industrial Engineering: Several case studies and example applications show that FL can facilitate the enhancement of the performance of products, such as mobile applications, but it also protects data and patients' privacy. Pertaining to this, FL has been implemented in various domains such as healthcare, finance, and telecommunication, among others [1].

Techniques Used in FL: The case also presents several privacy methods that are used in Federated Learning approaches, including differential computing and security assembling [9]. These techniques guarantee the privacy of the user data during model training to mitigate issues on data use and regulatory compliance.

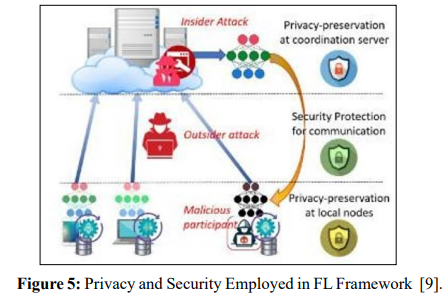

Addressing Privacy Concerns: Therefore, with the help of privacy protection measures, Federated Learning minimizes privacy and legal risks to become applicable for many privacy- sensitive fields, including healthcare and mobile environments [3]. Figure 5 shows the state of privacy and security in a FL architecture based on the measures put in place. Some of the measures include encryption of the data, aggregation of the model updates and Differential privacy methods.

This thematic analysis is useful in shedding light on the advantages of Federated Learning and its contribution to handling the issues with data privacy and the efficiency of mobile applications. While exploring the case, several themes have been found, and their significance for mobile app development is discussed below. Thus, the study's results also demonstrate the usefulness of FL as a privacy- preserving framework and identify FL applications for improving mobile app efficiency and adhering to data protection legislation. This paper offers insights concerning the features of FL and its potential in industrial engineering and data science to advance subsequent studies and scenarios in different sectors.

A thematic analysis of the credentials provided significant information about the implementation and potential of Federated Learning in handling data governance, data privacy policies, and the performance of the mobile application. FL presents itself as a revolutionary approach to solving the problem as it enables distribution model training across diverse devices and keeps the users' data safe at the same time. The identified themes include FL's effectiveness in enhancing the performance of the applications, subscribing to the organization's problem of data silos, and employing privacy-preserving approaches. Such evidence establishes FL as a valuable application in several domains, such as health care and finance, where it has shown effectiveness in optimizing app performance without infringing on users' data protection rights. The authors focus on future work that extends FL for more research and application on distributed systems for developing practical mobile apps, which can apply FL for improving machine learning algorithms' performance, scalability, and optimization.

The thematic analysis unveiled several important themes and implications with regard to FL and its impact on the performance of mobile applications and data privacy. Such themes include the importance of FL in dealing with data governance and privacy regulations, the effectiveness of FL in dealing with silos in data, how FL offers an optimal solution to mobile applications, and the privacy preservation methods considered in FL. Out of an explorative analysis of the case study presented above, the following themes were identified as critical for anticipating the change potential of FL in distributed systems and mobile app development.

These results reinforce the need to employ FL as a reliable privacy- preserving approach to the contemporary context of advanced technological advancements in data processing. As the means of performing model training across multiple distributed devices while protecting users' data, FL presents a promising solution to the challenges of mobile app creation. This discussion talks about the FL's significance and knowledge for different sectors, specifically healthcare, finance, and telecom, where FL has beneficial outcomes to enhance the app's performance while also protecting consumer data privacy. Further, the discussion provides insight into the possible provisions for further FL and its future work prospective and practical applications, discussing the need to progress in searching for the best methods for further optimization, the influence of the giant scales, and efficient systems to implement FL. Besides, the findings and discussion can enrich the knowledge about FL and its application for developing distributed systems and/or mobile apps in the study area.

In conclusion, this review has enlightened the reader on the importance of FL in addressing these complex challenges in mobile application development, especially in data privacy and performance. Here FL can be seen as the prospective solution to the problems of data governance regulations like GDPR or the issue of data silos caused by the distributed nature of FL through its inherent attributes like privacy preservation. Besides, the ability of FL to train models cooperatively with multiple devices and protect the privacy of user information makes it useful in improving the performance of an app, as well as promoting data responsibility. Furthermore, the following practical suggestions indicate that the organization needs to pay attention toward the actual implementation of FL: - User awareness and company transparency have to be adopted – The security element has to be invested – Collaboration with social networking sites has to be initiated. In addition, there is a need to pursue further research efforts aimed at enhancing algorithmic optimization's development and incorporating new privacy-preserving solutions into FL, as well as evaluating its efficacy in new areas to work towards realizing its potential to trigger the creation of breakthrough innovations in the mobile application and distributed system space. In these areas, FL, as a new computing paradigm, is ready to transform the direction of data science applications and guarantee the proper use of data in the context of growing globalization.

The review has demystified the need for Federated Learning (FL) in order to solve some of the most daunting challenges associated with the development of today's iOS and Android apps, especially regarding their handling of users' data and their efficiency. FL solves the problem of data governance regulation, such as GDPR, by providing a decentralized approach to train a shared model cooperatively with the help of distributed devices while the privacy of the users' data is protected through differential privacy and secure aggregation. Taking these FL applications in various domains such as health care, finance, and telecommunication, some of the findings have revealed how effective FL works in addressing the issue of data silos, improving app performance and navigation, and being responsive to modern data privacy legislation.

For organizations embarking on the implementation of FL in mobile app development, several practical recommendations emerge from the findings:

Future research endeavors in the field of Federated Learning should focus on advancing the following key areas: