Federated Learning: Enhancing Data Privacy and Security in Machine Learning through Decentralized Training Paradigms

Author(s): Abhijit Joshi

Abstract

Federated learning represents a transformative paradigm in the realm of machine learning by enabling models to be trained across multiple decentralized devices or servers holding local data samples without exchanging them. This approach significantly enhances data privacy and security by ensuring that sensitive information remains localized while still contributing to global model improvements. This paper delves into the technical intricacies of federated learning, examining its architecture, methodologies, and algorithms. Furthermore, it explores various applications across different industries, evaluates the challenges in implementation, and discusses potential solutions to overcome these obstacles. The impact of federated learning on data privacy and security is thoroughly analyzed, providing insights into its future scope and areas for further research.

Introduction

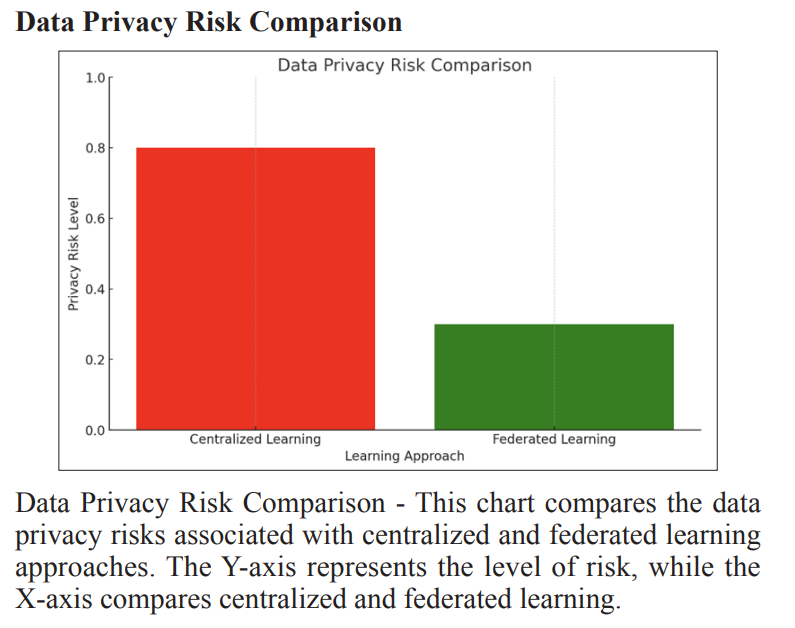

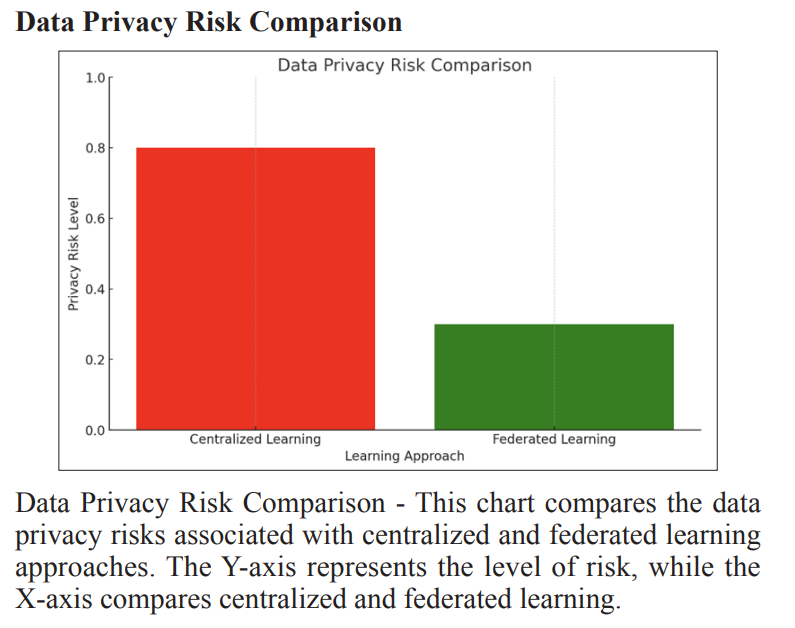

In the era of big data and machine learning, the need for robust data privacy and security mechanisms has become paramount. Traditional centralized machine learning models require data to be aggregated in a central location for training, posing significant privacy risks. Federated learning offers a novel solution by enabling the training of machine learning models directly on devices where data is generated. This decentralized approach not only mitigates privacy concerns but also reduces latency and enhances the scalability of machine learning systems.

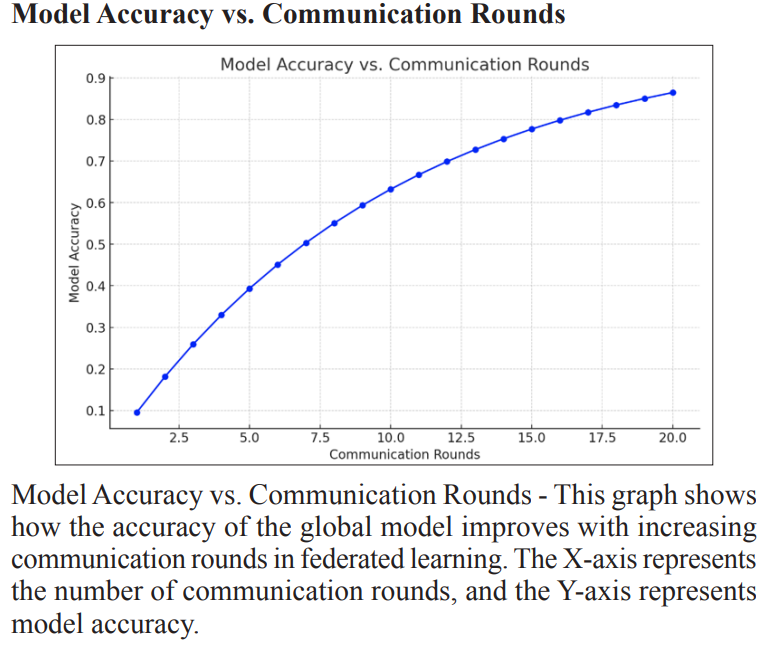

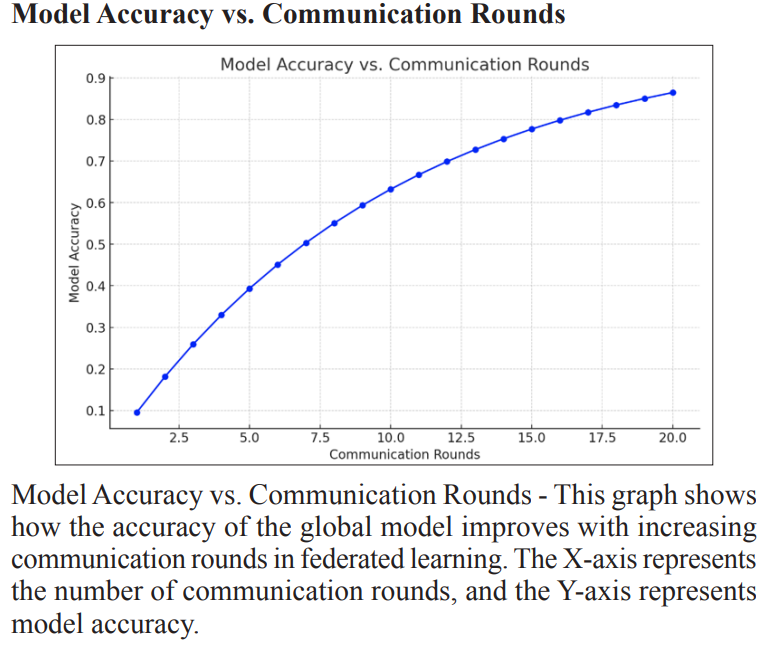

Federated learning involves multiple devices (clients) that collaboratively train a model under the orchestration of a central server. Each client computes updates to the model based on local data, and only these updates, rather than the raw data, are shared with the server. The server aggregates these updates to improve the global model, which is then redistributed to the clients. This process is iterative and continues until the model converges to an optimal state.

This paper provides a comprehensive analysis of federated learning, focusing on its potential to enhance data privacy and security. We explore the underlying architecture, key methodologies, and algorithms that drive federated learning. Additionally, we examine its applications across various industries, discuss implementation challenges, and propose solutions to address these challenges.

Problem Statement

In traditional ML, the need to aggregate large datasets in a central location for model training raises several critical issues:

- Data Privacy: Centralized data storage increases the risk of data breaches and unauthorized access. Sensitive information, such as personal health records or financial transactions, becomes vulnerable when aggregated in one place.

- Security: The centralization of data creates a single point of Cyberattacks targeting central repositories can lead to significant data loss and compromise.

- Regulatory Compliance: Regulations like GDPR and CCPA impose strict requirements on data handling, consent, and user Compliance with these regulations becomes complex when data is centralized.

- Latency and Bandwidth: Transferring large volumes of data to a central server can result in high latency and bandwidth consumption, especially in applications requiring real-time or near-real-time processing.

- Scalability: Centralized ML systems may struggle to scale efficiently as the volume of data and the number of data sources

Federated Learning addresses these issues by enabling decentralized model training. This approach ensures that data remains localized on the devices where it is generated, significantly enhancing privacy and security. Additionally, FL leverages the computational capabilities of edge devices, thereby reducing the need for data transfer and improving scalability.

Solution

Federated Learning operates on a decentralized architecture, comprising several key components and processes that collaboratively train ML models without exchanging raw data. The core elements of FL include:

- Clients: These are the devices or nodes (e.g., smartphones, IoT devices) that hold local data and participate in the training Each client performs local computations and updates the model based on its data.

- Server: The central coordinating entity (e.g., a cloud server) that orchestrates the training process. The server collects model updates from clients, aggregates them, and redistributes the updated model to the clients.

- Communication Protocol: A secure mechanism for transmitting model updates between clients and the server. Ensuring secure and efficient communication is crucial for the integrity and performance of the FL Process.

- Aggregation Algorithm: The method used to combine model updates from multiple clients into a single global model. Federated Averaging (FedAvg) is a commonly used algorithm that computes the weighted average of client updates.

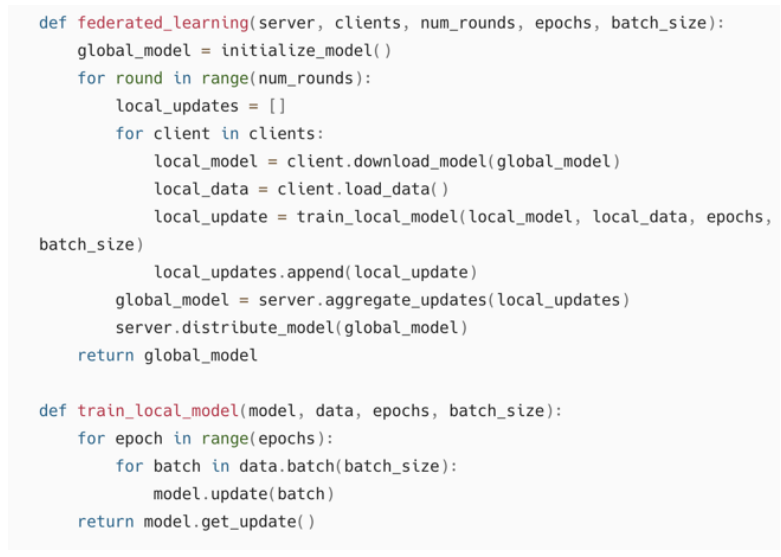

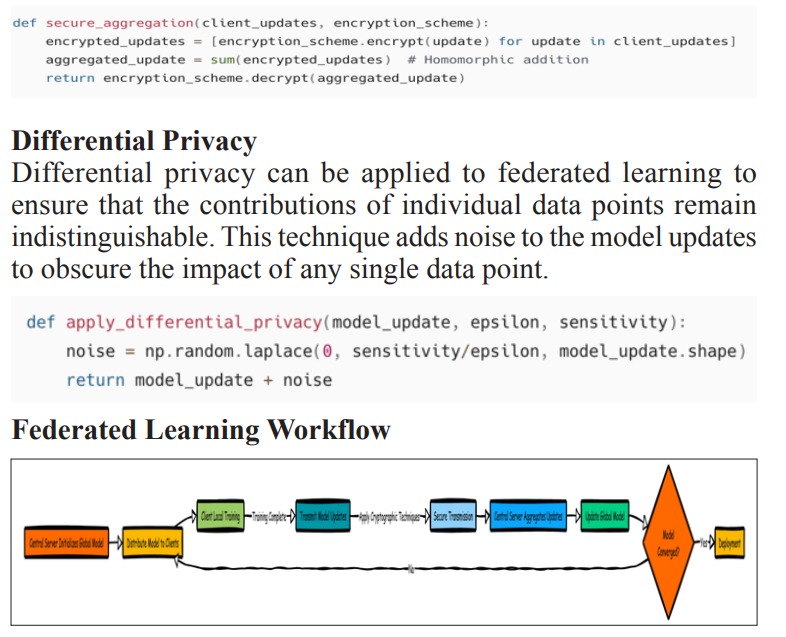

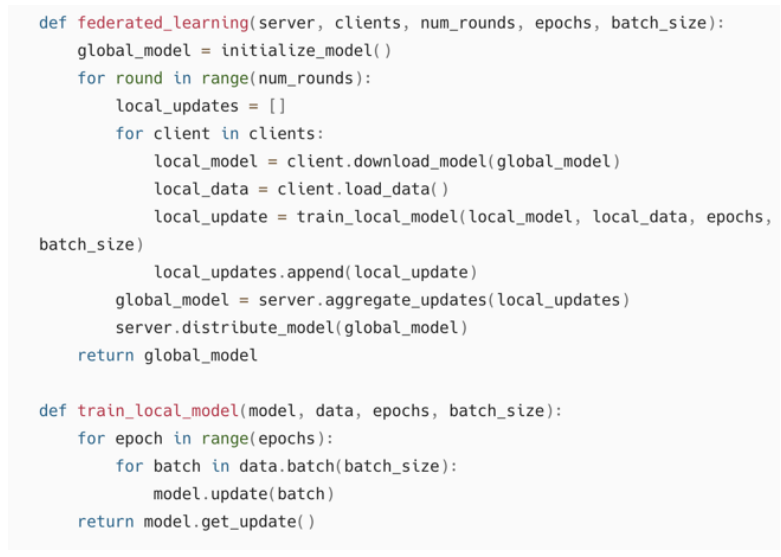

Federated Learning Process

The FL process involves several iterative steps:

- Initialization: The server initializes the global model and distributes it to all participating clients.

- Local Training: Each client trains the model on its local data for a specified number of epochs and computes model updates (gradients or weight updates).

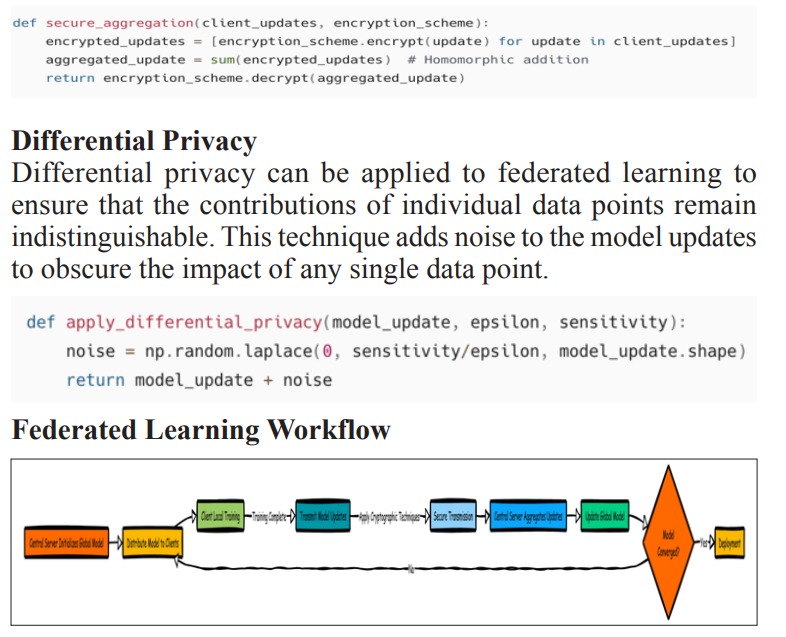

- UpdateTransmission: Clients send their computed updates to the server. To enhance privacy, techniques like secure aggregation or differential privacy can be employed.

- Aggregation: The server aggregates the received updates to form an improved global model. The aggregation can involve simple averaging or more complex algorithms to handle non- iid data distributions.

- Redistribution: The updated global model is redistributed to the clients for the next round of local training.

- Iteration: Steps 2-5 are repeated until the global model converges to an optimal state.

Pseudocode for Federated Learning

Secure Aggregation Protocol

To ensure the privacy of model updates during transmission, secure aggregation protocols can be used. One such protocol is based on homomorphic encryption, which allows computations to be performed on encrypted data without decrypting it. This ensures that the server cannot access individual model updates, only the aggregated result.

Uses

Federated learning has the potential to revolutionize multiple industries by providing a secure and efficient way to leverage decentralized data. Here are some detailed applications across different sectors:

Healthcare

- Collaborative Disease Prediction Models: Hospitals and medical research centers can collaboratively train predictive models on patient data without sharing sensitive information. For instance, federated learning can be used to develop a model for predicting disease outbreaks based on patient records from various hospitals. This enables the creation of a robust model that benefits from a wide range of data while ensuring patient privacy.

- Personalized Treatment Plans: Federated learning can help in creating personalized treatment plans by training models on local patient data. Each hospital or clinic can contribute to a global model that improves over time without exposing individual patient data.

Finance

- Fraud Detection: Financial institutions can develop fraud detection models using transaction data from multiple banks. Federated learning allows these institutions to collaboratively improve their fraud detection capabilities without exposing customer data. This can lead to more accurate detection of fraudulent activities by leveraging diverse datasets.

- Risk Management: Banks can use federated learning to improve their risk management By training on decentralized data from various sources, banks can better assess and manage risks associated with loans, investments, and other financial activities.

Smart Devices

- Personalized User Experiences: Federated learning enables the development of personalized models on smart devices such as smartphones and IoT devices. For example, a predictive text model can be trained on a user's typing data locally and improve over time without sending the data to a central server.

- Health Monitoring: Wearable devices can use federated learning to improve health monitoring algorithms by training on local data. This can lead to more accurate and personalized health insights without compromising user privacy.

Autonomous Vehicles

- Collaborative Learning for Self-Driving Cars: Autonomous vehicles can share insights from their local driving data to improve the overall model's performance. This collaborative learning approach enhances the safety and efficiency of self- driving cars by enabling them to learn from diverse driving environments and scenarios without sharing raw data.

- Traffic Management: Federated learning can be used to optimize traffic management systems by training models on data from various sources, including individual vehicles and traffic sensors. This can lead to better traffic flow and reduced congestion.

Telecommunications

- Network Optimization: Telecom companies can use federated learning to develop network optimization By leveraging data from distributed base stations, these models can optimize network performance, improve resource allocation, and enhance user experiences.

- Predictive Maintenance: Federated learning can help in predicting equipment failures and scheduling maintenance activities. By training models on local data from various network components, telecom companies can identify potential issues before they lead to significant problems.

Impact

The impact of federated learning on data privacy, security, and machine learning performance is profound. Here are some of the key benefits:

Enhanced Privacy

- Data Localization: By keeping data on local devices, federated learning significantly reduces the risk of data breaches and unauthorized access. Sensitive information remains with the data owner, enhancing overall data privacy.

- Privacy-Preserving Techniques: Federated learning can incorporate advanced privacy-preserving techniques such as differential privacy and secure multiparty computation, further enhancing the security of the training process.

Regulatory Compliance

- GDPR and CCPA Compliance: Federated learning facilitates compliance with data protection regulations by minimizing the need to transfer sensitive This approach aligns with the principles of data minimization and user consent, making it easier for organizations to comply with regulations like GDPR and CCPA.

- Auditability and Transparency: The decentralized nature of federated learning allows for better auditability and transparency in data handling processes. Organizations can demonstrate their commitment to data privacy and security to regulators and stakeholders.

Scalability and Efficiency

- Decentralized Processing: Federated learning leverages the computational power of edge devices, reducing the load on central servers and improving the scalability of ML This decentralized processing approach can handle the growing volume of data generated by modern applications.

- Reduced Latency: By processing data locally, federated learning reduces the need for frequent data transfers, leading to faster model updates and lower latency. This is particularly important for real-time applications such as autonomous driving and smart devices.

Cost Efficiency

- LowerDataTransferCosts: Federated learning minimizes the need for large-scale data transfers, resulting in lower costs associated with data storage and Organizations can leverage existing local resources more effectively, reducing the overall cost of data management.

- Efficient Resource Utilization: By distributing the computational load across multiple devices, federated learning ensures efficient utilization of available resources. This can lead to significant savings for organizations managing large- scale data infrastructure.

Scope

The scope of federated learning is vast and spans across various dimensions, from algorithm development to industry adoption. Here are some key areas to consider:

Algorithm Development

- Aggregation Algorithms: Improving aggregation algorithms to enhance the efficiency and accuracy of model Research in this area focuses on developing methods that can handle heterogeneous and non-iid (independent and identically distributed) data distributions.

- Optimization Techniques: Developing optimization techniques to improve the convergence speed and performance of federated learning models. This includes exploring adaptive learning rates, gradient compression, and other methods to enhance the training process.

Security Protocols

- Cryptographic Techniques: Developing advanced cryptographic techniques to secure model updates and aggregation processes. This includes exploring methods such as homomorphic encryption, secure multiparty computation, and differential privacy to protect data during transmission and aggregation.

- Authentication and Authorization: Implementing robust authentication and authorization mechanisms to ensure that only authorized clients participate in the federated learning This helps prevent malicious attacks and ensures the integrity of the training process.

Hardware Integration

- Edge Computing Devices: Enhancing the compatibility of federated learning frameworks with different hardware platforms, particularly edge computing devices. This involves optimizing algorithms for resource-constrained devices and ensuring efficient utilization of computational resources.

- Specialized Hardware: Exploring the use of specialized hardware, such as TPUs (Tensor Processing Units) and GPUs (Graphics Processing Units), to accelerate the training process and improve the performance of federated learning models.

Regulatory Compliance

- Data Protection Regulations: Ensuring that federated learning implementations adhere to evolving data protection This includes developing frameworks for auditing and verifying compliance with privacy standards, as well as creating guidelines for implementing federated learning in compliance with regulatory requirements.

- Ethical Considerations: Addressing ethical considerations related to data privacy, consent, and fairness in federated This involves developing policies and practices that ensure ethical data handling and promote trust among stakeholders.

Industry Adoption

- Standardization and Best Practices: Promoting the adoption of federated learning across different industries through standardization and best practices. This includes creating guidelines for implementing federated learning, demonstrating its benefits through real-world use cases, and fostering collaboration among industry stake holders.

- Interoperability: Ensuring interoperability between different federated learning frameworks and This involves developing standards and protocols that enable seamless integration and collaboration among diverse systems and organizations.

Conclusion

Federated learning represents a significant advancement in the field of machine learning by addressing critical issues related to data privacy and security. By enabling decentralized training of models on local data, federated learning minimizes the risk of data breaches and unauthorized access. The collaborative nature of federated learning enhances the scalability and efficiency of machine learning systems, making it a valuable approach for various applications.

Despite its numerous benefits, the implementation of federated learning is not without challenges. Ensuring secure communication, efficient aggregation, and compliance with regulatory frameworks are key areas that require ongoing research and development. However, the potential advantages of federated learning in enhancing data privacy, security, and machine learning performance make it a promising approach in the age of big data and machine learning [1-16].

Future Research Area

Several areas of research can further advance the field of federated learning:

- Advanced Aggregation Algorithms: Developing more sophisticated aggregation algorithms that can handle heterogeneous data and non-iid (independent and identically distributed) data This includes exploring adaptive aggregation methods that can dynamically adjust to the characteristics of the data.

- Federated Learning with Differential Privacy: Integrating differential privacy techniques to provide formal privacy guarantees while preserving model This involves developing methods to balance privacy and utility, ensuring that federated learning models are both effective and privacy-preserving.

- Secure Multiparty Computation: Enhancing the security of federated learning by incorporating secure multiparty computation techniques to protect model updates during This includes exploring methods for efficient and scalable secure computation that can handle the demands of large-scale federated learning systems.

- Federated Learning on Resource-Constrained Devices: Optimizing federated learning frameworks for resource- constrained devices such as IoT sensors and edge This involves developing lightweight algorithms and protocols that can operate efficiently on devices with limited computational and energy resources.

- Cross-Silo Federated Learning: Exploring federated learning scenarios involving multiple organizations (cross- silo) to enable collaborative model training while preserving organizational data This includes developing frameworks for secure and efficient collaboration among diverse stakeholders, as well as addressing challenges related to data heterogeneity and regulatory compliance.

References

- Kairouz P, McMahan HB, Avent B, Bellet A, Bennis M, Bhagoji AN, et al. (2021) Advances and open problems in federated learning. Foundations and Trends® in Machine Learning 4: 1-210.

- Li T, Sahu AK, Talwalkar A, Smith V (2020) Federated learning: Challenges, methods, and future directions. IEEE Signal Processing Magazine 37: 50-60.

- Bonawitz K, Eichner H, Grieskamp W, Huba D, Ingerman A, et (2019) Towards federated learning at scale: System design. Proc. of SysML Conference.

- Yang Q, Liu Y, Chen T, Tong Y (2019) Federated machine learning: Concept and applications. ACM Transactions on Intelligent Systems and Technology (TIST) 10: 1-19.

- McMahan HB, Moore E, Ramage D, Hampson S (2017) Communication-efficient learning of deep networks from decentralized data. Proc of AISTATS 3.

- Zhao Y, Li M, Lai L, Suda N, Civin D, et al. (2018) Federated learning with non-IID arXiv preprint arXiv:1806.00582.

- Geyer RC, Klein T, Nabi M (2017) Differentially private federated learning: A client level arXiv preprint arXiv:1712.07557.

- Hard A, Rao K, Mathews R, Ramaswamy S, Beaufays F, et (2018) Federated learning for mobile keyboard prediction. arXiv preprint arXiv:1811.03604.

- Smith V, Chiang CK, Sanjabi M, Talwalkar A (2017) Federated multi-task Proc of NeurIPS.

- Hu C, Jiang J, Wang Z (2019) Decentralized federated learning: A segmented gossip approach, arXiv preprint arXiv:2002.09826.

- Wang H, Yurochkin M, Sun Y, Papailiopoulos D, Khazaeni Y (2020) Federated learning with matched averaging. Proc of ICLR.

- Jeong E, Oh S, Kim H, Park J, Bennis M, et al. (2018) Communication-efficient on-device machine learning: Federated distillation and augmentation under non-IID private Proc. of NeurIPS.

- Mohri M, Sivek G, Suresh AT (2019) Agnostic federated Proc. of ICML.

- Lyu L, Yu H, Yang Q (2020) Threats to federated learning: A arXiv preprint arXiv:2003.02133.

- Xie C, Koyejo O, Gupta I (2019) Zeno: Distributed stochastic gradient descent with suspicion-based fault-tolerance. Proc of ICML.

- Konecny J, McMahan HB, Yu FX, Richtárik P, Suresh AT, et al. (2016) Federated learning: Strategies for improving communication efficiency. Proc of NeurIPS Workshop on Private Multi-Party Machine

View PDF