Author(s): Vivek Yadav

This paper examines the ethical issues raised by integrating artificial intelligence (AI) into healthcare, particularly into decision-making about patient care. Through a structured review of the current literature, it builds a conceptual framework of the ethical problems associated with AI-based diagnostic algorithms, predictive analytics, and treatment recommendation systems. The major ethical issues include algorithmic bias and equity, patient autonomy and informed understanding of the risks involved, and accountability and liability when care deviates from medical standards. The paper explains why these dilemmas matter and argues that AI-driven healthcare practice must respect the principles of beneficence, justice, and patient autonomy. By setting out these complex ethical issues, the study aims to contribute to the discussion of the responsible and ethical deployment of AI in medical decision-making.

Integrating artificial intelligence into health systems has contributed substantially to patient care, diagnosis, and treatment. The power of AI technologies lies in their potential to transform medicine by making the delivery of care faster, more accurate, and more accessible, thereby improving its quality. AI is already applied in healthcare to tasks ranging from simple patient triage to key clinical decisions. Yet as AI is deployed more widely in clinical settings, the ethics of its use in clinical decision-making has become a key concern for medical professionals, policymakers, and ethicists [1]. This paper therefore opens a discussion of the ethical issues associated with AI applied to decision-making in patient care. By reviewing the existing literature, it aims to set out the ethical dilemmas that arise where AI technology meets the ethical principles of healthcare. Understanding these implications is necessary to ensure that AI-based medical decisions are ethically sound and that patients' health is protected. Diagnostic algorithms, predictive analytics, and treatment recommendation systems are examples of the ways AI supports medical decision-making. AI-powered tools process large volumes of patient data, including medical records, radiographs, and genetic information, to help healthcare professionals reach sound diagnoses, prognoses, and treatment plans. However, the historical data used to train AI algorithms may contain systemic bias. Such bias could, for instance, lead to differences in how diseases are diagnosed and treated depending on a patient's race, gender, or socioeconomic status.
Furthermore, the opacity of many AI algorithms makes it harder to identify and mitigate bias, which in turn raises questions of accountability and transparency. Patient autonomy and consent raise a further ethical issue [2]. As AI systems take on a growing role in clinical decision-making, patients often have limited knowledge of how these systems work and may not understand how new technologies affect their care. Ensuring that patients understand the role AI plays in their treatment options, and that they give informed consent, is important for upholding their autonomy and protecting the doctor-patient relationship [3]. Assigning responsibility also becomes complicated when AI makes decisions, either on its own without a human in the loop or in cooperation with healthcare professionals. Integrating AI into patient-care decision-making nonetheless offers substantial benefits for the effectiveness of healthcare delivery.

Aim: This study examines the ethical consequences of artificial intelligence (AI) for healthcare decisions. The objectives include:

The use of AI technologies in healthcare has undoubtedly raised ethical issues that require careful review. The existing literature identifies serious challenges concerning the protection of patient identity, data security, and potential biases that can undermine the effectiveness of AI algorithms [4]. Breaches of patient confidentiality frequently arise from the collection and use of private patient data. In addition, the storage and sharing of electronic health information are vulnerable to cyberattacks and unauthorized access, which threatens patient safety and trust in healthcare systems. Ethical concerns may also arise when for-profit AI developers and vendors prioritize profits over patients' well-being, which underscores the need for ethical oversight and regulation. Addressing these issues requires cooperation among physicians, regulators, ethics experts, and technology developers. Establishing practical ethical principles and criteria for AI implementation in medical care is a crucial step toward ensuring that patients' rights, safety, and well-being remain paramount as the technology develops [5].

The literature covers many applications of artificial intelligence (AI) in medicine, with particular interest in evidence-based decision-making across medical specialties. Diagnostic algorithms built with machine learning techniques have proved highly proficient at identifying diseases from medical images such as X-rays, MRIs, and CT scans [6]. These algorithms can help healthcare professionals interpret complex radiologic images and reach correct diagnoses in less time. AI-guided clinical recommendation systems draw on patient data, including medical history, genetic information, and past treatment responses, to generate treatment recommendations tailored to the individual patient. The main goal of these systems is to maximize therapeutic benefit while minimizing side effects, thereby improving patients' health outcomes. Integrating AI into patient-care decision-making could thus transform healthcare delivery by helping to compensate for clinicians' weak points, reducing diagnostic errors, and personalizing treatment. At the same time, the ethical issues of privacy, bias, and patient autonomy that arise as AI is implemented in healthcare require careful and equitable consideration [7].

Although the ethics of artificial intelligence in healthcare is an increasingly frequent topic with a growing body of literature, research on the perspectives of different stakeholders, including patients, healthcare providers, and policymakers, remains limited. Potential biases and gray areas in AI-assisted decision-making, as they affect each of these three groups, currently receive less research attention. Moreover, there is a lack of empirical studies on the practical effects of AI technologies on the patient-physician relationship, on diagnostic decision-making processes, and on healthcare outcomes. Closing these gaps is necessary for developing comprehensive ethical guidelines and regulations to govern the use of AI in healthcare settings.

Data collection involves identifying relevant sources, such as datasets, research studies, and publications, on the use of artificial intelligence (AI) in treatment decisions. The process has two basic steps. First, diverse sources such as academic databases, healthcare institutions, and industry reports are used to acquire up-to-date and varied information. Second, the collected data are organized and analyzed using qualitative and/or quantitative approaches. Qualitative techniques such as content analysis and thematic analysis allow researchers to focus on recurring patterns, themes, and ethical concerns in the data [8]. Quantitative methods, such as statistical analysis, can be used to assess trends or relationships between variables. The study's objectives are pursued through a careful assessment of the data, supported by appropriate collection and analysis methods. The aim is to gain insight into the ethical implications of AI technologies in healthcare and to provide a basis for informed discussion of AI's responsible integration into patient-care decision-making [9].
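Thematic analysis of the kind described above is usually carried out by human coders, but a keyword pass can help locate candidate passages before manual coding. The following is a minimal Python sketch, assuming a hypothetical codebook (`CODEBOOK`) that maps each ethical theme to indicator keywords; `count_themes` is likewise an illustrative name. It counts how many documents mention each theme at least once and is a simplification, not the study's actual coding procedure.

```python
import re
from collections import Counter

# Hypothetical codebook: each ethical theme maps to indicator keywords.
CODEBOOK = {
    "algorithmic bias": ["bias", "fairness", "disparity"],
    "autonomy": ["autonomy", "consent", "self-determination"],
    "accountability": ["accountability", "liability", "responsibility"],
    "privacy": ["privacy", "confidentiality", "data security"],
}


def count_themes(documents, codebook=CODEBOOK):
    """Count how many documents mention each theme at least once."""
    counts = Counter({theme: 0 for theme in codebook})
    for doc in documents:
        text = doc.lower()
        for theme, keywords in codebook.items():
            # A theme is present if any keyword occurs as a whole word or phrase.
            if any(re.search(r"\b" + re.escape(k) + r"\b", text) for k in keywords):
                counts[theme] += 1
    return counts


docs = [
    "Algorithmic bias threatens fairness in diagnosis.",
    "Informed consent protects patient autonomy.",
    "Who bears liability when AI errs? Bias again.",
]
print(count_themes(docs))
```

Keyword matching only flags where a theme may be discussed; a human coder would still need to confirm each hit and refine the codebook as new themes emerge.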

Assessing the effects of AI systems on patient-care decision-making requires adherence to established ethical principles and frameworks. The most important of these are beneficence, non-maleficence, autonomy (self-determination), and justice. Beneficence requires using AI technologies to pursue the good of patients, while non-maleficence emphasizes minimizing the harm associated with AI-based healthcare interventions [10].

$$\text{Bias} = \frac{\text{Number of cases misclassified due to bias}}{\text{Total number of cases}} \times 100\%$$
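The bias percentage can be computed directly once cases have been labelled. The sketch below assumes that an audit has already decided which misclassifications are attributable to bias (the paper does not specify how this is determined), and it additionally applies the same formula within each patient group so that disparities become visible. The function names and the `(group, flagged)` record format are hypothetical.

```python
from collections import defaultdict


def bias_percentage(misclassified_due_to_bias, total_cases):
    """Bias = (cases misclassified due to bias / total cases) x 100%."""
    if total_cases <= 0:
        raise ValueError("total_cases must be positive")
    if not 0 <= misclassified_due_to_bias <= total_cases:
        raise ValueError("misclassified count must lie between 0 and total_cases")
    return 100.0 * misclassified_due_to_bias / total_cases


def bias_by_group(records):
    """Apply the same formula within each patient group.

    records: iterable of (group, flagged) pairs, where flagged is True when an
    audit attributed that case's misclassification to bias (assumed input).
    """
    flagged = defaultdict(int)
    totals = defaultdict(int)
    for group, is_flagged in records:
        totals[group] += 1
        flagged[group] += int(is_flagged)
    return {group: bias_percentage(flagged[group], totals[group]) for group in totals}


print(bias_percentage(12, 400))  # 3.0
records = [("A", False)] * 95 + [("A", True)] * 5 + [("B", False)] * 85 + [("B", True)] * 15
print(bias_by_group(records))  # {'A': 5.0, 'B': 15.0}
```

An overall figure can hide the fact that one group bears most of the misclassifications, which is why the per-group breakdown is included here.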

Autonomy is especially important here because it requires recognizing the patient's right to self-determination and to make personal decisions about their care, including decisions involving AI. Justice requires that the benefits of AI in healthcare be distributed fairly across social groups, so that vulnerable populations are not left behind. Healthcare practitioners and policymakers rely on these ethical principles and frameworks to navigate the complex path of AI in patient care. Ultimately, upholding appropriate ethical standards promotes good patient care [11].

Deliberately selecting relevant cases from practice, or hypothetical scenarios, enables a better understanding of AI's impact on the ethics of patient-care decision-making. These cases show how AI technologies are used in real clinical settings and the ethical problems that accompany them. A case on AI algorithms could, for example, explore their use in diagnostic imaging, addressing issues such as algorithmic bias, accuracy, and patient outcomes. A hypothetical scenario, by contrast, could walk through the ethical dilemmas raised by deploying AI-driven treatment recommendation systems, such as the need for patient consent, confidentiality, and algorithmic transparency [12]. Analyzing these cases and scenarios allows researchers to identify common problems, explore possible solutions, and develop ethical guidelines for responsible and ethical AI conduct in healthcare.

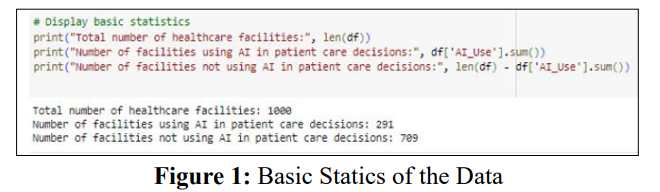

The figure presents basic descriptive statistics, such as values, variation, and outliers. Its purpose is to make the distribution, variability, and overall features of the data clear in visual form. Mean values and frequency distribution charts help visualize data patterns and trends [13].

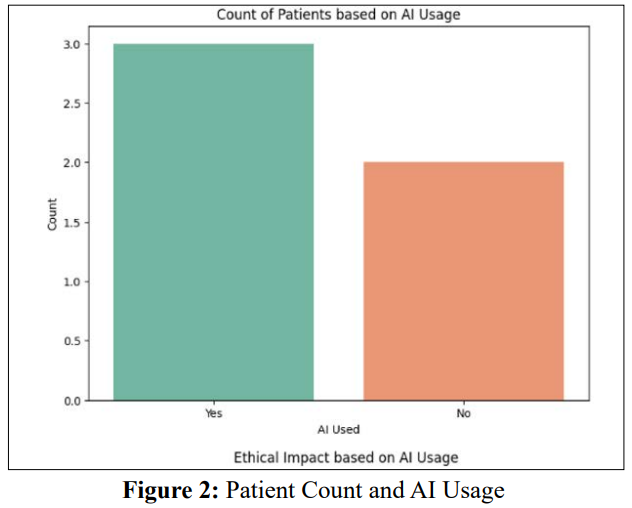

The figure may use two axes: the horizontal axis represents the number of patients treated at the AI-based healthcare facilities considered, and the vertical axis represents the extent of AI use in those facilities. It is reasonable to compare health establishments that use AI in treatment decisions with those that do not. Such a comparison could show how AI implementation has affected the number of patients treated in these facilities [14]. The trend line may rise with increasing AI use, indicating higher patient counts over time, while in other cases AI usage may remain constant even as patient numbers increase.

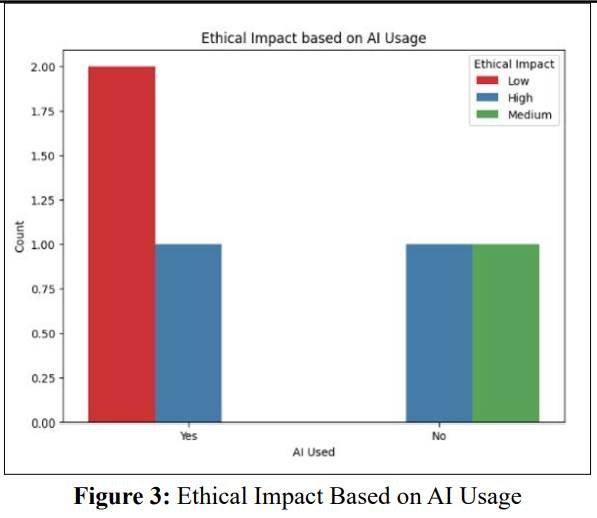

The image "Ethical Dimension by Application of AI" likely gives an overview of the key ethical issues raised by the use of AI across different areas, particularly healthcare. It probably represents the ethical complexity of AI adoption, with core issues that may include fairness, accountability, transparency, and patient autonomy. The image appears to serve as a visual tool for mapping the ethical landscape of AI use and for prompting dialogue on how serious these challenges are and how to handle them safely [15].

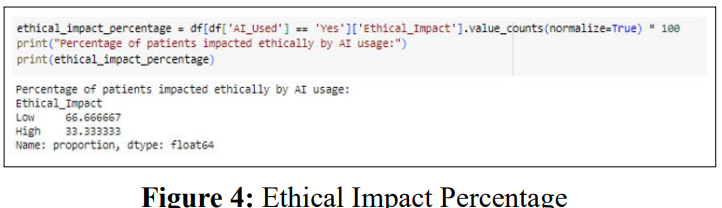

The "Ethical Impact Percentage" is intended to indicate the extent to which ethical considerations shape decision-making in contexts such as medical care and AI implementation. The Ethical Decision Index (EDI) is defined as the number of decisions made in accordance with ethical principles divided by the total number of decisions. The Ethical Impact Percentage shows how closely AI-computed treatment options align with ethical guidelines and principles. It reflects the strength of ethical principles, including respect for patient autonomy and well-being: healthcare systems with a higher Ethical Impact Percentage tend to adhere to ethical principles and put patients' welfare first when developing AI-enabled healthcare systems. Conversely, a low Ethical Impact Percentage may call for closer scrutiny and a greater commitment to ethical practice in the use of AI in patient care [16].
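The EDI definition above can be sketched in a few lines. The paper does not say how a decision is judged to be "made based on ethical principles", so this sketch assumes a hypothetical checklist (`PRINCIPLES`) built from the four principles discussed earlier, counting a decision only if every principle is satisfied. The paper also does not state the exact relationship between the EDI and the Ethical Impact Percentage; printing the EDI multiplied by 100 is an illustrative assumption.

```python
# Hypothetical checklist: the four principles discussed earlier in the paper.
PRINCIPLES = ("beneficence", "non_maleficence", "autonomy", "justice")


def is_ethically_grounded(decision, principles=PRINCIPLES):
    """Assumption: a decision counts only if every checklist principle is met."""
    return all(decision.get(p, False) for p in principles)


def ethical_decision_index(decisions, principles=PRINCIPLES):
    """EDI = decisions made based on ethical principles / total decisions."""
    decisions = list(decisions)
    if not decisions:
        raise ValueError("no decisions to evaluate")
    grounded = sum(1 for d in decisions if is_ethically_grounded(d, principles))
    return grounded / len(decisions)


ok = dict.fromkeys(PRINCIPLES, True)
no_consent = {**ok, "autonomy": False}
edi = ethical_decision_index([ok, ok, ok, no_consent])
print(edi, f"{100 * edi:.1f}%")  # 0.75 75.0%
```

The all-or-nothing rule is a deliberately strict choice; a weighted score per principle would be an alternative design, but it would require weights the paper does not provide.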

The results show that the ethical issues become complex once AI is an essential part of patient-care decision-making. A second reading of the literature reveals recurring themes: algorithmic bias, patient autonomy, and accountability.

$$\text{Informed Consent} = \frac{\text{Number of patients with adequate understanding}}{\text{Total number of patients}} \times 100\%$$
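The informed-consent percentage depends on how "adequate understanding" is measured, which the paper leaves open. The sketch below assumes, hypothetically, that each patient completes a comprehension questionnaire scored from 0 to 1 and that a score at or above a chosen `threshold` counts as adequate; both the questionnaire and the default threshold of 0.8 are illustrative assumptions.

```python
def informed_consent_percentage(comprehension_scores, threshold=0.8):
    """Informed Consent = (patients with adequate understanding / total patients) x 100%.

    Assumption: 'adequate understanding' means a comprehension-questionnaire
    score (0 to 1) at or above a hypothetical threshold.
    """
    scores = list(comprehension_scores)
    if not scores:
        raise ValueError("no patients to evaluate")
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must lie between 0 and 1")
    adequate = sum(1 for s in scores if s >= threshold)
    return 100.0 * adequate / len(scores)


print(informed_consent_percentage([0.9, 0.75, 0.8, 0.6, 1.0]))  # 60.0
```

Because the result is sensitive to the threshold, any reported figure should state the threshold alongside it.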

Algorithmic bias poses a notable challenge, because AI algorithms may entrench existing health inequalities by reproducing biases present in historical data. Addressing algorithmic bias requires a proactive commitment to detecting biases early in algorithm development and deployment, and to controlling them through continuous monitoring and evaluation to ensure fairness in medical care [17]. Patients should fully understand what AI technologies entail and what their treatment options are, so that they can make informed choices about their healthcare.
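Continuous monitoring of the kind called for above could take many forms. One simple possibility, sketched here under stated assumptions, is to compute the misclassification rate for each patient group in every monitoring period and raise an alert when the gap between the worst- and best-served groups exceeds a tolerance. The `(group, y_true, y_pred)` record format, the `max_gap` tolerance of 0.05, and all function names are hypothetical choices for illustration, not a method described in the paper.

```python
from collections import defaultdict


def error_rates_by_group(batch):
    """batch: iterable of (group, y_true, y_pred); returns misclassification rate per group."""
    errors = defaultdict(int)
    totals = defaultdict(int)
    for group, y_true, y_pred in batch:
        totals[group] += 1
        errors[group] += int(y_true != y_pred)
    return {group: errors[group] / totals[group] for group in totals}


def monitor(batches, max_gap=0.05):
    """For each monitoring period, yield (period, gap, alert).

    gap is the largest minus the smallest group error rate; alert is True
    when gap exceeds max_gap (a hypothetical tolerance).
    """
    for period, batch in enumerate(batches):
        rates = error_rates_by_group(batch)
        if not rates:
            continue  # nothing observed in this period
        gap = max(rates.values()) - min(rates.values())
        yield period, gap, gap > max_gap


def make_batch(group_errors, n=10):
    # Helper for the example: n cases per group, the first k of them misclassified.
    return [(g, 1, 0 if i < k else 1) for g, k in group_errors.items() for i in range(n)]


batches = [make_batch({"A": 1, "B": 1}), make_batch({"A": 1, "B": 3})]
for period, gap, alert in monitor(batches):
    print(period, round(gap, 2), alert)
```

In the example, the second period triggers an alert because group B's error rate (0.3) is well above group A's (0.1). An alert of this kind is a prompt for human review, not proof of bias.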

Preserving patient autonomy, supported by clear communication between providers and patients, is among the most important factors in maintaining patients' confidence in the healthcare system [18]. Determining who is responsible when AI behaves incorrectly or produces unfavorable outcomes is one of the most debated issues. Clear guidelines and policies that specify the responsibilities of doctors, AI developers, and regulators are necessary, together with a focus on patient safety and on the harms AI technologies can cause [19].

This paper has explored the ethical considerations surrounding patient-care decision-making when artificial intelligence (AI) is used. A structured review of the literature identified key ethical considerations, including algorithmic bias, patient autonomy in data gathering, and accountability. Our analysis shows that understanding how these issues affect AI-based healthcare is crucial if the ethical values of beneficence, justice, and respect for patient autonomy are to be upheld after AI is implemented. Ethical frameworks provide the clarity needed to handle AI in medicine and the many concerns it raises, keeping transparency, fairness, and patient care at the center. Case studies and expert interviews revealed further, more nuanced issues related to AI implementation, including both the opportunities and the risks of the technology. Going forward, responsible and ethical integration of AI into decision-making will require continued dialogue, further research, and policy development. Attention to the ethics of AI implementation enables health-system stakeholders to use AI to improve healthcare delivery while preserving patient welfare [20].