Author(s): <p>Mounika Kothapalli</p>

Cloud computing has been increasingly growing as it offers various benefits with the way organizations deploy, manage and scale their IT resources. Nevertheless, cost management in cloud environments is still a major challenge due to the complexity of pricing models and dynamically changing cloud services. This paper discusses, inter alia, effective strategies for optimizing cloud costs: right-sizing resources, leveraging reserved and spot instances, autoscaling, and optimum service tiers. Additionally reviews various tools for management and monitoring, including AWS Cost Explorer and Google Cloud Cost Management tools, and the role of automation in reducing operational cost. Also features case studies from various sectors and highlight successful implementations and lessons learned. Further, the challenges and considerations with respect to security, performance trade-offs, and compliance are addressed. Conclusions are drawn with action points for organizations and future directions for research that focus on emerging technologies and their impact on cloud cost optimization.

The management and scaling of applications and services is fundamentally transformed by Cloud computing in an unprecedented way. Cloud computing made the enterprises shift their capital expenditure to operational expenditure as there is a significant reduction in hardware and maintenance costs [1].

With exponential increase in adoption of cloud services there is a strong need to reduce costs, improve efficiency, and foster innovation. One of the key challenges with enterprises is aligning cloud spending with business objectives. Ineffective cost managements lead to redundant expenses, as there is cost to even underutilized resources or suboptimal pricing models. Therefore, there is high importance of adopting cost optimization strategies enabling enterprises to maximize the value of their investments [2].

This paper aims to explore different cost optimization strategies for cloud infrastructure, focusing on Infrastructure as a Service (IaaS) and Platform as a Service (PaaS) models. Different techniques and methodologies that help reduce costs while maintaining optimal performance is discussed as applicable toa most organizations. The strategies are applicable across major cloud platforms like AWS, Azure, and Google cloud platform. The scope encompasses different aspects of cost optimization including pricing models, resource provisioning, workload analysis, cost and security monitoring.

Various pricing models being offered by the cloud service providers to suit the business needs of organizations. These include pay- as-you-go (PAYG), reserved instances and spot instances. The pay-as-you-go model involves charge based on consumption offering flexibility and scalability. This is suitable when you have unpredictable workloads [3].

The reserved instances as shown in Figure 1 involve allocation of specific amount of resources for a defined period (typically 1-3 years) in exchange for lower rates as compared to PAYG pricing model. This is suitable if the workload is predictable.

The spot instances allow users to bid on unused resources at a substantially lower price at the risk of early termination if the spot price exceeds the user’s bid [4].

Several factors influence the costs of cloud computing. Data storage is a primary driver with prices changing based on the type of storage and duration. The data transfer costs involve costs applied during transfer of data in and out of the cloud across geographical regions. The type and size of compute instances along with operating system significantly affects costs. In addition, services like advanced monitoring tools, automated backups, load balancers, databases and additional security features can increase costs [5].

Evaluating TCO especially when migrating to cloud is critical. During migration, the costs does not just include storage and compute resources but also indirect costs such as

Different variables determine the cloud costs. The local economic conditions of that geographic region and availability of resources along with compliance regulations is one key variable. When data transfer involves regions which are spread across geographical boundaries then it affects the cost. The spikes in usage of data along with irregular access patterns can lead to cost variability making budgeting challenging [7].

The key strategy is correct sizing of resources. This includes aligning the allocated resources such as CPU, memory, and storage with the actual usage required. Overprovisioning is typical in cloud setup which leads to unnecessary costs. This can be overcome with regular monitoring of usage patterns, performance metrics and either upscale or downscale the resources and pay only for what you need [3].

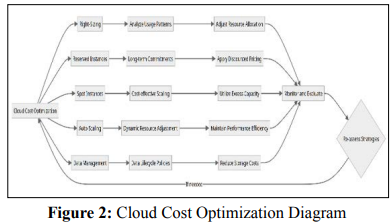

If the workloads are predictable then reserved instances provide lower costs in exchange for a committed usage. On the other hand, as shown in Figure 2 Spot Instances allow users to buy the unused resources for lower price which can highly lower the compute costs. The downside of spot instances is the risk of termination if the market prices exceed the bid price. These are suitable for fault tolerant and flexible workloads [8].

This is a powerful strategy as it dynamically adjusts the number of resources based on the demand of workload. Organizations can avoid spending too much money and thus achieve cost reduction by resizing resources without human input as conditions such as number of users or usage fluctuates. This system guarantees availability of resources on demand hence minimizing wastages while optimizing performance. These include parameters like the number of emails sent per second in a given company’s mail server system, CPU Utilization over time, etc. cloud service companies provide auto-scaling facilities whose configuration is based on predefined criteria and limits [9].

Service selection and tier are the most important things when it comes to cost optimization. For example, choosing between a standard service tier and a premium based on real needs will help avoid excess payments for premium features not needed. Moreover, making comparisons between the same services with different providers will be able to show more cost-effective options, especially if particular features or the expected performance are taken into account [10].

Workload optimization involves several strategies which include proper instance types that will match workload requirements in order not to avoid overprovisioning and waste costs. Leveraging serverless computing, such as Azure Functions, AWS Lambda or Google Cloud Functions, can be cost-effective for event-driven and intermittent workloads because organizations pay only for the actual execution time. Scalable application design by decoupling components and leveraging microservices architecture can allow granular scale and cost optimization.

Proper data management strategies can significantly decrease storage costs. Implementing data lifecycle policies that automatically transition older data into cheaper storage options. Using data compression, which decreases the volume of data stored; and choosing the proper storage class based on access frequency and retrieval times are all critical for cost control. For example, using object storage for infrequently accessed data and block storage for frequently accessed data will optimize [11].

Several steps can help in minimizing networking costs. One will be able to save on the costs associated with data transfer and enhance application performance by using content delivery networks that cache content at edge locations closer to users. The inter-region movement of data should be minimized and the movement of large data via private network connections can also help reduce the costs.

Deep insights into resource usage and cost trends can be gathered with effective tools.

The effective way to enhance efficiency in cloud environments and to reduce costs is automation. Automation in provisioning, configuring, scaling and backups can reduce the risk of human errors, costs. There are various ways to automate. One way is scripting where start and stop of instances can be automated based on the network traffic. Tools like Azure Resource Manager (ARM) can let the user to script and template the entire infrastructure. Another way is scheduled tasks which can be used to cleanup logfiles, creating database snapshots [13].

Healthcare: In order to ensure better data accessibility and patient care, a large regional hospital network switched to a cloud-based electronic health record system with auto-scaling and right-sizing. Such a change reduced their annual operational costs by 30%. The use of reserved instances and auto-scaling features from AWS enabled them to manage unpredictable loads cost-effectively, such as that experienced during flu seasons [14].

Finance: A leading investment bank moved their data analysis operations to the cloud using Google Cloud’s BigQuery and automatic data tiering. They applied data life cycle policies and used spot instances for non-critical batch processing tasks, thereby cutting down their data warehousing costs by 45% while maintaining high performance for real-time analytics [15].

E-Commerce: An international e-commerce platform used Azure Cost Management tools to optimize cloud spend across global operations. By monitoring the cost in great detail and using the pricing calculator offered by Azure, it was able to weed out inefficiencies and make optimal resource allocation, thus saving 25% month-over-month on cloud expenses [16].

Manufacturing: A leading supplier of automotive parts created a hybrid model, hosting applications on-premises in corporate data centers and colocating them to public cloud services to help balance loads with optimal costs. Implementation of serverless computing on their inventory management system resulted in a heavy drop in the operational cost because of the pay-as-you-go pricing model and less need for physical servers [17].

Cost optimization in all spheres will certainly have implications on security and compliance. Multi-tenancy for example may reduce costs but increase the risks of a data breach or privacy violations. Furthermore, data may be stored in less secure but highly cost-effective geographies in an effort to comply with cheap cost options, hence compromising adherence to regulations like GDPR or HIPAA. That means one needs to ensure measures of cost savings do not compromise security protocols or violate regulatory requirements [18].

Cost optimization efforts often lead to performance trade-offs. Using low-cost resources or decreasing the amount of resources could affect the performance and reliability of cloud services. The analysis on the impact of reduced resources on the application’s response time and user experience needs to be assessed. Businesses need to balance cost savings and ensure adequate performance to maintain quality of service and customer satisfaction.

New technologies, including serverless computing and machine learning, are going to set new and huge impacts on cost optimization strategies in cloud computing. Computing without servers can save money by abstracting the server layer; it is charged based only on the execution time, rather than the reserved capacity. On the other hand, machine learning optimizes the utilization of cloud resources by predicting usage patterns and automatically adjusting resources [19].

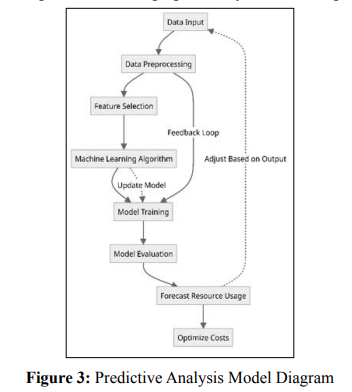

Predictive analytics is increasingly being applied to enhance cost optimization in cloud environments. As shown in Figure 3 by analyzing historical data, predictive models help to forecast future resource needs, thus enabling organizations to scale their resources up or down much in advance of the time of actual requirement. This proactive approach prevents overprovisioning and reduces waste to a great extent, bringing accuracy in cost management.

The paper investigated several strategies to optimize cloud costs: rightsizing resources, reserved and spot instances, auto- scaling, choosing the right services, workload, data, and network optimization. Additionally, reviewed management tools like AWS Cost Explorer, Azure Cost Management, and the role of automation in reducing operational costs. Also presented case studies illustrating successful implementations across industries and highlighted the important lessons learned and best practices.

For organizations that want to optimize their cloud costs, the following are the actionable recommendations that are proposed: Implement Continuous Monitoring and Right-Sizing: Regularly assess and adjust cloud resource allocations based on current and projected needs to avoid overprovisioning.

Adopt a Multi-Cloud Strategy: Leverage services from multiple providers to take advantage of the best pricing models and technologies each has to offer.

Leverage Automation: Automate routine and repetitive tasks to reduce manual overhead and minimize human errors that can lead to cost inefficiencies.

Participate in Capacity Planning: Use predictive analytics to better forecast demand and adjust resources preemptively to ensure that resource usage aligns with actual business needs.

Prioritize Security and Compliance: Ensure that cost optimization efforts do not come at the cost of security standards or regulatory compliance requirements.

Future research should include the implication of new technologies, such as artificial intelligence and blockchain, on cloud cost management. The new regulatory framework implications for cloud strategies, primarily in healthcare and finance, where compliance costs can be huge, are equally important for future studies. Further development of predictive analytics tools should be researched to make cost forecasting and resource allocation more accurate.