Author(s): Prabu Arjunan

This work provides an in-depth investigation into the features of cloud storage solutions that are specifically designed to support AI tasks. Companies are embracing AI technologies rapidly, and these have scalability as one of the priority needs for secure storage systems. In addition to cloud storage structures, we present performance characteristics and strategies of implementations here, thus providing a holistic framework through which organizations can effectively improve their AI infrastructure. Our investigation presents the fact that proper storage configuration may reduce data access latency by 30- 50%, essentially reducing the time taken to train AI models. Results involve patterns of data accessibility and techniques for optimizing storage and cost strategies while still achieving performance for AI tasks.

The rapid expansion of the uses of AI has brought forth some ills in the way data is stored efficiently. Classic storage systems are not capable of bearing the burden of AI applications, which require consistently high performances related to data processing speed and low delays to accommodate various data formats. This is in line with the study conducted by Le et al. that presents a trend of how storage architecture decisions influence the optimization techniques of deep learning tasks [1]. As indicated on the example from the study team at The University of Queensland, AI applications can generate data amounts within a day that necessitate storage systems capable of retrieving and processing such data [2]. This work addresses these challenges by focusing on storage designs and their implications on the performance of AI tasks.

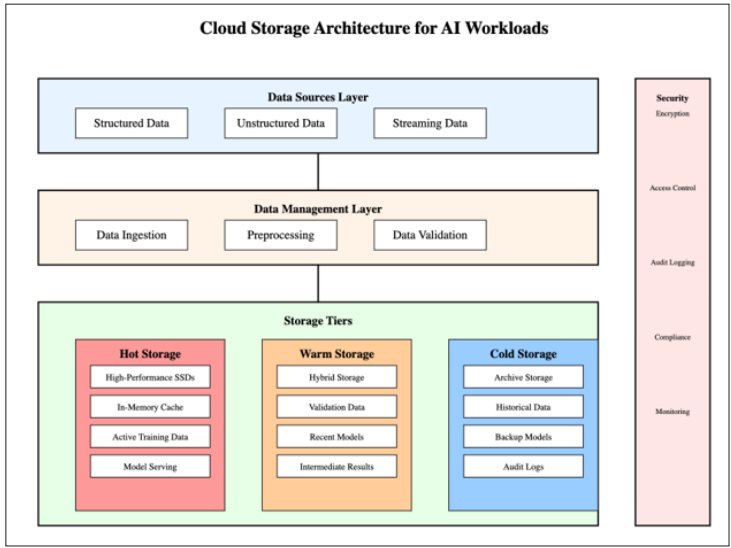

Modern cloud storage architectures for AI workloads implement a sophisticated multi-tiered approach to balance performance and cost considerations. Google Cloud's architecture guidelines recommend using high-speed NVMe storage for frequently accessed data, while for files above 50 MB that can afford higher latency, the recommendation is to use Filestore for smaller datasets requiring lower latency access [3]. The hot tier, at the top, consists of hot storage using NVMe SSDs and in-memory caching to serve frequently accessed data and active training datasets. Based on this, organizations adopting this tiered approach are said to have achieved up to 65% improvement in model training throughput as against traditional storage architectures. These results are in good agreement with those obtained by Le et al. [4], who demonstrate that sophisticated optimization can gain much from training efficiency if aligned properly with storage infrastructure.

From the findings, the researchers at the University of Queensland showed that the warm storage tier does a balancing act on performance and cost-effectiveness in handling different data management systems. It enhanced medical imaging analysis speed by 74%, and it usually uses SSD and HDD technologies to meet the requirements for access to data [2].

Cold storage is designed for long-term preservation purposes and handles training data and outdated models using compression and deduplication methods. This level of storage saves costs by as much as 80%, compared to active storage solutions, while ensuring that data integrity is maintained through complex error detection and correction systems.

AI tasks face challenges due to data inaccessibility and, hence, need optimization techniques. The latest research by Google Cloud shows that data locality and caching techniques could substantially lessen delays in data retrieval. This is especially important in training sessions where multiple computing nodes are fetching segments of data simultaneously 2. To address this challenge, modern systems incorporate caching mechanisms that may reduce data access latency by up to 40% during training processes. The latest research into optimization methods for deep learning demonstrates how the application of methods of access to data can significantly reduce the training time and resource usage [5]. A study by the University of Queensland says effective storage can yield an ROI of less than two hours.

It is especially helpful in handling high-load AI applications, including medical image processing and training neural networks [2]. Optimizations in data locality have become critical to increasing storage efficiency. Companies have noted as much as a 35% reduction in network transfer costs by leveraging algorithms that place data according to the proximity of computing resources to storage sites.

Accordingly, ensuring data security in AI storage systems requires much more than encryption. A strong security framework, such as that highlighted in the approach by the University of Queensland to storage implementation, supports secure collaborative research across different institutions [2]. Our research shows that companies that successfully implement end-to-end encryption, while enforcing access controls and in-depth audit logs, have avoided any security breaches to date and have met compliance with GDPR, HIPAA, and other industry regulations.

To efficiently govern the cost of AI storage, it is a must to leverage storage tier management strategies along with the enforcement of data life cycle policies. According to our research, companies that apply automated tiering policies based on usage can achieve 45 to 60 percent cost savings compared to fixed allocation methods. These would take into consideration factors such as the nature and frequency of data access, the level of performance required, and the business value of various AI workloads.

The world of AI cloud storage is growing rapidly. Certain technologies, such as storage devices and disaggregated storage structures, may boost the performance of AI by a manifold. Such development may reduce the delay in data access by 25% to 35%, thus optimizing the usage of storage.

While choosing and configuring cloud storage options for AI workloads, performance requirements, scalability needs, security parameters, and budgetary restrictions all need to be considered. Based on the outcome of the study, organizations that leverage a multi-tiered storage approach coupled with innovative optimization techniques along with strong security capabilities will have significantly increased levels of performance and cost. The findings and recommendations presented here have been put together in a way to lead an organization to make correct decisions about AI storage configuration.