Author(s): Pushkar Mehendale

ABSTRACT

Explainable Artificial Intelligence (XAI) aims to address the complexity and opacity of AI systems, often referred to as "black boxes." It seeks to provide transparency and build trust in AI, particularly in domains where decisions affect safety, security, and ethics. With respect to explainability, AI systems fall into three categories: opaque systems that offer no explanation for their predictions, interpretable systems whose inner workings can be analyzed to justify their outputs, and comprehensible systems that emit symbols or visualizations enabling users to reason about and interact with the AI system. Automated reasoning plays a crucial role in moving beyond these categories toward truly explainable AI. This paper presents current methodologies and challenges in XAI and discusses the importance of integrating automated reasoning. Grounded in a literature review and case studies, it offers insights into the practical applications and future directions of XAI.

Artificial Intelligence (AI) systems based on deep learning and advanced machine learning techniques have made remarkable strides, achieving impressive results in various fields. However, these systems often lack transparency, making it challenging for users to comprehend their decision-making processes. This opacity can lead to distrust and skepticism, particularly in critical applications such as healthcare, finance, and autonomous systems. Explainable AI (XAI) emerges as a promising solution to address this issue, aiming to enhance the understandability of AI systems for humans [1].

The fundamental objective of XAI is to develop models that can offer clear and concise explanations for their actions and decisions. With respect to explainability, AI systems can be broadly categorized into three main types: opaque, interpretable, and comprehensible systems. Opaque systems, at one end of the spectrum, provide no insight into their internal mechanisms, leaving users in the dark about how decisions are made [2]. Interpretable systems, at the other end, allow mathematical analysis of their operations, enabling a deeper understanding of their behavior. Comprehensible systems occupy a middle ground: they emit symbols or visualizations that support user-driven explanations, bridging the gap between opaque and interpretable systems [3].

Beyond these three types of XAI systems, we introduce the concept of truly explainable systems. Truly explainable systems make a significant leap forward by integrating automated reasoning to generate human-understandable explanations without requiring extensive human interpretation. This type of XAI system is still in its nascent stages of development, but it holds immense potential to revolutionize our interactions with AI systems. Truly explainable systems can enhance trust, foster collaboration, and ultimately pave the way for more responsible and ethical AI applications [4, 5].

The demand for XAI arises in domains where understanding the AI decision-making process is vital. Healthcare is a prime example, as AI systems are used to help diagnose diseases and propose treatments. Without explainability, medical professionals may hesitate to rely on these recommendations. Similarly, in finance, AI models used for credit scoring or fraud detection require transparency to ensure fairness and regulatory compliance [6].

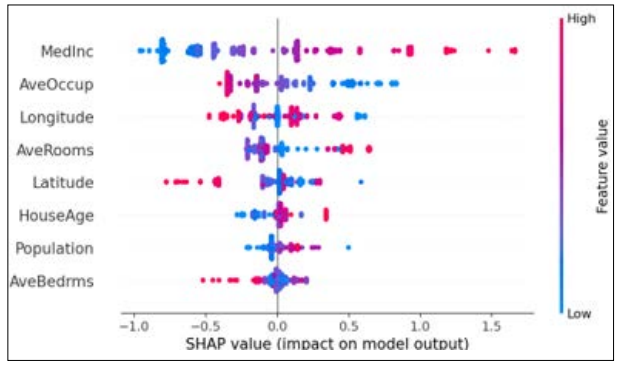

Model-agnostic approaches do not depend on the internals of the underlying model and can therefore be applied to any AI system. Examples include LIME (Local Interpretable Model-agnostic Explanations), which explains an individual prediction by fitting a simpler, interpretable model to the black box's behavior in the neighborhood of that prediction, and SHAP (SHapley Additive exPlanations), which attributes an individual prediction to the input features using Shapley values from cooperative game theory [3, 4].

Figure 1: Example of SHAP Value Explanation [12].
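SHAP builds on Shapley values. As a hedged illustration of that underlying idea (not the SHAP library's API or its approximation algorithms such as Kernel SHAP), the sketch below computes exact Shapley values for one prediction by enumerating every coalition of features. The toy model, the input, and the all-zeros baseline used to "remove" a feature are assumptions chosen for illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for a single prediction model(x).

    A feature "absent" from a coalition is set to its baseline value
    (an assumption; SHAP implementations support other conventions).
    Cost grows as 2^n model calls, so this only suits a handful of features.
    """
    n = len(x)

    def value(coalition):
        # Keep x for features in the coalition, use the baseline elsewhere.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for subset in combinations(others, size):
                s = set(subset)
                # Shapley weight: |S|! (n - |S| - 1)! / n!
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_model(z):
    """Hypothetical model: one additive term plus one interaction term."""
    return 2 * z[0] + z[1] * z[2]


x, baseline = [1, 2, 3], [0, 0, 0]
phi = shapley_values(toy_model, x, baseline)
print([round(p, 6) for p in phi])  # [2.0, 3.0, 3.0]
```

The attributions sum to toy_model(x) - toy_model(baseline) = 8 (the efficiency property), and the interaction term z[1] * z[2] is split equally between the two features that form it.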

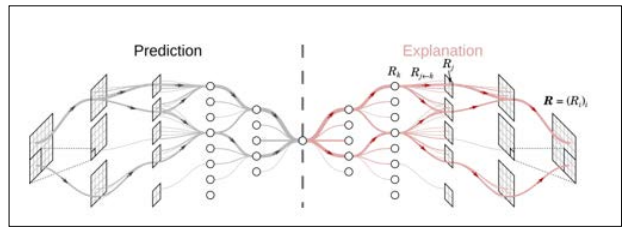

Model-specific approaches are tailored to particular types of models. For instance, decision trees and rule-based systems are inherently interpretable, while neural networks may rely on techniques such as attention mechanisms, layer-wise relevance propagation (LRP), or gradient-based methods to provide explanations.

Figure 2: LRP Visualization Example [14].
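Layer-wise relevance propagation redistributes a network's output score backward, layer by layer, onto its inputs. The sketch below implements the LRP-ε rule for a hypothetical two-layer ReLU network without biases; the weights, the input, and the ε value are illustrative assumptions, and practical implementations operate on tensors inside a deep-learning framework.

```python
def forward(x, w1, w2):
    """Bias-free two-layer network: ReLU hidden layer, single linear output.
    w1[i][j] connects input i to hidden unit j; w2[j] connects hidden j to the output."""
    hidden = [max(0.0, sum(x[i] * w1[i][j] for i in range(len(x))))
              for j in range(len(w1[0]))]
    output = sum(h * w for h, w in zip(hidden, w2))
    return hidden, output


def lrp_epsilon(a, w, relevance_upper, eps=1e-6):
    """Redistribute relevance from an upper layer to the layer below (LRP-epsilon rule).

    a: activations of the lower layer; w[i][k] connects lower unit i to upper unit k;
    relevance_upper[k]: relevance already assigned to upper unit k.
    """
    relevance_lower = [0.0] * len(a)
    for k, r_k in enumerate(relevance_upper):
        z_k = sum(a[i] * w[i][k] for i in range(len(a)))
        denom = z_k + (eps if z_k >= 0 else -eps)  # stabilizer avoids division by ~0
        for i in range(len(a)):
            # Each lower unit receives relevance in proportion to its contribution a_i * w_ik.
            relevance_lower[i] += a[i] * w[i][k] / denom * r_k
    return relevance_lower


# Hypothetical weights and input, chosen only for illustration.
w1 = [[1.0, -1.0], [0.5, 2.0], [-1.0, 1.0]]  # 3 inputs -> 2 hidden units
w2 = [1.0, 0.5]                               # 2 hidden units -> 1 output
x = [1.0, 2.0, 0.5]

hidden, out = forward(x, w1, w2)
r_hidden = lrp_epsilon(hidden, [[w] for w in w2], [out])  # output -> hidden
r_input = lrp_epsilon(x, w1, r_hidden)                     # hidden -> input
print(round(out, 4), [round(r, 4) for r in r_input])  # 3.25 [0.5, 3.0, -0.25]
```

Because the network has no biases and ε is small, the input relevances sum (approximately) to the output score. This conservation property is what lets an LRP heatmap be read as a decomposition of the prediction; a negative relevance, as for the third input here, marks evidence against the output.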

Visual explanations, such as saliency maps and heatmaps, highlight the parts of the input that most influenced the AI's decision. Interactive tools allow users to query the AI system and receive explanations in real time, fostering a more dynamic understanding of the model's behavior.
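One simple way to produce a saliency map without access to gradients is occlusion: replace one part of the input at a time with a neutral value and record how much the model's score drops. The sketch below assumes a hypothetical scoring function over a 3x3 grayscale "image" and a baseline of 0.0; practical tools usually occlude patches rather than single pixels and render the result as a color heatmap.

```python
def occlusion_saliency(model, image, baseline=0.0):
    """Occlusion saliency: score drop when each pixel is replaced by `baseline`.
    Larger values mark pixels that pushed the score up the most."""
    base_score = model(image)
    saliency = []
    for r in range(len(image)):
        row = []
        for c in range(len(image[r])):
            occluded = [list(px) for px in image]  # copy; leave the input untouched
            occluded[r][c] = baseline
            row.append(base_score - model(occluded))
        saliency.append(row)
    return saliency


# Hypothetical "model": a weighted sum that relies mostly on the center pixel.
WEIGHTS = [[0.0, 1.0, 0.0],
           [1.0, 3.0, 1.0],
           [0.0, 1.0, 0.0]]


def toy_model(img):
    return sum(WEIGHTS[r][c] * img[r][c] for r in range(3) for c in range(3))


image = [[1.0] * 3 for _ in range(3)]
for row in occlusion_saliency(toy_model, image):
    print(row)
# [0.0, 1.0, 0.0]
# [1.0, 3.0, 1.0]
# [0.0, 1.0, 0.0]
```

For this linear toy model the map simply recovers the weights; for a real network the same loop reveals which regions the prediction depends on, at the cost of one model call per occluded region.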

There is often a trade-off between the complexity of the model and its interpretability. More complex models tend to be more accurate but harder to explain. Balancing these two aspects is a significant challenge in the development of XAI systems.

This tension between model complexity and interpretability, and hence between accuracy and explainability, is a fundamental challenge for XAI and must be addressed to unlock the field's full potential [1].

On the one hand, more complex models often achieve higher accuracy because they can capture more nuanced patterns and relationships in the data. However, the more complex a model becomes, the harder it is for humans to understand how it reaches its decisions and, consequently, to trust its predictions.

On the other hand, simpler models are generally easier to interpret because they involve fewer parameters and interactions. However, they may not capture the complexity of the data as well as more complex models [2], which can lead to lower accuracy and less reliable predictions.

Researchers are exploring several approaches to balancing these competing factors: developing methods that explain complex models in simpler terms, using visualization techniques to make models more transparent, and designing models that are inherently interpretable.

By addressing the trade-off between complexity and interpretability, XAI systems can become more powerful and accessible tools for understanding and interacting with artificial intelligence. This will enable us to develop more trustworthy and reliable AI systems that can be used to solve complex problems and make better decisions.

Measuring the quality of explanations is a complex and multifaceted task that requires consideration of multiple criteria. Several factors contribute to the effectiveness of an explanation, including completeness, correctness, comprehensibility, engagement, and actionability. Completeness ensures that all relevant aspects and details are covered, while correctness guarantees accuracy and freedom from errors. Comprehensibility makes the explanation easy to understand by presenting it in clear and familiar language. Engagement keeps the reader's attention through storytelling and examples, and actionability provides practical insights that can be applied to real-world situations.

In addition to these criteria, user studies often help evaluate the effectiveness of explanations. These studies measure factors like understanding, satisfaction, and the likelihood of using the information provided. Developing standardized metrics and evaluation frameworks is crucial for advancing the field and ensuring that explanations are of high quality and meet users' needs [15].

The field of explainable artificial intelligence (XAI) aims to make complex machine learning models more transparent and interpretable to humans. This is particularly important in domains where high-stakes decisions are made based on model predictions, such as healthcare, finance, and criminal justice. However, the requirements for explainability can vary significantly across different domains and user groups.

In the medical domain, for example, healthcare professionals often need detailed causal explanations of why a particular diagnosis or treatment was suggested: medical decisions involve many interacting factors, and doctors must understand the rationale behind a model's prediction to make informed decisions. In contrast, end-users of a recommendation system may need only a simple justification for why an item or service was recommended, since they are typically less interested in the model's internals than in whether the recommendation is trustworthy and relevant to their needs.

Understanding how humans perceive and interact with artificial intelligence (AI) explanations is crucial for designing effective AI systems. Cognitive biases, user expertise, and contextual factors all influence how explanations are received and understood. Research in human-computer interaction (HCI) and cognitive psychology is essential to designing explanations that are truly helpful to users. By studying how people process and evaluate explanations, researchers can create explanations that are clear, concise, and tailored to the user's needs. This can lead to improved trust, understanding, and decision-making when interacting with AI systems.

To achieve truly explainable AI, integrating automated reasoning with AI models is essential. This involves developing systems that can generate logical explanations based on the input data and the model's decision-making process. Such systems would go beyond merely interpreting or visualizing the model's output, providing coherent, human-understandable explanations.
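A minimal sketch of how automated reasoning can yield explanations: a forward-chaining engine over hypothetical if-then rules that records, for every conclusion it derives, the rule and premises that produced it, so that any conclusion can be explained as a proof tree. The rule names and facts below are invented for illustration; in a real system the facts would come from the input data and the model's decision process rather than from hand-written rules.

```python
def forward_chain(facts, rules):
    """Apply if-then rules until nothing new can be derived.

    rules: list of (name, premises, conclusion). Returns a dict mapping each
    known fact to "given" or to the (rule name, premises) that derived it.
    """
    known = {f: "given" for f in facts}
    changed = True
    while changed:
        changed = False
        for name, premises, conclusion in rules:
            if conclusion not in known and all(p in known for p in premises):
                known[conclusion] = (name, premises)  # record the justification
                changed = True
    return known


def explain(fact, known, depth=0):
    """Render the recorded justifications as an indented proof tree."""
    pad = "  " * depth
    why = known.get(fact)
    if why is None:
        return [f"{pad}{fact}: not derivable"]
    if why == "given":
        return [f"{pad}{fact}: given as input"]
    name, premises = why
    lines = [f"{pad}{fact}: by rule {name} from {', '.join(premises)}"]
    for p in premises:
        lines.extend(explain(p, known, depth + 1))
    return lines


# Hypothetical rules and facts for a loan decision.
RULES = [
    ("R1", ["income_verified", "low_debt_ratio"], "low_risk"),
    ("R2", ["low_risk", "no_recent_default"], "approve_loan"),
]
known = forward_chain(["income_verified", "low_debt_ratio", "no_recent_default"], RULES)
print("\n".join(explain("approve_loan", known)))
```

The printed explanation traces approve_loan back through R2 and R1 to the three input facts. Because the explanation is generated from the same derivation that produced the decision, it is faithful by construction, which is the property that distinguishes reasoning-based explanations from post-hoc approximations.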

Integrating causal reasoning into AI models can help provide explanations that not only describe what the model did but also why it made a particular decision. This approach can enhance the transparency and trustworthiness of AI systems by providing deeper insights into their behavior [16].
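To make the difference between describing an association and explaining why concrete, the sketch below uses a hypothetical three-variable structural causal model: a confounder Z (for example, illness severity) that influences both a treatment X and an outcome Y. All probabilities are invented. It computes the observational quantity P(Y=1 | X=x) and the interventional quantity P(Y=1 | do(X=x)) using back-door adjustment over Z, with exact fractions.

```python
from fractions import Fraction as F

# Hypothetical model parameters (invented for illustration).
P_Z1 = F(1, 2)                                   # P(Z=1)
P_X1_GIVEN_Z = {0: F(2, 10), 1: F(8, 10)}        # P(X=1 | Z=z): severe cases treated more
P_Y1_GIVEN_XZ = {(0, 0): F(5, 10), (1, 0): F(6, 10),
                 (0, 1): F(2, 10), (1, 1): F(3, 10)}  # P(Y=1 | X=x, Z=z)


def p_z(z):
    return P_Z1 if z == 1 else 1 - P_Z1


def p_x_given_z(x, z):
    return P_X1_GIVEN_Z[z] if x == 1 else 1 - P_X1_GIVEN_Z[z]


def observational(x):
    """P(Y=1 | X=x): conditioning on X also shifts the distribution of Z."""
    joint = sum(p_z(z) * p_x_given_z(x, z) * P_Y1_GIVEN_XZ[(x, z)] for z in (0, 1))
    marginal = sum(p_z(z) * p_x_given_z(x, z) for z in (0, 1))
    return joint / marginal


def interventional(x):
    """P(Y=1 | do(X=x)): set X by intervention, keep Z at its natural distribution."""
    return sum(p_z(z) * P_Y1_GIVEN_XZ[(x, z)] for z in (0, 1))


print(observational(1) - observational(0))    # -2/25: treatment looks harmful
print(interventional(1) - interventional(0))  # 1/10: treatment actually helps
```

The association is negative only because severe cases are both treated more often and recover less often; the interventional comparison removes that confounding. A causal explanation would report the second quantity, which is the kind of "why" a purely correlational explanation can get wrong.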

Future XAI systems should focus on enhancing human-AI collaboration by enabling users to interact with and query AI models. This interaction can lead to better understanding and trust, as users can explore the AI's reasoning process and validate its decisions in real-time.

Developing interactive tools that allow users to ask questions about the AI's decisions and receive explanations can improve transparency and trust. For example, a doctor could query an AI system about why it recommended a particular treatment, receiving detailed explanations that help verify the recommendation.
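As a minimal sketch of such a query interface, the code below assumes a hypothetical linear scoring model (the feature names, weights, and threshold are invented and are not clinical guidance) and answers a "why" question by reporting the features that contributed most to the score. For a linear model this decomposition into weight times value is exact; for a complex model the contributions would instead come from an attribution method such as SHAP.

```python
# Hypothetical linear risk model; feature names, weights and threshold are invented.
WEIGHTS = {
    "symptom_score": 0.8,
    "lab_marker": 1.5,
    "age_over_65": 0.6,
    "prior_adverse_reaction": -2.0,
}
BIAS = -1.0
THRESHOLD = 0.0  # recommend the treatment when the score exceeds this value


def score(patient):
    return BIAS + sum(w * patient.get(f, 0.0) for f, w in WEIGHTS.items())


def why(patient, top_k=3):
    """Answer "why was (or wasn't) the treatment recommended?" by listing the
    features with the largest contributions (weight * value) to the score."""
    contributions = [(f, w * patient.get(f, 0.0)) for f, w in WEIGHTS.items()]
    contributions.sort(key=lambda fc: abs(fc[1]), reverse=True)
    s = score(patient)
    verdict = "recommended" if s > THRESHOLD else "not recommended"
    lines = [f"Treatment {verdict}: score {s:.2f} vs threshold {THRESHOLD:.2f}"]
    lines += [f"  {f}: {c:+.2f}" for f, c in contributions[:top_k] if c != 0]
    return "\n".join(lines)


patient = {"symptom_score": 2.0, "lab_marker": 1.0, "age_over_65": 1.0}
print(why(patient))
```

For this patient the answer lists symptom_score, lab_marker, and age_over_65 as the main drivers of a positive recommendation, which a clinician could then check against the patient's record.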

Ensuring that AI systems are ethical and fair is a critical aspect of XAI. Future research should focus on developing techniques to detect and mitigate biases in AI models and providing explanations that highlight potential ethical concerns.

AI systems can be designed to detect and mitigate biases in their decision-making process. Providing explanations that highlight potential biases and show how they were addressed can help ensure that the AI system operates fairly and ethically.
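One common way to surface potential bias is to compare a model's outcomes across groups. The sketch below computes the demographic parity difference (the gap in positive-prediction rates between groups) for hypothetical predictions and group labels, and turns it into a plain-language finding. Demographic parity is only one of several fairness criteria, and the 0.1 tolerance is an arbitrary assumption.

```python
def selection_rates(predictions, groups):
    """Share of positive predictions (e.g. approvals) within each group."""
    totals, positives = {}, {}
    for pred, g in zip(predictions, groups):
        totals[g] = totals.get(g, 0) + 1
        positives[g] = positives.get(g, 0) + int(bool(pred))
    return {g: positives[g] / totals[g] for g in totals}


def parity_report(predictions, groups, tolerance=0.1):
    """Demographic parity difference plus a plain-language explanation.
    The default tolerance is an illustrative choice, not a standard."""
    rates = selection_rates(predictions, groups)
    gap = max(rates.values()) - min(rates.values())
    detail = ", ".join(f"group {g}: {r:.0%}" for g, r in sorted(rates.items()))
    status = "potential bias" if gap > tolerance else "within tolerance"
    return gap, f"Selection rates {detail}; gap {gap:.2f} ({status})"


# Hypothetical predictions (1 = approved) and group labels.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
gap, message = parity_report(preds, groups)
print(message)  # Selection rates group A: 75%, group B: 25%; gap 0.50 (potential bias)
```

A gap alone does not prove unfairness, since base rates may legitimately differ between groups, but reporting it alongside the decision tells users where to look.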

Explainable AI (XAI) aims to make AI systems more transparent and understandable to humans. This is crucial for building trust in AI systems and ensuring that they are used responsibly. There are several approaches to XAI, each with its own strengths and weaknesses. One common approach is to use post-hoc explanation methods, which generate explanations for AI decisions after they have been made. Another approach is to use interpretable models, which are designed to be inherently understandable by humans.

A key concept in XAI is fidelity: the degree to which an explanation reflects the actual behavior of the AI system. Explanations should be both accurate and complete to avoid misleading users. Another important concept is the counterfactual: a hypothetical input that resembles the actual one but differs in one or more features. By examining which changes would alter the system's output, we can gain insight into how the AI system would behave in different situations.
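A counterfactual explanation answers the question "what is the smallest change to this input that would change the decision?" The sketch below finds one by brute-force search for a hypothetical two-feature loan classifier with made-up weights and step sizes. Practical counterfactual methods use optimization or heuristics instead, because exhaustive search grows exponentially with the number of features, and they add plausibility constraints (for example, non-negative debt) that this sketch omits.

```python
from itertools import product


def loan_model(x):
    """Hypothetical black-box classifier: 1 = approve, 0 = reject."""
    income, debt = x
    return 1 if 2 * income - 3 * debt >= 40 else 0


def nearest_counterfactual(model, x, steps, max_steps=10):
    """Brute-force search for the input closest to x (counted in steps, L1)
    whose prediction differs from model(x). steps[i] is feature i's increment.
    Returns (cost, counterfactual) or None if nothing within max_steps flips."""
    original = model(x)
    best = None
    for deltas in product(range(-max_steps, max_steps + 1), repeat=len(x)):
        cost = sum(abs(d) for d in deltas)
        if best is not None and cost >= best[0]:
            continue  # cannot beat the best counterfactual found so far
        candidate = [xi + d * s for xi, d, s in zip(x, deltas, steps)]
        if model(candidate) != original:
            best = (cost, candidate)
    return best


applicant = [25, 5]  # income, debt (hypothetical units)
print(loan_model(applicant))                                        # 0: rejected
print(nearest_counterfactual(loan_model, applicant, steps=[5, 1]))  # (1, [30, 5])
```

The result reads as an actionable explanation: raising income by one step (5 units) would have turned the rejection into an approval, whereas reducing debt would require at least two steps.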

Despite the progress made in XAI, a number of challenges remain. One is the trade-off between fidelity and simplicity: explanations that faithfully reflect the model may be difficult to understand, while simple explanations may not reflect it accurately. Another is the need for XAI methods that apply to a wide range of AI systems, since many current methods are applicable only to specific types of models.
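The fidelity-simplicity trade-off can be made measurable by scoring candidate explanations against the model they describe. The sketch below assumes a hypothetical opaque rule over a 10x10 grid of integer inputs and three rule-based surrogate explanations of increasing size; fidelity is measured as the fraction of inputs on which each surrogate agrees with the model. This agreement rate is one common fidelity measure, not the only one.

```python
def black_box(a, b):
    """Hypothetical opaque model over two integer features in 0..9."""
    return int((a >= 5 and b >= 5) or a >= 8)


# Candidate rule-based explanations, from simplest to most detailed.
SURROGATES = [
    ("a >= 5", lambda a, b: int(a >= 5)),
    ("a >= 5 and b >= 5", lambda a, b: int(a >= 5 and b >= 5)),
    ("(a >= 5 and b >= 5) or a >= 8", lambda a, b: int((a >= 5 and b >= 5) or a >= 8)),
]


def fidelity(surrogate, model, points):
    """Fraction of inputs on which the explanation agrees with the model."""
    agree = sum(surrogate(a, b) == model(a, b) for a, b in points)
    return agree / len(points)


points = [(a, b) for a in range(10) for b in range(10)]
for rule, surrogate in SURROGATES:
    print(f"{fidelity(surrogate, black_box, points):.2f}  {rule}")
# 0.85  a >= 5
# 0.90  a >= 5 and b >= 5
# 1.00  (a >= 5 and b >= 5) or a >= 8
```

Each added condition makes the explanation longer but more faithful; choosing where to stop is exactly the trade-off described above.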

Future research should focus on emerging challenges such as model drift, federated learning, and edge computing. Model drift occurs when a model's predictions become less accurate over time because the underlying data changes. Federated learning trains models collaboratively across decentralized devices without sharing sensitive data, enabling privacy-preserving machine learning. Edge computing moves computation closer to the data source, enabling real-time applications and deployment in resource-constrained environments.
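Model drift is often monitored by comparing the distribution of a model's inputs or scores in production against a reference sample from training time. The sketch below computes the Population Stability Index (PSI), one widely used drift statistic, with hypothetical data and cut points. PSI measures distribution shift, which serves as an early warning for drift rather than a direct measure of accuracy; the rule of thumb that values above roughly 0.25 indicate a major shift is an industry convention, not a formal standard.

```python
import bisect
import math


def psi(reference, current, cut_points, eps=1e-6):
    """Population Stability Index of one feature (or model score).

    Bins are (-inf, c0), [c0, c1), ..., [c_last, inf) for sorted cut_points.
    eps replaces empty-bin proportions so the logarithm stays finite.
    """
    def proportions(values):
        counts = [0] * (len(cut_points) + 1)
        for v in values:
            counts[bisect.bisect_right(cut_points, v)] += 1
        return [max(c / len(values), eps) for c in counts]

    ref, cur = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Hypothetical feature values at training time and in production.
train = [1, 2, 3, 4, 5, 6, 7, 8]
recent = [5, 6, 7, 8, 9, 10, 11, 12]
print(psi(train, train, cut_points=[3, 6]))             # 0.0: no shift
print(round(psi(train, recent, cut_points=[3, 6]), 2))  # well above 0.25: strong shift
```

Because an empty bin is clamped to eps, the index can be dominated by the choice of eps when a bin empties out completely, as it does here; in practice bins are chosen so that each holds a reasonable share of the reference data.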